
| Editor's Notes | ||||

This is the last Embedded Muse

After 500 issues and 27 years it's time to wrap up the Embedded Muse. It's said there's some wisdom in knowing when it is time to move on, and for me that time is now. I mostly retired a year or two ago, and will now change that "mostly" to "completely". To misquote Douglas MacArthur, who gave his farewell address to Congress at the same age I am now, like an old soldier, it's time for me to pack up my oscilloscope and fade away.

Thanks to all of you loyal readers for your comments, insight, corrections and feedback over the years. The best part of running the Muse has been the dialog with you. I've learned much from our interactions, and greatly enjoyed the give and take.

And thanks to the advertisers, particularly SEGGER and Percepio, who made this newsletter possible.

Back issues of the Muse remain available here.

This last Muse has three articles:

|

||||

| Quotes and Thoughts | ||||

|

“Program testing can at best show the presence of errors, but never their absence.” Edsger Dijkstra |

||||

| What We Must Learn From the 737 Max | ||||

|

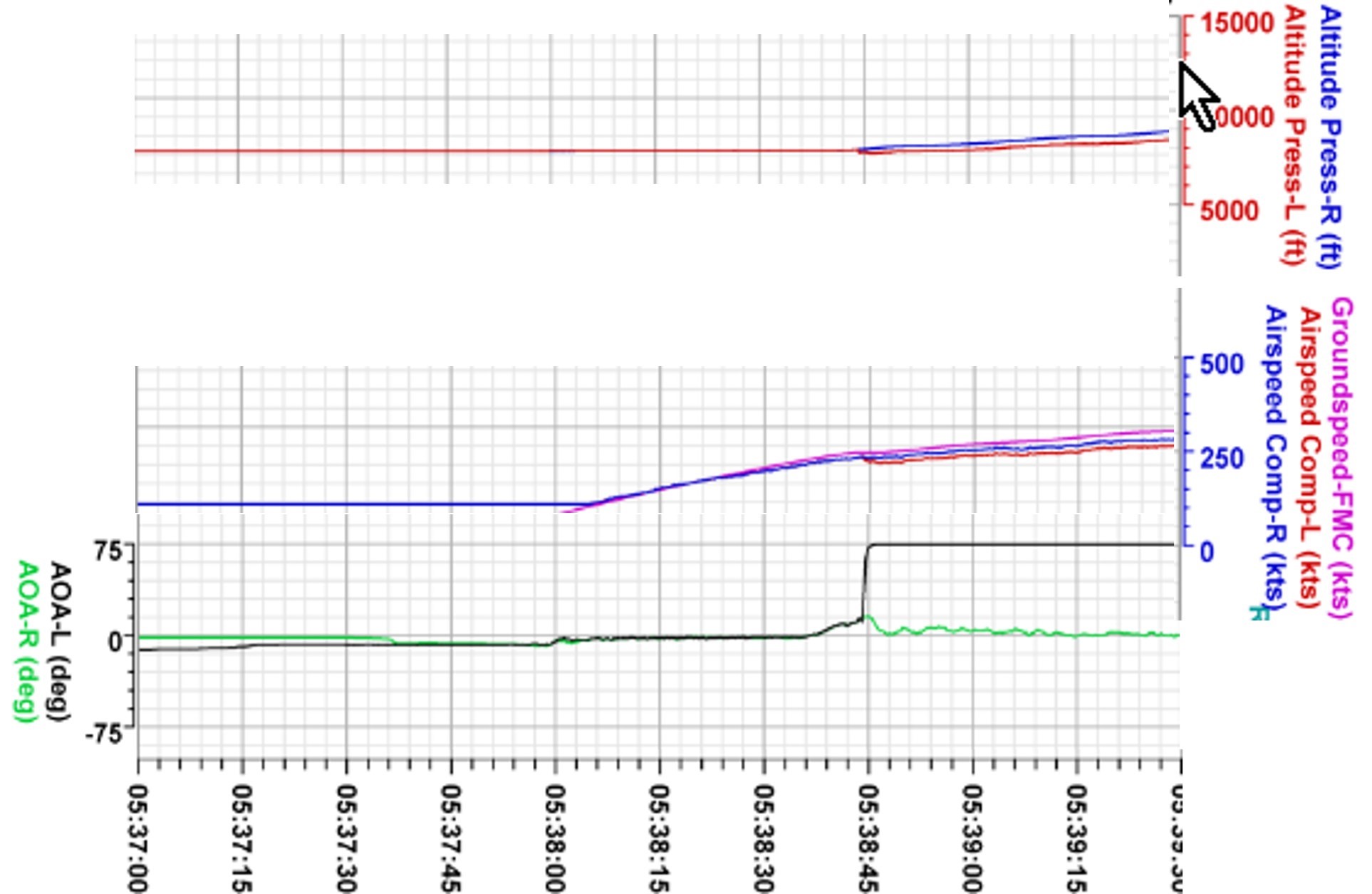

Air disasters are tragic, but are also, if we're wise, a source of important lessons. The twin crashes of the 737 Max in 2018 and 2019 offer stark warnings for all embedded developers. In my opinion the code - like so much other embedded software - mishandled the sensors. Do think about this and feed the lessons into your work.

The short version: The code read the angle-of-attack sensor, which had failed, and produced a result that was bogus. The computer assumed the data was correct and initiated actions that resulted in failure. Don't believe inputs. Hold them to scrutiny against other data and the known science.

There's plenty to pick apart in these crashes, but my focus is on how the code handled input data.

The angle-of-attack (AoA) sensor measures the angle between the wing and the oncoming airflow; loosely, how far the aircraft is pitched from level flight. The 737 Max has two of these; why only one was used is a good question, but goes beyond this discussion.

The accompanying figure is adapted from the preliminary report of the Ethiopian Airlines accident. Interestingly, the flight data recorder had 1790 channels of data.

First fail: The left AoA sensor went from about zero degrees (level flight) to 75 degrees (apparently the maximum that the sensor can report) in one sampling interval (one second). That is, the sensor reported the aircraft pitched up to nearly vertical flight in a second or less. The software should have looked at the AoA sensor rate of change and said "75 degrees in a second or less in a hundred-ton aircraft? Unlikely." The very physics involved should have signaled a warning that the data was no good.

Second fail: Two channels show the altitude virtually unchanged. Aim an aircraft up, and it will either rapidly ascend, or stall and fall out of the sky. The altitude data shows neither of these conditions. The code should have compared the strange AoA indication to a change in altitude. Again, the physics was clear, and other sensors gave the lie to the AoA.

Third fail: Three other channels show the airspeed remaining constant or increasing slightly. Rotate the aircraft to an almost vertical inclination and the airspeed will plummet. Unless you're flying an X-15. Why didn't the system compare the almost certainly bogus AoA data (due to its unlikely rapid change) to any of the three airspeed datastreams?

The software had lots of inputs that suggested the AoA sensor wasn't working properly. Alas, it ignored that data and believed the failed sensor.

I believe we all need to think about this. When you're reading data, ensure it makes sense, perhaps by comparing a reading to what you know the physics or common sense permits. A thermometer outside a bank should never read 300 degrees. Or -100. An AoA that reads 75 degrees on a commercial airplane is most likely in error. At the very least the code should say "that's strange; let's see if it makes sense."

Or, compare to previous readings and reject outliers. If the input stream is 24, 23, 25, 26, 22, 24, 25, 90 I'd be mighty suspicious of that last number. The 737 Max's AoA sensor was basically giving 0, 0, 0, 0, 0, 0, 0, 75.

Sometimes we average an input stream. Doing so blindly is generally a bad idea, as a single out-of-range reading can skew that average. Reject readings outside some limit before summing them into the average. That can be a simple comparison against a mean, or a comparison to a standard deviation, or, as shown in Muse 495, something much more robust.

Make use of other information that might be present; in the case of the Max many other channels were saying "we're flying straight and level."

CPU cycles are plentiful. Use them to build robust and reliable systems. Never assume an input will be correct. |
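The checks described above can be sketched in a few lines of C. This is only an illustration under stated assumptions, not the 737 Max logic and not the method from Muse 495: the limits (`AOA_MIN_DEG`, `AOA_MAX_DEG`, `AOA_MAX_RATE_DEG_S`), the window size and all function names are hypothetical, and the outlier filter simply compares each sample to the window median.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical limits, for illustration only. Real values come from the
   sensor datasheet and the physics of the vehicle. */
#define AOA_MIN_DEG         (-20.0f)
#define AOA_MAX_DEG         (30.0f)
#define AOA_MAX_RATE_DEG_S  (10.0f)
#define WINDOW_MAX          16

static float absf(float x) { return x < 0.0f ? -x : x; }

/* True only if the new reading is in range and has not moved faster than
   the rate limit since the previous accepted reading. The comparisons are
   written so that a NaN reading is rejected. */
bool reading_is_plausible(float prev_deg, float now_deg, float dt_s)
{
    assert(dt_s > 0.0f);
    if (!(now_deg >= AOA_MIN_DEG && now_deg <= AOA_MAX_DEG))
        return false;
    return absf(now_deg - prev_deg) <= AOA_MAX_RATE_DEG_S * dt_s;
}

/* Cross-check: two sensors measuring the same quantity should agree. */
bool sensors_agree(float a, float b, float tolerance)
{
    return absf(a - b) <= tolerance;
}

static int cmp_float(const void *a, const void *b)
{
    float x = *(const float *)a, y = *(const float *)b;
    return (x > y) - (x < y);
}

/* Mean of the samples that lie within max_dev of the window median.
   If every sample is rejected, falls back to the median itself. */
float robust_mean(const float *samples, size_t n, float max_dev)
{
    float sorted[WINDOW_MAX];
    float sum = 0.0f;
    size_t kept = 0;

    assert(samples != NULL && n > 0 && n <= WINDOW_MAX);
    memcpy(sorted, samples, n * sizeof sorted[0]);
    qsort(sorted, n, sizeof sorted[0], cmp_float);
    float median = (n % 2) ? sorted[n / 2]
                           : 0.5f * (sorted[n / 2 - 1] + sorted[n / 2]);

    for (size_t i = 0; i < n; i++) {
        if (absf(samples[i] - median) <= max_dev) {
            sum += samples[i];
            kept++;
        }
    }
    return kept ? sum / (float)kept : median;
}
```

With these assumed limits, a jump from 0 to 75 degrees in one second fails both the range and the rate test. Calling `robust_mean` on the stream 24, 23, 25, 26, 22, 24, 25, 90 with a `max_dev` of 5 drops the 90 and averages the remaining seven readings to about 24.1.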

||||

| Some Advice | ||||

|

A few thoughts from an old embedded guy:

|

||||

| A Look Back | ||||

We live in the best of times, especially for technology people. It's impossible to imagine not owning computers, or not being connected to a super-high-speed Internet. It wasn't always that way, and here's a little retrospective from over a half-century working in the embedded world.

When my dad went to MIT to study mechanical engineering, he figured with a slide rule. Twenty-five years later we engineering students at the U of MD used slide rules, as calculators were quite unaffordable. We had one computer on campus, a $10 million (around $100m in today's dollars) Univac 1108. My kids were required to own laptops when they went to college. In a single generation we went from $10m computers to using them for doorstops.

Around 1971 I desperately wanted a computer, so designed a 12-bit machine using hundreds of TTL chips and a salvaged Model-15 teletype. It worked - even better, being fully static, I could clock it at 1 Hz and use a VOM to debug it.

But then Intel announced the 4004, quickly followed by the first commercial 8-bit microprocessor, the 8008. It was hardly a computer on a chip as plenty of external components were needed. And at $120 ($900 in today's bucks) just for the chip, this was a pricey part. But suddenly a huge new market was born: the embedded system. (Actually, people had been embedding minicomputers into instruments before this, but the costs were staggering.)

I had been working as an electronics technician while in college, but the company found itself in a quandary: clearly their analog instruments were about to be made obsolete by digital technology, but the engineers didn't know anything about computers. Somehow I got promoted to engineer.

There were no IDEs or GUIs then. Our development system was an Intellec 8, an 8008-based computer from Intel. We removed the CPU board from our instrument and ran a 100-pin flat ribbon cable from the Intellec to our bus; the Intellec then took the place of our CPU.
Can you imagine running a bus over a couple of feet of cable today? At 0.8 MHz, that was not much of a problem. An ASR-33 teletype with a 10 character-per-second paper tape reader and punch interfaced to the Intellec.

Development was straightforward: Punch instructions into the Intellec's front panel to jump to the ROM loader (44 00 01 - I still remember these!). Load the big loader paper tape, suck that in. Then load the editor tape. Create a module in assembly language and punch the tape. Load the assembler tape. Load the module tape - three times, as it was a three-pass assembler. If no errors, punch a binary. Iterate for each module. Load the linker tape. Load each binary tape. Punch a final binary.

Re-assembling took three days. A primitive debugger supported several software breakpoints and let us examine/modify memory and registers. Re-assembly was so painful we'd plug in machine code patches, documenting these changes on the TTY's listings.

The code had to do multiple linear regressions so we found a marvelous floating point package by a Cal Ohne which fit into 1 KB. In the years since I've tried to track him down with no success.

The binary was just 4 KB, but EPROMs were small. A 1702A could hold 256 bytes, so a board with 16 of them was required. Those parts were $100 a pop; that board would have been $15K today.

Eventually we got a 200 CPS tape reader, then moved to an MDS-800 "Blue Box" with twin 80 KB floppies. We paid $20K for that system; in today's dollars one could get a house in some areas for that sum.

How things have changed! A hundred bucks buys more disk storage than possibly existed in the world 50 years ago. The 8008 might have squeaked out 100 FLOP/s; my Core i9 PC is rated at 109 GFLOP/s, yet the entire computer, with scads of RAM, disk, etc., was just a couple of $K. In the early 90s I started one of Maryland's first ISPs - we paid $5000/month for a T1 (1.5 Mb/s) link to the net. Today Xfinity charges me $100/month for 500 Mb/s.
Remember the spinning tape drives on mainframes? My astronomy camera today generates a 50 MB file for each image. That would fill one of those tapes. In a single night I'll take hundreds of those photos.

Our tools give us stunning capabilities. Many people complain about $5000 for a compiler, or $2000 for some other tool. Our MDS-800 cost more than a year's salary for an engineer and was worth every penny. A handful of thousands for today's tools is pretty insignificant in comparison.

I do worry that the tools are sometimes too good. We can iterate a change and be testing again in seconds. Quick iterations, absent careful thought about the problem, can kill a schedule and doom a project. That quick fix might work... but is it really correct for all possible paths through the code, for every input we can expect?

But working on that primitive 8008 was a ton of fun. As were countless other projects over the decades. How many careers can offer a decent salary and so much fun? |

||||

| Final Failure of the Week | ||||

|

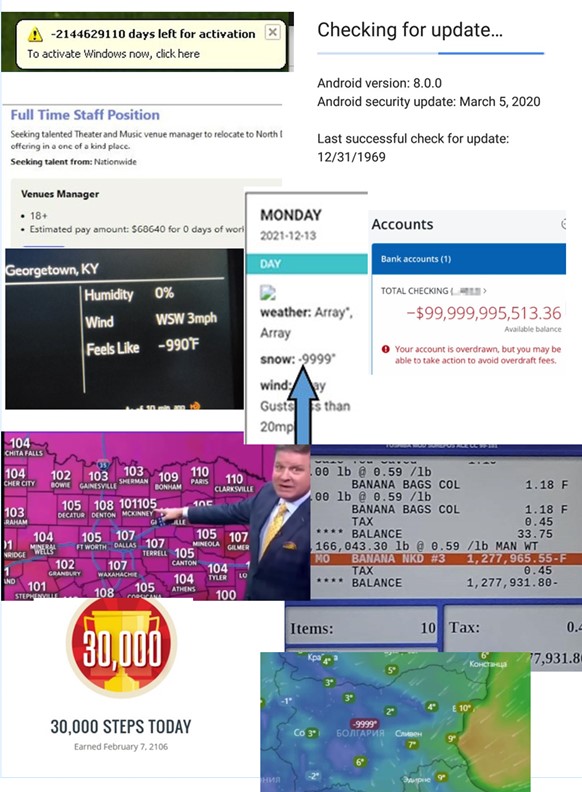

This is a medley of just a few computer failures readers have submitted over the years. They share one characteristic: the displayed results are bogus. I have hundreds of other examples.

Does a $1 million drop in the price of bananas make sense? An ocean temperature of 9999 degrees? Air temp of over 100,000?

Like the 737 Max problem detailed above, these failures result from shoddy programming: assuming the results will be just fine, all the time, despite too many examples to the contrary.

There are two takeaways I hope we can learn:

1) Check your results! A half century ago, when CPU cycles were expensive, we were taught to check the goesintas and goesoutas. It is naive to assume everything will be perfect.

2) Critically evaluate error handlers. Exception code is exceptionally difficult to test, but is as important as the mainline stuff. Some will be impossible to test, which shows the vital importance of code inspections. |
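Checking the "goesoutas" can be as simple as refusing to display a number that cannot be right. The following is a minimal sketch in C, assuming a sea-temperature display; the function name `format_sea_temp` and the limits `SEA_TEMP_MIN_C` and `SEA_TEMP_MAX_C` are hypothetical and chosen only for illustration.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdio.h>

/* Hypothetical plausibility limits for a displayed sea temperature. */
#define SEA_TEMP_MIN_C  (-5.0f)
#define SEA_TEMP_MAX_C  (40.0f)

/* Formats temp_c into buf for display. If the value is outside the
   plausible range (or is NaN), writes "--" instead and returns false so
   the caller can log or flag the fault. */
bool format_sea_temp(char *buf, size_t len, float temp_c)
{
    assert(buf != NULL && len > 0);
    if (!(temp_c >= SEA_TEMP_MIN_C && temp_c <= SEA_TEMP_MAX_C)) {
        snprintf(buf, len, "--");
        return false;
    }
    snprintf(buf, len, "%.1f C", (double)temp_c);
    return true;
}
```

A reading of 9999 then shows up as "--" rather than as a temperature, and the `false` return value gives the error handler something concrete to act on and to test.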

||||

| The Last Joke For The Week | ||||

These jokes are archived here.

Scientists created an AI that seemed to be able to answer all questions. It cured cancer and even told them how to travel faster than light. One day, one of the scientists asked it if there was a god. It was given all books ever written, all historical data and even nuclear clearance codes. The AI, after ingesting this information, simply said: There is now. |