
You may redistribute this newsletter for non-commercial purposes. For commercial use contact jack@ganssle.com. To subscribe or unsubscribe go here or drop Jack an email.


Editor's Notes


Tip for sending me email: My email filters are super aggressive and I no longer look at the spam mailbox. If you include the word "embedded" in the subject line your email will wend its weighty way to me.


Quotes and Thoughts

Software and cathedrals are much the same - first we build them, then we pray. - Samuel T. Redwine, Jr.

Tools and Tips


Please submit clever ideas or thoughts about tools, techniques and resources you love or hate. Here are the tool reviews submitted in the past. Jacob Beningo has written an interesting article about embedded Rust.


Assume Nothing


[Photo caption: A scorching snowy day]

"You will say that I am always conjuring up awful difficulties & consequences - my answer to this is it is an important part of the duty of an engineer." - Robert Stephenson, the brains behind the Britannia Bridge, which opened in 1850 across the Menai Strait between mainland Wales and the island of Anglesey. A novel design, it stood for 120 years before a fire structurally compromised it.

Raising children is a humbling experience. The young parent, full of zeal, is excited by all that he can teach that formative mind. But the experience also shapes the much less malleable adult brain. One finds that deeply held beliefs and assumptions crumble when the tough choices of reality intervene. Very good reasons arise why a core belief is, in a particular case, simply wrong.

Here's just one of many examples: I was convinced that high-school kids shouldn't own cars. Like the Amish, I felt this would disrupt family life and create even more of a barrier to communication. Then my son presented a plan to buy, with his own money, two 30-year-old VW microbuses with seized engines and a plethora of other problems, and build one working vehicle from the pair of wrecks. The result was exactly the opposite of what I expected. We spent our free time working together companionably for most of a year, rebuilding an engine, swapping transmissions, fixing the brakes and all of the other issues. He learned auto mechanics and took pride in owning the phoenix that rose from his vision and our shared experience. We established a new, special bond despite the afflictions of teenagerhood.

Parents tack and jibe, slowly learning the meta-lesson: all of our assumptions are wrong.

Building embedded systems is, too, a humbling experience. Young engineers charge into the development battle armed with intelligence and hubris, often cranking out systems that may work but are brittle.
Unfettered by bitter experience, they make assumptions about the system and the environment it works within that, while perfectly natural in an academic cloister, don't hold up in the gritty real world. Inputs are noisy. Crud gets into contacts. Mains power is hardly pristine. Users do crazy and illogical things.

The root cause of brittle systems is incorrect assumptions, assumptions that may be so banal and so obvious no one questions them. But question them we must.

For instance, what is the likelihood the sun will rise tomorrow? Dumb question; for four billion years the probability has been 1.0. Surely it's safe for an engineer to assume that the sun will indeed appear tomorrow as it always has. Five or six eons from now it will be a burned-out cinder, but our systems will be long landfilled by then.

Recently a developer told me about a product he worked on that changed the display's color scheme depending on whether it's night or day. It does a very accurate calculation of sunrise and sunset using location data. It turns out a customer took one of the units north of the Arctic Circle, where it crashed, the algorithm unable to deal with a sun that wouldn't rise for months.

The sun may not rise tomorrow. Don't count on anything.

An instrument I worked on used a large hunk of radioactive cesium-137 to measure the thickness of steel. Elaborate safety precautions included a hardware interlock to close a shutter, blocking off the beam, if the software went nuts. But at one point a defect in the hardware design caused the shutter to cycle open and closed, over and over, even though the software was correctly issuing commands to close the radiation source. It's hard to know where the invisible beam impinged, but I'm pretty sure that the VP looking down into the unit was getting a dose to his forehead. I saw him a couple of years later, his hair now snowy white. Was the gray from the radiation or from his kids with their shiny new drivers' licenses?
Hardware interlocks can fail or suffer from hard-to-diagnose design flaws.

Some scientists believe the Ishango bone demonstrates an understanding of arithmetic back in the Upper Paleolithic era. That suggests that for 20 millennia Homo sapiens has known that 1+1=2. But only a very green developer succumbs to the utter fiction that, in the computer world, one plus one always sums to two. Sure, that's what we were taught in grade school, because young children aren't equipped to deal with the exigencies of adult life. In a perfect world, a utopia that doesn't exist, that summation is indeed correct.

In an embedded system, if variables a and b are each one, a+b==2 only on a good day. It could also be 0x1ab32, or any other value, if any of a number of problems arise, such as one variable being clobbered by a reentrancy problem or a blown stack. Complexity buried in our systems, even so deeply as to be invisible in the code we're examining (such as some other task running concurrently), can corrupt even seemingly obvious truths.

The Y2K problem taught us only one of two very important lessons in writing code: memory is cheap, so never clip the leading digits of the year. (Perhaps we haven't learned even that bit of pedagogy. The Unix Y2.038K bug still looms: a signed 32-bit time_t overflows at 03:14:07 UTC on January 19, 2038. I'm reassured that will be long after senility likely claims me, but am equally sure that in 1970 the two-digit-year crowd adopted the same "heck, it's a long way off" viewpoint. It's interesting that we rail against CEOs' short-term-gain philosophy, yet practice it ourselves. In both cases the rewards are structured towards today rather than tomorrow.)

Y2K did not teach us one of the first lessons in computer science: check your goesintas and goesoutas. If the date rolls back to a crazy number, well, there's something wrong. Toss an exception, recompute, alert the user, do something!
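For dates, checking goesintas and goesoutas can be as simple as refusing timestamps that fall outside a plausible window or that run backwards. A minimal sketch, assuming a hypothetical `BUILD_EPOCH` (a correct clock can't read earlier than the moment the firmware was built) and an arbitrary upper bound of 2100; keeping seconds in a 64-bit signed integer also keeps this code itself clear of the 2038 rollover. The names and constants are illustrative only.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical bounds, in seconds since 1970-01-01 00:00:00 UTC. */
#define BUILD_EPOCH   1700000000LL  /* assumed firmware build time (Nov 2023) */
#define MAX_PLAUSIBLE 4102444800LL  /* 2100-01-01: an arbitrary upper limit */

/*
 * Returns true if `now` is believable, given `last_good`, the most
 * recent time already accepted (0 if none). A clock that reads earlier
 * than the firmware's build, absurdly far in the future, or earlier
 * than a time we already trusted is rejected rather than passed along.
 */
bool timestamp_plausible(int64_t now, int64_t last_good)
{
    if (now < BUILD_EPOCH || now > MAX_PLAUSIBLE) {
        return false;   /* outside any sane window */
    }
    if (now < last_good) {
        return false;   /* time ran backwards */
    }
    return true;
}
```

What to do on `false` (recompute, fall back to the last trusted time, alert the user) is an application decision; the point is to do something. A real system might need to tolerate small backward steps, such as a network time correction, which this sketch does not.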
Even without complex problems like reentrancy, lots of conditions can create crazy results. Divide by zero and C may well hand back a result: IEEE floating point yields infinity or NaN, and while integer division by zero is undefined behavior, some processors (the ARM Cortex-M3 and M4, for instance) quietly return zero unless divide-by-zero trapping is enabled. The number will be complete garbage, but, if not caught, that value will then invariably be manipulated again and again by higher-level functions. It's like replacing a variable with a random number.

Perhaps deep down inside an A/D driver a bit of normalization math experiences an overflow or a divide by zero. It propagates up the call chain, at each stage being further injected, inspected, detected, infected, neglected and selected (as Arlo Guthrie would say). The completely bogus result might indicate how much morphine to push into the patient or how fast to set the cruise control.

Sure, these are safety-critical applications that might (emphasis on "might") have mitigation strategies to prevent such an occurrence, though Google returns 168,000 results for the keywords "FDA recall infusion pumps." But none of us can afford to tick off the customer in this hypercompetitive world.

My files are rife with photos of systems gone bad because developers didn't look for silly results. There are dozens of pictures of big digital clocks adorning banks and other buildings showing results like "505 degrees" or "-196 degrees." One would think anything over 150 or so is probably not right. And the coldest temperature ever recorded in nature on Earth is about -129 °F (-89 °C), at the Soviet Vostok research station in Antarctica, which is not a bad lower limit for a sanity check.

Then there's the system that claimed power had been out for -470423 hours, -21 minutes, and -16 seconds. Once Yahoo showed the Nasdaq crashing -16 million percent, though - take heart! - the S&P 500 was up 19%. The Xdrive app showed -3GB of total disk space, but happily it let the user know 52GB was free. Ad-Aware complained the definitions were 730488 days out of date (Jesus was just a lad then).
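The A/D scenario suggests a defensive pattern: a normalization routine that won't divide by zero, computes in a type wide enough that it can't overflow, and rejects physically absurd answers before anyone displays them. A minimal sketch, assuming a linear two-point calibration with 16-bit counts; the struct, the function, and the -130 °F to 150 °F window (borrowed from the sanity limits just discussed) are hypothetical choices, not any real driver's API.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical two-point calibration: A/D counts at two known temperatures. */
typedef struct {
    uint16_t raw_lo, raw_hi;    /* counts at the calibration points */
    int16_t  temp_lo, temp_hi;  /* matching temperatures, degrees F */
} cal_t;

#define TEMP_MIN_F (-130)  /* a little below the coldest natural reading */
#define TEMP_MAX_F 150     /* "anything over 150 or so is probably not right" */

/*
 * Converts a raw reading to degrees F by linear interpolation. Returns
 * false, leaving *out unchanged, if the calibration would cause a divide
 * by zero or the result is outside the plausible window.
 */
bool adc_to_temp_f(const cal_t *cal, uint16_t raw, int32_t *out)
{
    int64_t span = (int64_t)cal->raw_hi - cal->raw_lo;
    if (span == 0) {
        return false;  /* bad calibration: refuse to divide by zero */
    }
    /* With 16-bit inputs each difference is at most 65535, so the product
       stays below 2^32: too big for int32_t, easy for int64_t. */
    int64_t t = cal->temp_lo
              + ((int64_t)raw - cal->raw_lo)
                * ((int64_t)cal->temp_hi - cal->temp_lo) / span;
    if (t < TEMP_MIN_F || t > TEMP_MAX_F) {
        return false;  /* sensor, wiring, or math fault: don't display it */
    }
    *out = (int32_t)t;
    return true;
}
```

Reporting failure instead of returning a number pushes the decision up to code that can show dashes, hold the last good value, or raise an alarm, rather than putting "505 degrees" on a bank sign.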
While I was trying to find out if the snow would keep me grounded, Southwest's site indicated my flight out of Baltimore was canceled but, mirabile dictu, would land on time in Chicago. Parking in the city is getting expensive; I have a picture of a meter with an $8 million tab. There's the NY Times website, always scooping other newspapers, showing a breaking story released -1283 minutes ago. A reader sent me a scan of his Fuddruckers receipt: the bill was $5.94, he tendered $6.00, and the change came out as $5.99999999999996E-02. That's pretty darn close but completely absurd.

This is a very small sample of inane results from my files of dumb computer errors. All could have been prevented if developers adopted a mind-set that their assumptions are likely wrong, chief among them the notion that everything will be perfectly fine.

None of these examples endangered anyone; it's easy to dismiss them as non-problems. But one can be sure the customers were puzzled at best. Surely the subliminal takeaway is "this company is clueless." I do think we have polarized the industry into safety-critical and non-safety-critical camps, when another category is hugely important: mission-critical. Correct receipts are mission-critical at Fuddruckers, and in the other examples I've cited "mission-critical" includes not making the organization look like an utter fool.

The quote at the beginning of this article is the engineer's credo: we assume things will go wrong, in varied and complex ways. We identify and justify all rosy assumptions. We do worst-case analysis to find those problem areas, and improve the design so the system is robust despite inevitable problems. All engineered systems have flaws; great engineering mitigates those flaws.

Software is topsy-turvy. A single error can wreak havoc. A mechanical engineer can beef up a beam to add margin; EEs use fuses and heavier wire to deal with unexpected stresses.
The beam may bend without breaking; the wire might get hot but continue to function. Get one bit wrong in a program that encompasses 100 million bits and the system may crash. That's 99.999999% correctness, a thousand times better than the Five Nines requirement and about two orders of magnitude better than Motorola's vaunted Six Sigma initiative.

I'll conclude with a quote from the final report of the board that investigated the failure of Ariane 5 flight 501. The inertial reference system experienced an overflow (due to poor assumptions) and the exception handler (poorly engineered and tested) didn't take corrective action. "The exception was detected, but inappropriately handled because the view had been taken that software should be considered correct until it is shown to be at fault."

Ah, if only the assumption that software is correct were true!
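According to the inquiry board's report, the Ariane 501 overflow occurred while converting a 64-bit floating-point value to a 16-bit signed integer. The flight software was written in Ada; the C sketch below only illustrates checking such a conversion instead of assuming it fits. In C, converting an out-of-range floating value to an integer type is undefined behavior, so the range test has to come first. The function name and the choice to clamp are assumptions; whether clamping is an acceptable recovery depends on the system.

```c
#include <math.h>
#include <stdbool.h>
#include <stdint.h>

/*
 * Converts a double to int16_t without invoking undefined behavior.
 * C truncates toward zero, so any finite value strictly between
 * -32769 and 32768 fits; anything else (or NaN) is reported, and the
 * output is clamped to the nearest limit (0 for NaN).
 * Returns true only if the value fit.
 */
bool double_to_i16_checked(double x, int16_t *out)
{
    if (isnan(x)) {
        *out = 0;
        return false;
    }
    if (x >= 32768.0) {
        *out = INT16_MAX;
        return false;
    }
    if (x <= -32769.0) {
        *out = INT16_MIN;
        return false;
    }
    *out = (int16_t)x;  /* in range: truncation toward zero */
    return true;
}
```

The boolean result is the important part: the caller learns the value didn't fit and can take corrective action instead of trusting a garbage number.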
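The Fuddruckers receipt shows the classic binary floating-point money bug: a price like 5.94 has no exact binary representation, so 6.00 minus 5.94 lands a hair under 0.06. A common remedy is to hold money as integer cents and convert to dollars only when formatting for display. A minimal sketch with hypothetical names:

```c
#include <stdbool.h>
#include <stdint.h>
#include <stdio.h>

/* Money held as a whole number of cents: exact for + and -. */
typedef int64_t cents_t;

/* Computes change due; returns false if the customer didn't tender enough. */
bool make_change(cents_t bill, cents_t tendered, cents_t *change)
{
    if (tendered < bill) {
        return false;
    }
    *change = tendered - bill;
    return true;
}

/* Formats a non-negative amount for display, e.g. 6 -> "$0.06". */
void format_dollars(cents_t amount, char *buf, size_t len)
{
    snprintf(buf, len, "$%lld.%02lld",
             (long long)(amount / 100), (long long)(amount % 100));
}
```

Addition and subtraction on integer cents are exact, so the change is always a whole number of cents and prints the way a cashier would write it.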


Engineering Notebooks


I attended college in the mainframe days. The school had one computer for its 40,000 students, a huge dual-processor Univac 1108. Eight or ten tape drives whirled; a handful of disk drives hummed, and two drum storage units spun their six-foot-long cylinders.

After 3:00 AM the machine was given over to the systems staff for maintenance of the various utilities. We'd sometimes take over the entire machine to play Space Wars; the university's sole graphic display showed a circle representing a planet and another representing the spaceship, which the players could maneuver. Part of the game's attraction was that, with $10 million of computational power fully engaged, it could even model gravitational force in real time.

There was some access to the machine via ASR-33 teletypes (properly called "teletypewriters"). But most folks submitted jobs in decks of punched cards. A box held 2000 cards: a 2000-line program. It wasn't unusual to see grad students struggling along with a stack of five or more boxes of cards holding a single program. Data, too, was on the cards. And oh, how one felt after dropping a stack and seeing the cards scrambled all over the floor!

A user would walk up to the counter in the computer science building with a stack of cards. The High Priest of Computing gravely intoned turnaround times, typically 24 hours. That meant the entire edit, compile, test cycle took a full day and night. Stupid errors cost days, weeks and months. But stupid errors abounded. If a FORTRAN program had more than 50 compile-time errors the compiler printed a picture of Alfred E. Neuman, with the caption "this man never worries, but from the look of your code, you should."

Out of that pain came a way to find the most egregious mistakes before submitting a job: playing computer. You'd get a listing and execute the code absolutely literally in your head. That technique has evolved into the modern process of inspections, which too few of us use.

Instead, the typical developer quickly writes some code, goes through the build, and starts debugging. Encountering a bug, he may quickly change that ">=" to a ">", rebuild, and resume testing. The tools are so good the iterations happen at light speed, but, unfortunately, there's little incentive to really think through the implications of the change. Subtle bugs sneak in.

In maneuvering vessels in orbit one slows down in order to speed up, and I think we need a similar strategy while debugging code. A bug may mean there's a lot of poorly understood stuff going on, which demands careful thought.

I recommend that developers use engineering notebooks or their electronic equivalent. When you run into a bug, take your hands off the keyboard and record the symptom in the notebook. Write down everything you know about it. Some debugging might be needed: single stepping, traces, and breakpoints. Log the results of each step. When you come up with a solution, don't implement it. Instead, write that down, too, and only then fix the code. The result is that instead of spending two seconds not really thinking things through, you've devoted half a minute or so to really noodling out what is going on. The odds of getting a correct fix go up. This is a feedback loop that improves our practices.

But there's more! Have you ever watched (or participated in) a debugging session that lasts days or weeks? There's a bug that's just impossible to find. We run all sorts of tests. Almost inevitably we'll forget what tests we ran and repeat work done a day or a week ago. The engineering notebook is a complete record of those tests. It gives us a more scientific way to guide our debugging efforts and speeds up the process by reminding us of what we've already done.

The engineering notebook is top secret. No boss should have access to it. This is a tool designed for personal improvement only.

What's your take? Do you use any sort of tools to guide your debugging efforts (outside of normal troubleshooting tools)?


Failure of the Week

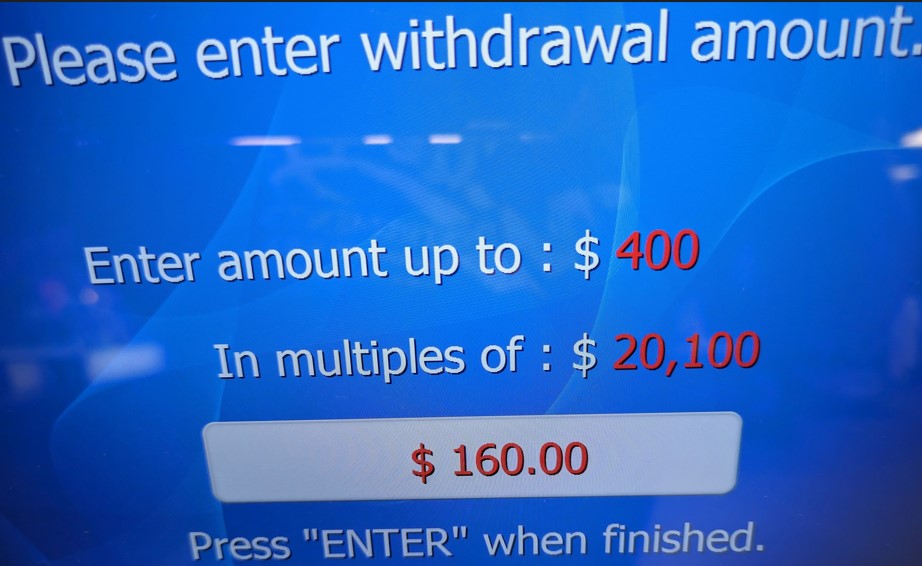

From Claude Galinsky:

William Schmidt sent this and wonders if this could be helpful in trading stocks:

Have you submitted a Failure of the Week? I'm getting a ton of these and yours was added to the queue.


Jobs!


Let me know if you're hiring embedded engineers. No recruiters please, and I reserve the right to edit ads to fit the format and intent of this newsletter. Please keep it to 100 words. There is no charge for a job ad.


Joke For The Week

These jokes are archived here. From Rick Ilowite:


About The Embedded Muse


The Embedded Muse is Jack Ganssle's newsletter. Send complaints, comments, and contributions to me at jack@ganssle.com. The Embedded Muse is supported by The Ganssle Group, whose mission is to help embedded folks get better products to market faster.