|

You may redistribute this newsletter for non-commercial purposes. For commercial use contact jack@ganssle.com. To subscribe or unsubscribe go here or drop Jack an email. |


|

| Editor's Notes |

Tip for sending me email: My email filters are super aggressive and I no longer look at the spam mailbox. If you include the phrase "embedded muse" in the subject line your email will wend its weighty way to me.

The Embedded Online Conference is coming up in April. I'll be doing a talk about designing hardware and software for ultra-low-power systems (most everything one hears about this subject is wrong). The normal rate for people signing up in March is $190, but for Embedded Muse readers it's just $90 if you use promo code MUSE2023. The regular price goes to $290 in April, though the $100 discount will still apply.

|

| Quotes and Thoughts |

Structural engineering is the art and science of molding materials we do not fully understand into shapes we cannot precisely analyze to resist forces we cannot accurately predict, all in such a way that the society at large is given no reason to suspect the extent of our ignorance. – Unknown. |

| Tools and Tips |

Please submit clever ideas or thoughts about tools, techniques and resources you love or hate. Here are the tool reviews submitted in the past. |

| On Electromigration |

Last issue's mention of electromigration brought in some fascinating responses. Jon Fuge designs ASICs at Renishaw, and sent this:

|

We are the sole designer and supplier of ASICs to our parent company for use within its metrology products. With very nearly 50 years of history (we’re 50 next month), one of the contributors to our success, besides product accuracy, is product reliability. Some of our products are used in very critical applications; some are used in difficult-to-repair locations (machine downtime of a day or more is extremely costly to business).

To contribute to our company’s reliability, we treat all ASIC designs as high reliability, guaranteeing by design 10 years of continuous operation at 125C. For background, our ASICs initially used 350nm, and now 180nm is our sweet spot, as our ASICs are mostly analogue with just supporting digital circuits. Yes, electromigration is an issue for old technologies.

To put it simply, electrical current flow is the movement of electrons. The higher the current, the more electrons need to flow. Electron flow applies a force to the conductor’s atoms, which move a small amount; increasing the current causes them to move more, and increasing the conductor temperature makes it easier for the atoms to move. Electromigration is the “wearing out” of a conductor: electron flow moves enough atoms to eventually break the circuit, although the final break will be by fusing, because the conductor is no longer thick enough to handle the current.

Designing for high reliability seems frightening at first if you don’t have a robust process, but some simple “best practices” can make reliable design something that just happens as part of the design process. For each foundry, technology node, technology flavour, you need to do some up-front work:

Work out your “k” factors (µm/mA) for each of the conductors you use. There will be one value for peak current and one for RMS current. Top metal has a different thickness than lower levels, so there are different k values for different metal levels; then you have different poly silicon conductors and diffusion conductors for higher-value resistors. When designing, you need to know your currents, which get multiplied by the k factor; the product defines the minimum width required for reliable operation.
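An illustrative aside, not part of Jon's letter: the width rule can be sketched as a table lookup and a multiplication. The k values, layer names, and function name below are hypothetical placeholders, not foundry data, and taking the larger of the peak and RMS results is an assumption about how the two limits combine.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KFactors:
    """Hypothetical electromigration k factors for one layer, in um per mA."""
    peak_um_per_ma: float
    rms_um_per_ma: float


# Placeholder numbers for illustration only; real values come from the
# foundry's reliability data for each node and process flavour.
K_TABLE = {
    "metal1": KFactors(peak_um_per_ma=0.10, rms_um_per_ma=1.00),
    "top_metal": KFactors(peak_um_per_ma=0.05, rms_um_per_ma=0.50),
}


def min_width_um(layer: str, i_peak_ma: float, i_rms_ma: float,
                 min_rule_um: float = 0.0) -> float:
    """Return the minimum conductor width in um.

    Width = k * current, evaluated for both the peak and RMS limits.
    Assumption: the wider of the two results (and of any minimum-width
    design rule) governs.
    """
    k = K_TABLE[layer]
    return max(k.peak_um_per_ma * i_peak_ma,
               k.rms_um_per_ma * i_rms_ma,
               min_rule_um)
```

With these placeholder values, `min_width_um("metal1", i_peak_ma=10.0, i_rms_ma=2.0)` is governed by the RMS limit and returns 2.0 µm.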

Don’t forget vias (metal) and contacts (for poly silicon), always at least two to give single fault tolerance, but even vias suffer from electromigration.

That gets the basic conductors in a position which can handle the long life at elevated temperatures, but that’s not the whole story.

Like us, resistors also age, sometimes not as gracefully. When simulating circuits, especially Monte Carlo analysis, make sure that you have included enough tolerance so that in 10 years, the resistance change will not affect circuit operation.
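An illustrative aside, not part of Jon's letter: one way to fold aging into a Monte Carlo run is to apply a drift term on top of the initial process tolerance and count how many trials still meet the spec. The divider circuit, the 10 kΩ nominal value, the uniform distributions, and every name below are assumptions chosen to keep the sketch small. A real flow would use the foundry's statistical models inside a circuit simulator.

```python
import random


def divider_ratio(r_top_ohm: float, r_bottom_ohm: float) -> float:
    """Output/input ratio of an unloaded resistive divider."""
    return r_bottom_ohm / (r_top_ohm + r_bottom_ohm)


def pass_fraction(n_trials: int, initial_tol: float, aging_drift: float,
                  max_ratio_error: float, seed: int = 1) -> float:
    """Fraction of trials whose aged divider ratio stays within spec.

    initial_tol and aging_drift are fractional (0.01 means 1%). Both are
    drawn from uniform distributions, independently for each resistor,
    which is a simplifying assumption.
    """
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    nominal_ohm = 10_000.0
    nominal_ratio = divider_ratio(nominal_ohm, nominal_ohm)
    passes = 0
    for _trial in range(n_trials):
        aged = []
        for _resistor in range(2):
            process = rng.uniform(-initial_tol, initial_tol)
            drift = rng.uniform(-aging_drift, aging_drift)
            aged.append(nominal_ohm * (1.0 + process) * (1.0 + drift))
        error = abs(divider_ratio(aged[0], aged[1]) - nominal_ratio) / nominal_ratio
        if error <= max_ratio_error:
            passes += 1
    return passes / n_trials
```

Comparing the result with `aging_drift=0.0` against the result with the drift expected after 10 years shows how much margin aging consumes.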

With all the above considered, you will end up with a very robust design which will meet the life requirements at high operating temperatures. It also brings very robust and very long life at normal operating temperatures, with the added benefit of very high process yield: the above makes it easy to achieve a 97% first-time pass rate at the limit of foundry process yields.
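An illustrative aside, not part of Jon's letter: the link between margin at 125C and long life at normal temperatures can be sketched with Black's equation, a widely used empirical electromigration model, MTTF = A · J^(-n) · exp(Ea / kT). The letter does not name this model, so its use here is an assumption. The function returns only the ratio of lifetimes at two temperatures for the same current density, so A and n cancel. The default activation energy of 0.7 eV is an assumed, illustrative value; real values depend on the metal and the process.

```python
import math

BOLTZMANN_EV_PER_K = 8.617333e-5  # Boltzmann constant in eV/K


def thermal_acceleration(t_use_c: float, t_stress_c: float,
                         ea_ev: float = 0.7) -> float:
    """Ratio MTTF(t_use) / MTTF(t_stress) from Black's equation.

    Assumes the same current density at both temperatures, so only the
    Arrhenius term exp(Ea / kT) differs. ea_ev is an assumed activation
    energy, not a measured one.
    """
    t_use_k = t_use_c + 273.15
    t_stress_k = t_stress_c + 273.15
    exponent = (ea_ev / BOLTZMANN_EV_PER_K) * (1.0 / t_use_k - 1.0 / t_stress_k)
    return math.exp(exponent)
```

Calling `thermal_acceleration(55.0, 125.0)` estimates how many times longer a conductor sized for 125C would last at 55C under these assumptions. The result is very sensitive to the activation energy chosen.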

Of course, getting the silicon right is only the start; your device package also has a part to play. Packagers often don’t have data to support life predictions, so you will have to do that work yourself with HAST and other verification methods. Key things to avoid: dissimilar metals (i.e. don’t wirebond gold wire to aluminium pads, etc.). Make sure the package material can cope with temperature and humidity!

As you state, electromigration is a complex process, but can be mitigated with some straightforward steps.

|

If you play with old electronics you're aware of the problem with capacitors degrading. Lewin Edwards also sees electromigration issues:

|

I enjoy reading your newsletter but #465 is the first that has stimulated me actually to reply directly - because it touches on a very interesting topic for collectors of vintage computers like myself, particularly Commodore - and I think there is an at least partially inaccurate Wikipedia quote in your newsletter.

The key topic for us collectors is "what storage and power-up conditions will maximize the remaining powered-up lifespan of these 40-year-old devices?" and to try to answer this question we need at least some guesses as to the likely failure modes and what triggers them.

It's well established (or at least canonical knowledge) that MOS, Commodore's in-house fab, had recipe issues throughout the years. Two examples that come to mind are:

1. The C64 PLA, which was initially a Signetics 82S100 fuse-programmable device. Commodore later cloned this design and made their own mask-programmed variant, as a cost reduction. A SUPERB deconstruction of the original PLA and Commodore's cost-reduced clone can be found here:

http://skoe.de/docs/c64-dissected/pla/c64_pla_dissected_a4ds.pdf - it is failures of this part that contributed to the C64's main reliability "wave". These failures are mostly attributed, not to electromigration, but to recipe issues with the amount of boron in the inter-layer glass.

(Commodore engineer Bil Herd, cited in that PDF, is still around and a mighty approachable guy with many fascinating stories from the era).

This device can be replaced by a 27C512 EPROM or by various third-party substitute parts, so the reliability of the original parts is more a matter of curiosity than being driven by a need to keep existing hardware working.

2. MOS/CSG (Commodore Semiconductor Group) also cloned half a dozen 74LS jellybean logic parts when dealing with some supply chain issues (known parts CSG made with their own house brand numbers are: LS257, LS258, LS139, LS08, LS04, LS02; 7708, 09, 11, 12, 13, 14 respectively are the CSG part numbers) and these are NOTORIOUSLY unreliable - and roughly coincide date-wise with the early PLA fiasco. The failure modes of these parts have not, to my knowledge, been analyzed to any exhaustive degree precisely because these are jellybeans - standard parts from other manufacturers will drop in, so there's no real reason to care about why these particular parts were so unreliable. They are considered such a timebomb that it is SOP to replace them all when troubleshooting a machine. Micron brand RAM chips when found in a C64 are similarly poorly regarded. Again, such a multi-source common part that nobody has bothered to reverse-engineer the failure mode(s) to my knowledge.

Where this starts to get interesting and relevant to the electromigration topic is with some of the slightly more recent custom parts from CSG. In particular, the later "264 series" 8-bit machines from Commodore (the only common variants being the Commodore 16 and Plus/4, though other family members do exist) use the 7501 CPU and the 7360 TED (Text Editing Device). The 7501 is very similar to the C64's 6510; it's a 6502 core with a couple of GPIOs on it. The TED is an all-in-one chip that does basically everything in the computer that isn't part of the CPU/ROM/RAM. Again, substitutes exist for the 7501, but the TED is a very special animal with no direct substitute. Although work is being done to create FPGA-based replacements, there is keen interest in preserving the remaining TEDs in working order.

This brings us back to the topic of electromigration. There is an ongoing controversy in the field as to whether it is meaningful to add heatsinks to these parts, the key question being "will it observably extend the life of the computer?" There's a significant contingent of collectors who, on acquiring a new machine, will immediately replace all electrolytic capacitors, put small passive heatsinks on the major ICs, and remove the RF shielding so the computer can be reassembled with the heatsinks in place. As you can imagine there's a lot of anecdotal talk about "I knew a guy who didn't heatsink his computer and it only lasted a month after he acquired it, whereas my machine with heatsinks has been working for a year". But electromigration is not the only possible source of failure in these devices - besides the recipe issues above, there are also considerations about thermal cycling stressing the bond wires or the die itself (or features on the die), for example. And inrush current at power-on, and (and... and...).

As the engineer who designed the 264 family, Bil has been asked questions about this many times at vintage computer presentations. He points out that these chips were designed to survive at an operating temperature of 0-70 Celsius for an appliance lifespan of perhaps two to five years at most. Well, really they were designed to be in a comfy part of the bathtub curve when the computer warranty ran out in 12 months! All these chips are now between 37-38 years old and have been both stored and powered up for an unknown length of time at an unrecorded set of ambient temperatures.

The overall picture, then, is that it's impossible to perform any meaningful test that would demonstrate whether heatsinking these devices extends their remaining lifespan or not. The only way to perform such a test would be to acquire a batch of identical, unused NOS devices and subject them to testing under various conditions. Nevertheless, the anecdotes (and discussions of boron-induced corrosion, electromigration and other time- and temperature-bound failure modes) continue to occupy the collector community and are the source of many heated arguments :) |

|

| More on Security |

My thoughts on security elicited some interesting replies. Scott Tempe wrote:

|

> Security costs money. When our perfectly-engineered device competes against one from China at half the cost with ten times the vulnerabilities, it's hard to see how we can be successful.

Another factor is customers don't see security unless it fails, and maybe not even then. The vulnerable Chinese product could function perfectly for years because no one has found the flaw. Or maybe someone has found a flaw, and has stolen your data, but you haven't realized it yet. To the customer those two products probably look identical, except one costs half as much as the other.

Security involves adhering to a set of requirements exactly and not doing anything else, often by adding code to make sure it doesn't do anything else! That's a very different mindset from making a product that pleases the customer with all its features and abilities.
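An illustrative aside, not part of Scott's letter: "adding code to make sure it doesn't do anything else" often takes the form of an allow-list parser that rejects anything it does not explicitly recognize. The command names, length limit, and numeric range below are hypothetical.

```python
# Hypothetical command set: name -> exact number of arguments allowed.
ALLOWED_COMMANDS = {"GET_TEMP": 0, "SET_RATE": 1}
MAX_LINE_LENGTH = 32


def parse_command(line: str):
    """Parse one command line, rejecting everything not explicitly allowed.

    Returns (name, argument), where argument is an int or None.
    Raises ValueError on any input outside the allow-list.
    """
    if len(line) > MAX_LINE_LENGTH or not line.isascii():
        raise ValueError("line too long or not ASCII")
    parts = line.split(" ")
    name, args = parts[0], parts[1:]
    if name not in ALLOWED_COMMANDS:
        raise ValueError("unknown command")
    if len(args) != ALLOWED_COMMANDS[name]:
        raise ValueError("wrong number of arguments")
    if name == "SET_RATE":
        # isdigit() rejects signs, spaces, and empty strings.
        if not args[0].isdigit():
            raise ValueError("argument is not an unsigned integer")
        rate = int(args[0])
        if not 1 <= rate <= 1000:
            raise ValueError("rate out of range")
        return (name, rate)
    return (name, None)
```

The parser never tries to repair or guess at malformed input. Anything outside the short list of accepted forms raises an error.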

For a good introduction to security, please see Ross Anderson's "Security Engineering". The third edition is available in print, and you can read the second edition as PDFs at https://www.cl.cam.ac.uk/~rja14/book.html

And anyone with a smattering of security-based thinking won't click on that link; they'll do their own search. :-)

|

Philip Johnson took me to task about this:

|

>Unless, that is, that they conform to some unknown "standards" that I cannot imagine being specific enough to mean much.

It surprises me that you didn't actually look into the framework recommendations. NIST has been working on them for years. Sure, there are some "excesses" in recommendations as with any organizational-level framework. Some may be too burdensome for startups but necessary for large companies. But most of the recommendations related to the software implementation should definitely be happening. A sampling off the top of my head:

- Using and verifying hashes of executables during updates

- Keep a software BOM. Actually update your dependencies when vulnerabilities are discovered and addressed.

- Have secure settings by default (and stop using default passwords!)

- Validate your system inputs

- Use proper access restrictions within your company/network/storage/etc.

- Actually test your software (e.g., fuzzing, to make sure you validate your inputs)

- Use up-to-date tools (I still cannot believe that in 2023, I run into teams who are "stuck" on GCC 4.9)

- Actually fix your software when vulnerabilities are discovered

- Develop a risk model to determine what your system's real risks are
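An illustrative aside, not part of Philip's letter: the first item in the list, verifying the hash of an executable during an update, can be sketched with Python's standard `hashlib` and `hmac` modules. The function names are hypothetical. A bare hash only helps if the expected value arrives over a trusted channel, such as a signed manifest, which this sketch does not cover.

```python
import hashlib
import hmac


def sha256_of_file(path: str, chunk_size: int = 65536) -> str:
    """Return the SHA-256 digest of a file as lowercase hex."""
    digest = hashlib.sha256()
    with open(path, "rb") as image:
        # Read in chunks so large update images need not fit in memory.
        for chunk in iter(lambda: image.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def image_matches(path: str, expected_hex: str) -> bool:
    """Check an update image against the digest published for it.

    compare_digest is used so the comparison time does not depend on
    where the two strings first differ.
    """
    actual = sha256_of_file(path)
    return hmac.compare_digest(actual, expected_hex.strip().lower())
```

An updater would call `image_matches()` before installing anything and refuse the update when it returns `False`.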

> But I just don't see a practical way to mandate secure code

In most cases, the problems I see frequently mentioned in the news are tied to obvious low-hanging fruit: default passwords, not updating software when vulnerabilities are discovered, not updating your dependencies when they have vulnerabilities that are fixed. Sure, you cannot mandate secure code. But at the same time, we, collectively, should have mechanisms in place so we can take action if a company is shown to be repeatedly negligent.

Take the Equifax breach, for example - the one that perhaps makes me angriest of all - related to an outdated dependency, lack of network segmentation, and inadequate encryption of personal data. Their website to allow you to check if you were impacted by the breach had its own negligence, including the general appearance of a phishing website and a flawed TLS implementation. Collecting data on millions of people without their consent, not taking proper precautions to protect that data, and barely a slap on the wrist as a result.

I, for one, welcome mechanisms that would actually enable enforcement against negligent companies. Of course, accountability seems to be lacking up and down the chain throughout the world, so that still seems like a pipe dream. Which is an even bigger problem.

> Security costs money. When our perfectly-engineered device competes against one from China at half the cost with ten times the vulnerabilities, it's hard to see how we can be successful.

I don't really have cost sympathy here. There are also costs to not having companies secure devices, and those are borne by the users and those who are impacted by the botnets that attack them. I'm not fine with saying that companies need to make money, so security is fine to throw by the wayside because it "costs too much". It is incomplete accounting. |

Steve Bresson wrote:

And this is from Vlad Z:

|

> I'm not entirely sure what this word-salad means. It appears that developers, or at least their organizations, will be held accountable for insecure code. Unless, that is, that they conform to some unknown "standards" that I cannot imagine being specific enough to mean much.

I was going to comment sooner, but I got Covid, and for 4 days was mostly sleeping. Now, I am in a mostly normal state and capable of irritating people with my opinion.

I think you are conflating security with safety. Safety can be tested and regulated by codes, like in construction. Security can't be. I come from a society where the government was paranoid about security, and yet I can tell you stories about casual access to the data that was supposed to be secret. The saving grace was that nobody cared about that data.

In my experience, security is a state of mind. Israel is secure because of that state of mind. In the US, from what I have seen, many people dealing with secure development do not take it seriously. To be sure, there are projects that are critical, and people are trained to deal with them, but it's not a universal attitude.

I am not sure what the "NIST Secure Software Development Framework" can be. Secure in the sense that your backups are safe? Or in the sense that an intruder cannot blow the stack? I do not think that the issue of security can be addressed at all. Open borders is a security issue, and yet it is ignored. Chinese phishing efforts are a security issue, and yet isolating corporate networks from personal use is not done, at least not in the places where I worked...

Security is the state of mind of the entire population. It cannot be legislated. IMHO.

|

|

| Speaking Truth to Power |

I believe in absolute honesty in an engineering environment. Difficult truths become much harder if left unspoken, and it’s impossible to hide from reality. An example is scheduling: the IEEE code of ethics says, among other things, that we promise to be accurate in our estimates, even though the boss might not want to face the truth.

Les Chambers wrote a fascinating account of the Deepwater Horizon oil spill which places at least some of the blame on one manager who was unwilling to step in and accept the unpleasant truths.

Sound familiar? Do you remember Roger Boisjoly?

Mr. Boisjoly was the Morton-Thiokol engineer who warned that it was a bad idea to launch the space shuttle Challenger on a cold January day. He had the guts to stand up for what he believed. Management, under sharp pressure from NASA, overruled his objections and gave the approval to launch. Though he was vindicated, the company pulled him off space work and he became a pariah. Doing the right thing is not always rewarded.

This was not a one-time bit of heroics; he had issued a memo the previous year highlighting the problem.

Unfortunately, Mr. Boisjoly passed away in January, 2012, and an entire generation has come into its own since that awful day in 1986. Many younger engineers have never heard of him.

In his article, Les Chambers goes on to describe a situation he found himself in, where management was demanding he abandon his strongly-held position about the safety of a device. Mr. Chambers refers to “speaking truth to power,” which is an old Quaker call to the bravery required when one has to say the unpleasant to powerful opponents.

To quote his article: "This was a blatant violation of everything we knew to be professional, honest and right. But how? How can you buck the momentum of a massive project proceeding inexorably to delivery. How can you do what you know is right in the face of massive cost/time pressure and political will? How can one be a righteous person in a unjust world?"

He goes on to cite Albert Hirschman, an MIT "social scientist" who gives three options to people finding themselves in these sorts of uncomfortable positions (again, quoting the article):

- Loyalty. Remain a loyal “team player” (shut up and do what you’re told)

- Voice. Try to change the policy (speak truth to power)

- Exit. Tender a principled resignation (quit).

The article explores each of these options in some detail. In engineering we’re sometimes called on to make ethical decisions, and I highly recommend Mr. Chambers’ article to help refine your thinking when caught in such a position. |

| Failure of the Week |

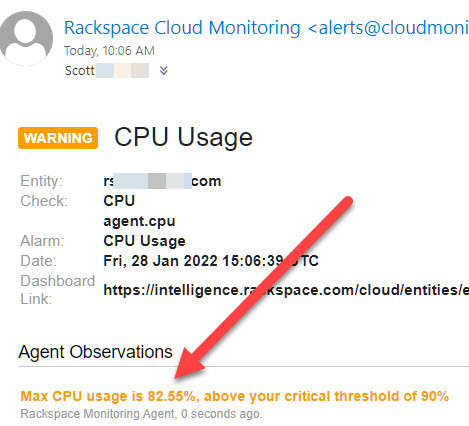

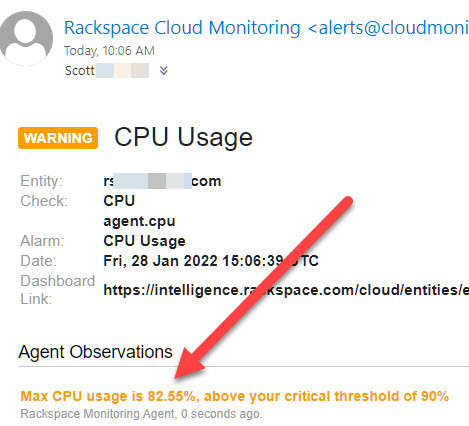

From Scott Hamilton:

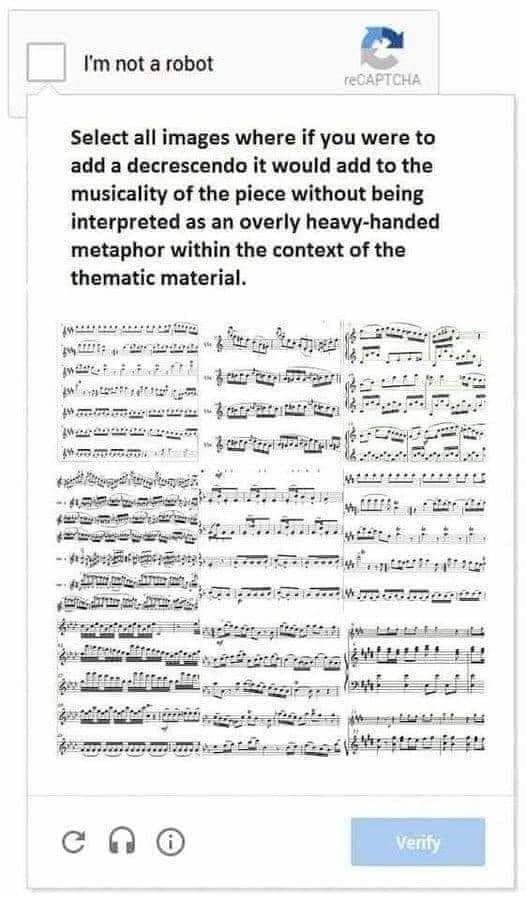

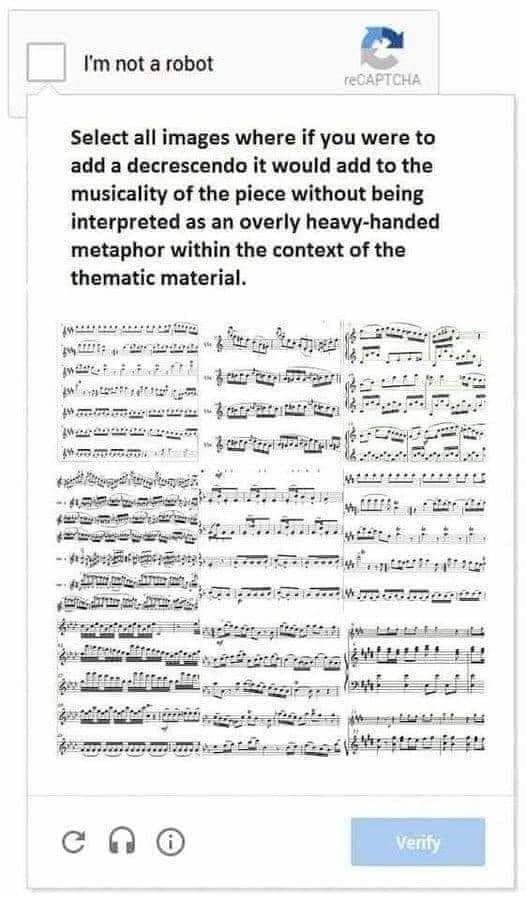

Aonghus Ó hAlmhain sent this - there's nothing like planning for the future:

Have you submitted a Failure of the Week? I'm getting a ton of these and yours was added to the queue. |

| Jobs! |

Let me know if you’re hiring embedded engineers. No recruiters please, and I reserve the right to edit ads to fit the format and intent of this newsletter.

Please keep it to 100 words. There is no charge for a job ad.

|

| Joke For The Week |

These jokes are archived here.

|

| About The Embedded Muse |

The Embedded Muse is Jack Ganssle's newsletter. Send complaints, comments, and contributions to me at jack@ganssle.com.

The Embedded Muse is supported by The Ganssle Group, whose mission is to help embedded folks get better products to market faster. |