Go here to sign up for The Embedded Muse.

|

||||

You may redistribute this newsletter for non-commercial purposes. For commercial use contact jack@ganssle.com. To subscribe or unsubscribe go here or drop Jack an email. |

||||

| Contents | ||||

| Editor's Notes | ||||

|

Tip for sending me email: My email filters are super aggressive and I no longer look at the spam mailbox. If you include the phrase "embedded muse" in the subject line your email will wend its weighty way to me.

I'm off sailing for a couple of months so may be very slow in replying to email.

Memfault's Interrupt blog is always worthwhile. This entry shows how to use the open source CodeChecker static code analyzer.

Ingo Marks sent a link to an Ada implementation (https://www.sweetada.org/) called SweetAda. From the web site:

SweetAda is a lightweight development framework whose purpose is the implementation of Ada-based software systems. The code produced by SweetAda is able to run on a wide range of machines, from ARM® embedded boards up to x86-64-class machines, as well as MIPS® machines and Virtex®/Spartan® PowerPC®/MicroBlaze® FPGAs. It could theoretically run even on System/390® IBM® mainframes (indeed it runs on the Hercules emulator). SweetAda is not an operating system, however it includes a set of both low- and high-level primitives and kernel services, like memory management, PCI bus handling, FAT mass-storage handling, which could be used as building blocks in the construction of complex software-controlled devices. |

||||

| Quotes and Thoughts | ||||

Far too often, 'software engineering' is neither engineering nor about software. Bjarne Stroustrup |

||||

| Tools and Tips | ||||

|

Please submit clever ideas or thoughts about tools, techniques and resources you love or hate. Here are the tool reviews submitted in the past. Guy Griffin recommends SpeedCrunch:

Tilak Rajesh likes Lizard:

|

||||

| Ram Tests on the Fly, Redux | ||||

While many systems sport power-on self-tests, in some cases, for good reasons or bad, we're required to check RAM continuously as the system runs. Since the RAM test necessarily runs very slowly, so as not to turn the CPU into a tortoise, I think that in most cases these tests are ineffective. Most likely the damage from bad RAM will be done long before the test detects a problem. But sometimes the tests are required for regulatory or marketing reasons. It makes sense to try and craft the best solution possible in the hope that the system will pick up at least some errors.

In the last issue I discussed hard errors - those generally reproducible problems that stem from bad hardware. But some systems may be susceptible to transient problems. A bit might mysteriously change value, yet the memory array consistently passes standard RAM tests.

Though these "soft" errors can come from a variety of sources (power fluctuations, poor electrical design, etc.), aliens account for most. Aliens, that is, meaning invaders from outer space. High energy streams of protons and alpha particles that comprise cosmic rays do cause memory bits to flip unexpectedly.

Then there's Maxwell's curse: Shoving electrons around in a circuit generates electromagnetic fields that can affect other parts of a system... or even other systems. Long ago I instrumented a steel rolling mill. A PDP-11 main controller lived in an insulated NEMA cabinet, but was connected to numerous custom controllers dispersed around the 1000 foot-long line. A house-sized motor consuming thousands of amps reversed direction every few seconds to move the steel back and forth under the rollers. I had argued for a fiber connection between boxes but was overruled as optical connections were still somewhat expensive at the time. The motor induced so much energy that the wiring between controllers - and a lot of electronics - quite literally smoked. The customer found money for fiber after that.
But even a small system operating in a benign environment can suffer random bit flips from electromagnetic energy created from switching logic. Big memory arrays, being highly capacitive, are particularly vulnerable.

Back when I was in the in-circuit emulator business we added a RAM test feature to our products. Since our customers used the units to bring up hardware as well as software, it seemed a great idea to help them evaluate their RAM. That feature turned out to be a nightmare as something like a third of the users called to complain that the test gave erratic results. Two successive checks might give a few very different errors. Sometimes this was a result of an impedance mismatch between the tool and the customers' systems, but in general we found that the targets' designs were suffering from Maxwell effects. Driving RAM created transients that wrote incorrect data. Or sometimes the memory was fine but EMF distorted, momentarily and erratically, the data latched into the processor.

Those effects are most severe when a lot of bits on the bus change (since that's when the most electromagnetic energy is radiated), so it was, and still is, most common to see transients when many of the data bits differ from the address bits, like reading 0x0000 from address 0xffff. So we devised stress tests to pound on the busses hard. For instance, this snippet in x86 lingo tap-dances on the bus like King Kong on a Yugo:

    ; NASM syntax, 16-bit code assumed
    mov si,0xaaaa           ; si -> source address
    mov di,0x5555           ; di -> destination address
    mov word [si],0xffff    ; write ffff to aaaa
    mov ax,[si]             ; read ffff from aaaa (x86 has no memory-to-memory mov)
    mov [di],ax             ; write ffff to 5555
    cmp word [di],0xffff    ; check result

A lot of bits change in a very short time. Poor design invokes Maxwell's curse and the final compare instruction will fail.

Soft errors are just as perilous as a true hardware fault. A single-bit error in the stack, even if transient, can cause a crash or error condition. A corrupted variable may bring the application to its knees, create fun and embarrassing results, or put the system into a dangerous mode.

Soft errors are transient. Conventional RAM tests won't generally detect them. Instead of looking for errors per se, a soft RAM test checks for memory consistency. Does each location contain what we expect?

It's a simple matter to checksum memory and compare that to a previous sum or a known-good value. But variables change constantly in a running program. That's why they're called "variables." It's generally futile to try and track the microsecond to microsecond contents of hundreds or thousands of these, unless you have some knowledge that an important subset stays constant or within some known range of values.

Sometimes it takes several machine cycles to set a variable. A 16 bit processor updating a 32 bit long will execute several machine instructions to save the data. An interrupt between those instructions leaves the variable half-changed. If your RAM test runs as a task or is invoked by a timer ISR, it may examine a variable in an indeterminate state. Be sure to preserve the reentrancy of every value that's part of the RAM test.

I once saw an interesting solution to the problem of finding soft errors in a program's data space. Every access to every variable was encapsulated by a driver, which computed a new checksum for the data block after each write operation. That's simpler than re-summing memory; instead figure how the checksum must change based on the previous and new values of the item being changed. Since two values - the variable and checksum - were updated the code used semaphores to be reentrant-safe. Slow, sure, but effective.
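The checksum-updating driver described above can be sketched in C. This is a minimal illustration, not the code from that system: the block size, the function names, and the `enter_critical()`/`exit_critical()` hooks are all hypothetical, and a simple 16-bit additive checksum is assumed. On a real target the hooks would take a semaphore or mask interrupts; here they are no-ops.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define BLOCK_WORDS 8u               /* hypothetical size of the protected block */

static uint16_t block[BLOCK_WORDS];  /* the protected variables */
static uint16_t block_sum;           /* running additive checksum of block[] */

/* Hypothetical critical-section hooks. A real system would take a
   semaphore or disable interrupts; these placeholders do nothing. */
static void enter_critical(void) {}
static void exit_critical(void) {}

/* All writes go through the driver. The checksum is adjusted by the
   difference between old and new values rather than re-summed. */
void protected_write(size_t index, uint16_t value)
{
    enter_critical();
    block_sum = (uint16_t)(block_sum - block[index] + value);
    block[index] = value;
    exit_critical();
}

uint16_t protected_read(size_t index)
{
    return block[index];
}

/* The RAM test: re-sum the block and compare with the running checksum.
   A mismatch means something changed without going through the driver. */
bool block_is_consistent(void)
{
    uint16_t sum = 0;
    enter_critical();
    for (size_t i = 0; i < BLOCK_WORDS; i++) {
        sum = (uint16_t)(sum + block[i]);
    }
    bool ok = (sum == block_sum);
    exit_critical();
    return ok;
}
```

Because the variable and the checksum are updated inside one critical section, the background check never sees a half-updated pair. An additive checksum will miss some multi-bit errors that happen to cancel out.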
Many systems boot from relatively-slow flash, copy the code into faster RAM, and run the code from RAM. Others boot from a network into RAM. Ulysses had himself tied to a mast to resist the temptation to write self-modifying code (the Odyssey was all Greek to me), a wise move that, if emulated, makes it trivial to look for soft errors in the code. On boot, checksum the executable and have the RAM test repeat that checksum, comparing the result to the initial value. Since there's never a write to firmware, don't worry about reentrancy when checksumming the program.

It's reasonable to run such tests on RAM-based program code since the firmware doesn't change during execution. And the odds are good that if an error occurs, the application won't crash before the check gets around to testing the bad location, since the program likely spends much of its time stuck in loops or off handling other activities. In today's feature-rich products the failure could occur in a specific feature that's not often used.

Is a CRC better than a simple checksum? After all, if a couple of bits in different locations fail it's entirely possible the checksum won't signal a problem. CRCs dominate communications technology since noisy channels can corrupt many bytes. But they're computationally much more expensive than a checksum, and one goal for RAM testing is to run through all locations relatively quickly while leaving as many CPU cycles as possible for the system's application code. And soft RAM errors are not like noisy communications; most likely a soft error will result in merely one word being wrong.

Caveats

While marketers might like the sound of "always self-tests itself!" on a datasheet, the reality is rather grim. It's tough to do any sort of consistency check on quickly-changing locations, such as the stack. The best one can hope for is to check only sections of the memory array.

Unfortunately on-the-fly RAM tests pose a Hobson's Choice with respect to performance.
We want to run the tests often and fast, picking up errors before they wreak havoc. But that's computationally expensive, especially when reentrancy considerations mandate taking locks or disabling interrupts. If these tests are truly important one must push the application's code to a low priority and lavish the bulk of CPU cycles on the tests. Few of us are willing to do that.

Processors with caches pose particularly-thorny problems. Any cached value will not get tested. One can glibly suggest a flush before each test, but, since the check runs often and fast, such action will more or less make the cache worthless.

Dual-ported RAM or memory shared between processors must be locked during the tests. It's possible to use a separate CPU just to run the test (I've seen it done), but bus bandwidth will cripple the application processor's performance.

Finally, what do you do if the test detects an error? Log the problem, leaving some debugging breadcrumbs behind. But don't continue to run the application code. Recovery, unless there's some a priori knowledge about the problem, is usually impossible. Halt and let the watchdog issue a reset or go into a safe mode.

Hi Rel Apps

Soft RAM errors are a reality that some systems cannot tolerate. You'd hate to lose a billion-dollar space mission from a single microsecond-long glitch. Banks might not be too keen on a system in which a cosmic ray changes variable check_amount from $1.00 to $1000001.00. Worse would be holding a press conference to explain the loss of 100 passengers because the code, though perfect, read one wrong location and "crashed."

In these situations we need to mitigate, rather than test for, errors. When failure is not an option it's time to rely on additional hardware. The standard solution is to widen each RAM location to add an error correcting code (ECC). There will be a substantial amount of hardware needed to encode and decode ECCs on every memory access as well.
A word of 2^n bits needs n+1 additional check bits to correct for any single-bit error. That is, widen RAM by 6 bits to fix any one bit error in a 32 bit word (32 = 2^5, so n = 5). Use n+2 extra bits to correct any single-bit error and also to detect (and flag) two-bit errors. Note that ECC will protect against any RAM error, even one that's in the stack.

A few wrinkles remain. Poor physical organization of the memory array can defeat any ECC scheme. In the olden days DRAM was available only in one bit wide chips. A 16K-word, 16-bit-wide array used sixteen 16Kb x 1 devices. Today vendors sell all sorts of wide (x 4, x 8, etc.) configurations. If the ECC code is stored in the same chip as the data, a multiple-bit soft error may prevent the error correction... even detection. Proper design means separating data and codes into different parts. The good news is that cosmic rays usually only toggle a single bit. (But there are exceptions. The 1991 Oh-My-God particle had an energy of some 50 J. That's not far from the energy of a bowling ball falling a meter, yet concentrated in perhaps a single proton.)

Another problem: soft errors can accumulate in memory. A proton zaps location 0x1000. The program reads that location and the ECC hardware sends the corrected value to the CPU. But 0x1000 still has an incorrect value stored. With enough bad luck another cosmic ray may strike again; now the location has two errors, exceeding the hardware correction capability. The system crashes and Sixty Minutes is knocking at your door.

Complement ECC hardware with "scrubbing" code that occasionally reads and rewrites every location in RAM. ECC will clean up the data as it goes to the processor; the rewrite fixes the bad location in memory. A minimally-intrusive background task can suck a few cycles now and then to perform the scrub. Reentrancy is an issue.

Some systems invoke a DMA controller every few hours to scrub RAM.
Though DMA sounds like the perfect solution it usually runs independent of the program's operation and may corrupt any non-reentrant activity going on in parallel. Unless you have an exquisite sense of how the code updates every variable, be wary of DMA in this application.

ECC is expensive. Are there any software-only solutions? Sometimes developers maintain multiple copies of critical variables, encapsulating access to them through a driver that checks to ensure all are identical. That leaves the stack unprotected. The heap might be vulnerable as well, since a corrupt entry in the allocation table (invisible to the C programmer) could take out every malloc() and free(). One could preallocate every block on boot, though then the heap offers little of value over fixed arrays.

Such copies may use as much extra memory as does ECC. There may be some value in protecting critical, slowly-changing data, but my observation is that developers typically value ease of implementation over doing a real failure analysis. |
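The multiple-copy approach mentioned above might look something like the following C sketch. It goes a step beyond the check-that-all-are-identical driver in the text: it keeps three copies and lets two agreeing copies outvote a corrupted third. That voting step, the `tmr_u32` type, and the function names are assumptions made for illustration. A real implementation would also need to guard these accesses against interrupts and would ideally place the copies in physically separate memory.

```c
#include <stdbool.h>
#include <stdint.h>

/* Three copies of one critical 32-bit value (hypothetical type). */
typedef struct {
    uint32_t copy[3];
} tmr_u32;

/* Write the same value to all three copies. */
void tmr_write(tmr_u32 *v, uint32_t value)
{
    v->copy[0] = value;
    v->copy[1] = value;
    v->copy[2] = value;
}

/* Read with a two-out-of-three vote.
   Returns true and stores the agreed value in *out when at least two
   copies match, rewriting all copies to repair an odd one out.
   Returns false, leaving *out untouched, when all three differ. */
bool tmr_read(tmr_u32 *v, uint32_t *out)
{
    uint32_t a = v->copy[0];
    uint32_t b = v->copy[1];
    uint32_t c = v->copy[2];
    uint32_t good;

    if (a == b || a == c) {
        good = a;
    } else if (b == c) {
        good = b;
    } else {
        return false;   /* no majority: caller should log and halt */
    }

    tmr_write(v, good); /* scrub: repair any copy that disagreed */
    *out = good;
    return true;
}
```

A false return corresponds to the unrecoverable case: log it and halt or enter a safe mode rather than guess. As noted above, this protects only the variables routed through the driver; the stack and heap bookkeeping remain exposed.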

||||

| Reply to RAM Tests on the Fly | ||||

Paul Carpenter had a reply to the article in the last Muse about RAM tests:

|

||||

| On the Supply Chain Issues | ||||

Charles Manning had some comments about supply chain problems:

|

||||

| Failure of the Week | ||||

From Donavon Wright:

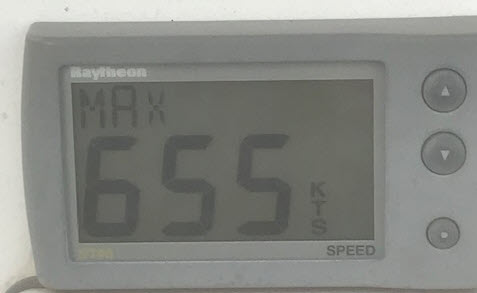

Here's another. Last week the knotmeter on my sailboat showed this:

This might be a good time to enter the America's Cup race! Have you submitted a Failure of the Week? I'm getting a ton of these and yours was added to the queue. |

||||

| Jobs! | ||||

Let me know if you’re hiring embedded engineers. No recruiters please, and I reserve the right to edit ads to fit the format and intent of this newsletter. Please keep it to 100 words. There is no charge for a job ad. |

||||

| Joke For The Week | ||||

These jokes are archived here.

|

||||

| About The Embedded Muse | ||||

The Embedded Muse is Jack Ganssle's newsletter. Send complaints, comments, and contributions to me at jack@ganssle.com. The Embedded Muse is supported by The Ganssle Group, whose mission is to help embedded folks get better products to market faster. can take now to improve firmware quality and decrease development time. |