Go here to sign up for The Embedded Muse.


|

||||

You may redistribute this newsletter for non-commercial purposes. For commercial use contact jack@ganssle.com. To subscribe or unsubscribe go here or drop Jack an email. |

||||

| Contents | ||||

| Editor's Notes | ||||

|

Over 400 companies and more than 7000 engineers have benefited from my Better Firmware Faster seminar held on-site, at their companies. Want to crank up your productivity and decrease shipped bugs? Spend a day with me learning how to debug your development processes. Attendees have blogged about the seminar, for example, here, here and here.

VDC is currently running their 2019 survey of embedded/IoT engineers. Survey respondents will earn a $30 Amazon.com gift card or donation to Doctors Without Borders upon completion of the 20-40 minute survey. The survey link is here. |

||||

| Quotes and Thoughts | ||||

The major difference between a thing that might go wrong and a thing that cannot possibly go wrong is that when a thing that cannot possibly go wrong goes wrong, it usually turns out to be impossible to get at and repair. Douglas Adams |

||||

| Tools and Tips | ||||

|

Please submit clever ideas or thoughts about tools, techniques and resources you love or hate. Here are the tool reviews submitted in the past.

Dejan Đurđenić sent this link to an article about sub-$0.10 MCUs.

Stephen Bernhoeft was burned by C's modulo operator recently. He had some code that looked like this:

...

Ticks = 0; //Ensure a full 'long press' elapses

//before testing for long key press <--IN ERROR!

...

if(!(Ticks%DTICK_LONG_PRESS)) //OOOPS! This will always succeed.

{

...

(0 % N) is always zero for any value of N other than N==0, where the behavior is undefined. The C11 standard says little about this other than, in section 6.5.5, "If the quotient a/b is representable, the expression (a/b)*b + a%b shall equal a; otherwise, the behavior of both a/b and a%b is undefined." (C99 states the same identity but lacks the "otherwise" clause.) (0 % N) is zero because 0/N is zero with no remainder, since N*0 == 0.

Stephen's point, supported by other C programmers consulted on this, is that it's easy to get tricked by the modulo operator if one doesn't think carefully about having zero on the left side. |
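The trap is easy to reproduce. The sketch below shows it alongside one possible guard. The value of DTICK_LONG_PRESS and all three function names are made up for illustration, since the original code isn't shown in full.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical period; the real value is not given in the snippet. */
#define DTICK_LONG_PRESS 100u

/* Same test as the snippet: true whenever ticks is a multiple of the
 * period. Zero is a multiple of everything, so this also fires
 * immediately after ticks has been reset to 0. */
bool long_press_naive(uint32_t ticks)
{
    return (ticks % DTICK_LONG_PRESS) == 0u;
}

/* One possible guard (an assumption, not necessarily Stephen's fix):
 * also require that at least one tick has elapsed. */
bool long_press_guarded(uint32_t ticks)
{
    return (ticks != 0u) && ((ticks % DTICK_LONG_PRESS) == 0u);
}

/* Small self-check illustrating the difference. */
void long_press_selfcheck(void)
{
    assert(long_press_naive(0u));                 /* the bug: fires at reset */
    assert(!long_press_guarded(0u));              /* guarded: keeps waiting  */
    assert(long_press_guarded(DTICK_LONG_PRESS)); /* fires one period later  */
}
```

Whether a guard like this, or a counter that simply starts at 1, fits better depends on the rest of the key-scanning code, which the snippet doesn't show.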

||||

| Freebies and Discounts | ||||

September's giveaway is a DS213 miniature oscilloscope that I reviewed in Muse 374. About the size of an iPhone, it has two analog and two digital channels, plus an AWG. At 15 MHz (advertised) it's not a high-performance instrument, but given the form factor, it's really cool.

Enter via this link. |

||||

| My Top Ten Reasons for Project Failures | ||||

If David Letterman could have a Top Ten list, so can I. But instead of making fun of any of a vast number of Congressional scandals, I'll hit embedded systems. What are, in my opinion, the top ten reasons embedded projects get into trouble?

10 - Not Enough Resources

Firmware is the most expensive thing in the universe. Ex-Lockheed CEO Norman Augustine, in his wonderful book "Augustine's Laws" wrote about how defense contractors were in a bind in the late 70s. They found themselves unable to add anything to fighter aircraft, because increasing a plane's weight means decreasing performance. Business requirements - more profits - meant that regardless of the physics of flight they had to add features. Desperate for something hideously expensive yet weightless, they found… firmware!

Today firmware in high performance aircraft consumes about half the total price of the plane. A success by any standard, except perhaps for the taxpayers. Indeed, retired USAF Colonel Everest Riccioni has suggested firmware-stuffed fighter airplanes and smart missiles are now so expensive that the US is unilaterally disarming.

Face it: firmware is expensive, and getting more costly as it grows. Couple that growth with the exponential relationship between schedule and size and it's pretty clear that within a few years firmware development will pretty-nearly consume the entire world's GDP.

Yet software people can't get reasonable sums of money for anything but bare necessities. While our EE pals routinely cadge $50k logic analyzers we're unable to justify a few grand for a compiler. How many of you, dear readers, have been successful getting approval for quality tools like static analyzers?

9 - Starting Coding Too Soon

Agile methods have shaken up the world of software development. Most value code over documents, which all too often is incorrectly seen as justification for typing "void main()" much too early. Especially in the embedded world we don't dare shortchange careful analysis.
Early design decisions are often not malleable. Select an underpowered CPU or build hardware with too little memory and the project is immediately headed towards disaster. Poorly structured code may never meet real-time requirements. Should the system use an RTOS? Maybe a hierarchical state machine makes the most sense. These sorts of design decisions profoundly influence the project and are tremendously expensive to change late in the project.

Sometimes the boss reinforces this early-coding behavior. When he sees us already testing code in the first week of the project he sees progress. Or, what looks like progress. "Wow - did you see that the team already has the thing working? We'll be shipping six months ahead of schedule!"

8 - The use of C

C is the perfect language for developers who love to spend a lot of time debugging.

C is no worse than most languages. But there are a few - Ada, SPARK, and (reputedly, though I have not seen reliable data) Eiffel - that intrinsically lead to better code. Yet C and C++ dominate this industry. Let's see: they produce buggier results than some alternatives. And cost more to boot. I guess we keep using C/C++ because, uh… debugging is so much fun?

I do like coding in C. It's a powerful language that's relatively easy to master. The disciplined use of C, coupled with the right tools, can lead to greatly reduced error rates. Problem is, we tend to furiously type some code into the editor and rush to start debugging. That's impossible in Ada et al, which force one to think very carefully about each line of code. It's important to augment C with resources similar to those used by SPARK and Ada developers, like static analyzers, Lint, and complexity checkers, as well as the routine use of code inspections.

So you can change #8 to the misuse of C. But the original title is more fun.

7 - Bad science

The second type is when one stumbles onto something that's truly new, or something not widely known.
Penzias and Wilson ran into this in 1965 as they tried and tried to eliminate the puzzling noise in a receiver… only to eventually find that they had discovered the cosmic microwave background radiation.

I remember working on a system in the early 70s that used carbon tet as a solvent. The EPA clamped down on the use of that chemical, so we changed to perchloroethylene. Suddenly, nothing worked, and I spent weeks trying to figure out what was going on. The customer, a chemist, arrived to check on progress and I confessed to having no good data. He blithely said, "oh, perchloroethylene is always contaminated with alcohol, which is completely opaque at these wavelengths." Had I known the science, much time would have been saved.

It's pretty hard to stick to a schedule when uncovering fundamental physics. But most of the time the science is known; we simply have to understand and apply that knowledge.

6 - Poorly defined process

While there is certainly an art to developing embedded systems, that doesn't mean there's no discipline. A painter routinely cleans his brushes; a musician keeps her piano tuned. Many novelists craft a fixed number of pages per day.

There's plenty of debate about process today, but no one advocates the lack of one. CMM, XP, SCRUM, PSP and dozens of others each claim to be The One True Way for certain classes of products. Pick one. Or pick three and combine best practices from each. But use a disciplined approach that meets the needs of your company and situation.

There are indisputable facts we know, but all too often ignore. Inspections and design reviews are much cheaper and more effective than relying on testing alone. Unmanaged complexity leads to lots of bugs. Small functions are more correct than big ones. There's a large lore of techniques which work. Ignore them at your peril!
5 - Vague requirements

Next to the emphasis on testing, perhaps the greatest contribution the agile movement has made is to highlight the difficulty of eliciting requirements. For any reasonably-sized project it's somewhere between extremely hard to impossible to correctly discern all aspects of a system's features.

But that's no excuse for shortchanging the process of developing a reasonably-complete specification. If we don't know what the system is supposed to do, we will not deliver something the customer wants. Yes, it's reasonable to develop incrementally with frequent deliverables so stakeholders can audit the application's functionality, and to continuously hold the schedule to scrutiny. Yes, inevitable changes will occur. But we must start with a pretty clear idea of where we're going.

Requirements do change. We groan and complain, but such evolution is a fact of life in this business. Our goal is to satisfy the customer, so such changes are in fact a good thing. But companies will fail without a reasonable change control procedure. Accepting a modification without evaluating its impact is lousy engineering and worse business. Instead, chant: "Mr. Customer - we love you. Whatever you want is fine! But here's the cost in time and money."

And work hard at pinning down the requirements. In school a 90% is an A. That's often true in life as well. An A in eliciting requirements is a lot closer to a home run than not having a clear idea of what we're building.

4 - Weak managers or team leads

Managers or team leads who don't keep their people on track sabotage projects. Letting the developers ignore standards, or skip using Lint or other static analyzers, is simply unacceptable. No relentless focus on quality? These are all signs the manager isn't managing. Managers must track code size and performance, the schedule versus current status, keep a wary eye on the progress of consultants, and much more.

Management is very hard. It makes coding look easy.
Perturb a system five times the same way and you'll get five identical responses. Perturb a person five times the same way and expect five very different results. Management is every bit as much of an art as is engineering. Most people shy away from confrontation, yet it's a critical tool, hopefully exercised gently, to guide straying people back on course.

3 - Inadequate testing

Considering that a few lines of nested conditionals can yield dozens of possible states it's clear just how difficult it is to create a comprehensive set of tests. Yet without great tests to prove the project's correctness we'll ship something that's rife with teeming bugs.

Embedded systems defy conventional test techniques. How do you build automatic tests for a system which has buttons some human must push and an LCD someone has to watch? A small number of companies use virtualization. Some build test harnesses to simulate I/O. But any rigorous test program is expensive.

Worse, testing is often left to the end of the project, which is probably running late. With management desperate to ship, what gets cut?

Design a proper test program at the project's outset and update it continuously as the program evolves. Test incrementally, constantly and completely.

2 - Writing optimistic code

The inquiry board investigating the 1996 half-billion dollar failure of Ariane 5 recommended (among other findings) that the engineers take into account that software can fail. Developers had an implicit assumption that, unlike hardware which can fail, software, once tested, is perfect.

Programming is a human process subject to human imperfections. The usual tools, which are usually not used, can capture and correct all sorts of unexpected circumstances. These tools include checking pointer values, range checking data passed to functions, using asserts and exception handlers, and checking outputs (I have a collection of amusing pictures of embedded systems displaying insane results, like an outdoor thermometer showing 505 degrees, and a parking meter demanding $8 million in quarters).
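As a rough illustration of those checks in C, here is a sketch for the thermometer case. The ADC width, the scaling, the plausibility limits and the function name are all invented for the example; only the pattern of checks matters.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical plausibility limits for an outdoor thermometer,
 * in tenths of a degree C (-60.0 C to +70.0 C). */
#define TEMP_MIN_DECI_C (-600)
#define TEMP_MAX_DECI_C (700)

/* Hypothetical conversion from raw 12-bit ADC counts to tenths of a
 * degree C. Returns false instead of handing back an insane value. */
bool temp_from_adc(uint16_t raw, int16_t *out_deci_c)
{
    if (out_deci_c == NULL) {   /* check the pointer */
        return false;
    }
    if (raw > 4095u) {          /* range-check the input */
        return false;
    }

    /* Made-up linear scale: 1000 counts corresponds to 0.0 C. */
    int32_t deci_c = (int32_t)raw - 1000;

    /* Check the output before anyone gets to display it. */
    if (deci_c < TEMP_MIN_DECI_C || deci_c > TEMP_MAX_DECI_C) {
        return false;
    }

    /* Internal invariant: the value now fits the narrower type. */
    assert(deci_c >= INT16_MIN && deci_c <= INT16_MAX);
    *out_deci_c = (int16_t)deci_c;
    return true;
}
```

With these made-up numbers a reading of 1250 counts yields 25.0 degrees, while 4095 counts (309.5 degrees) is rejected rather than shown to the user.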

For very good reasons of efficiency C does not check, well, pretty much anything. It's up to us to add the checks that are needed.

My wife once asked why, when dealing with a kid problem, I look at all the possible lousy outcomes of any decision. Engineers are trained in worst case analysis, which sometimes spills over to personal issues. What can go wrong? How will we deal with that problem?

1 - Unrealistic schedules

Scheduling is hard. Worse, it's a process that will always be inherently full of conflict. The boss wants the project in half the estimated time for what may be very good reasons, like shipping the product to stave off bankruptcy. Or maybe just to save money; given that firmware is so expensive it's not surprising that some want to chop the effort in half.

Capricious schedules are unrealistic. All too often, though, the supposedly accurate ones we prepare are equally unrealistic. Unless we create them carefully, spending the time required to get accurate numbers, we're doing the company a disservice.

Yet there are some good reasons for seemingly-arbitrary schedules. That "show" deadline may actually have some solid business justification. A well-constructed schedule shows time required for each feature. Negotiate with the boss to subset the feature list to meet the - possibly very important - deadline.

Conclusion

Those are my top ten. What are yours? |

||||

| On Function Headers | ||||

A reader recently wrote asking if it's OK to skip adding comment headers to functions that are "obvious." He gave the example:

int32_t Integer_Sum(int16_t a, int16_t b);

I struggled to find any ambiguity in this definition and failed. It's pretty darn clear what the thing should do, and what the goesintos and goesoutas are. C's usual confusion over the size of an int has been resolved using the fixed-width typedefs from C99's <stdint.h>. Versioning software tracks modifications.

His interesting point was that adding a comment header block simply results in more work - for instance, in maintaining those comments - while adding no useful additional information. Why add comments to this very clear function definition?

First, this is a rather contrived example. Few functions in the real world do so little or are so ineffably clear. I'd guess that such cases represent no more than a few percent of all of the code we write.

Second, never underestimate the power of developing and nurturing good habits. We always stop at red lights, even at 3 in the morning on lonely country roads. We always use our turn signals. Making exceptions starts the descent into poor habits. Pretty soon one routinely careens across 4 lanes of traffic without signaling. I'm convinced most lousy drivers are blissfully unaware of their transgressions, which result from the accumulation of years of bad habits. As professional developers we, too, must consciously reject shortcuts that will inevitably lead to similar sins.

Third, what's obvious to you may be completely opaque to another developer.

Fourth, without a comment header block nothing separates this function from the previous and next ones in the module. It's buried and gets lost. Header comments serve as both documentation and textual breaks. It's strange that decades after non-programmers got WYSIWYG word processors we're still shackled to ASCII text. Wouldn't it be nice to use fancy fonts, bold type, or colors to denote distinct sections of code?

Finally, who decides which functions need comments and which don't? Skip them, and you'll engage in time-consuming documentation debates with your colleagues.

Though lots of very clear code exists, truly self-documenting software is about as common as unicorns.
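For what it's worth, here is one way a header for the reader's function might look. The comment layout is just one common convention, and the function body and the self-test are assumptions, since only the prototype was given.

```c
#include <assert.h>
#include <stdint.h>

/**
 * Integer_Sum - add two signed 16-bit integers.
 *
 * @param a  first addend, any int16_t value
 * @param b  second addend, any int16_t value
 * @return   a + b, widened to 32 bits so the sum cannot overflow
 *           (possible range is -65536 to 65534)
 */
int32_t Integer_Sum(int16_t a, int16_t b)
{
    /* Widen before adding: on a target with a 16-bit int, a + b
     * computed in int could overflow. */
    return (int32_t)a + (int32_t)b;
}

/* Hypothetical self-test covering both extremes of the range. */
void Integer_Sum_SelfTest(void)
{
    assert(Integer_Sum(INT16_MAX, INT16_MAX) == 65534);
    assert(Integer_Sum(INT16_MIN, INT16_MIN) == -65536);
}
```

Even for a function this small, the header ends up recording something the prototype alone doesn't say: why the return type is wider than the arguments.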

|

||||

| This Week's Cool Product | ||||

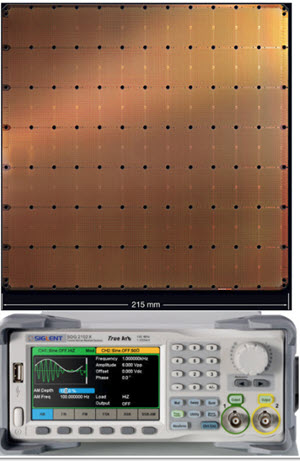

OK, this is not a product, at least not something you can buy. Cerebras has produced a monster chip for AI applications. The 46,225 mm² die (!) consumes 15 kW (!!!). I presume that's not a misprint as there's an extensive water-cooling system. With 16 nm geometry the chip has 1.2 trillion transistors yielding 400,000 cores. The part occupies an entire wafer.

Apparently the device has an extra 1% cores to deal with defects. A single percent doesn't sound like much, but that's 4000 extra cores. The site doesn't mention anything about foundry yields.

A price has not been announced. One of Xilinx's FPGAs is a whopping $88,766 each. I suspect the Cerebras part will make that look like pocket change.

The wafer is an astonishing 215 mm on each side. I added a to-scale AWG to show just how enormous this monster is.

Note: This section is about something I personally find cool, interesting or important and want to pass along to readers. It is not influenced by vendors. |

||||

| Jobs! | ||||

Let me know if you’re hiring embedded engineers. No recruiters please, and I reserve the right to edit ads to fit the format and intent of this newsletter. Please keep it to 100 words. There is no charge for a job ad. |

||||

| Joke For The Week | ||||

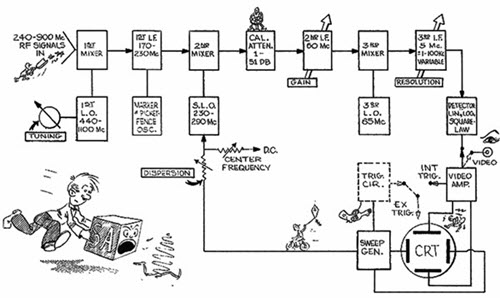

Note: These jokes are archived here.

David Wright sent this link to the Tektronix Museum's collection of cartoons that the company's draftsmen added to schematics and other printed materials. They're a lot of fun!

|

||||

| About The Embedded Muse | ||||

The Embedded Muse is Jack Ganssle's newsletter. Send complaints, comments, and contributions to me at jack@ganssle.com. The Embedded Muse is supported by The Ganssle Group, whose mission is to help embedded folks get better products to market faster. |

). That's perfectly fine, but the upshot was for this non-Chinese speaker I had to figure out what was going on from the code, which was extremely time-consuming. The experience drove home the importance of comments, and even more so, descriptive function headers. What does this function do? What are the arguments?
