|

||||

You may redistribute this newsletter for non-commercial purposes. For commercial use contact jack@ganssle.com. To subscribe or unsubscribe go here or drop Jack an email. |

||||


| Editor's Notes | ||||

|

Over 400 companies and more than 7000 engineers have benefited from my Better Firmware Faster seminar held on-site, at their companies. Want to crank up your productivity and decrease shipped bugs? Spend a day with me learning how to debug your development processes. Attendees have blogged about the seminar, for example, here, here and here.

Jack's latest blog: Apollo 11 and Navigation.

VDC is currently running their 2019 survey of embedded/IoT engineers. Survey respondents will earn a $30 Amazon.com gift card or donation to Doctors Without Borders upon completion of the 20-40 minute survey. The survey link is here. |

||||

| Quotes and Thoughts | ||||

In a sense, creating software requirements is like hiking in a gradually lifting fog. At first only the surroundings within a few feet of the path are visible, but as the fog lifts, more and more of the terrain can be seen. Capers Jones |

||||

| Tools and Tips | ||||

|

Please submit clever ideas or thoughts about tools, techniques and resources you love or hate. Here are the tool reviews submitted in the past.

John Gustafson has proposed an alternative to the IEEE 754 floating point standard, which is briefly discussed in this article. He claims it offers solutions to many of 754's problems, and, intriguingly, uses a variable-length mantissa. Interesting stuff.

Dimitris Tassopoulos sent this:

I dug around on the Renode site a little. This sounds somewhat like Simics, a fascinating tool that lets developers do vast amounts of testing with no hardware. If Renode does what is claimed, it could be a game-changer. It seems to run binaries targeted for an OS-less MCU on a PC, Mac or Linux box. So if you're developing code and the hardware is late (it's always late) conceivably you could start testing early. And it's free.

The Embedded Systems Conference will be August 27-29 in Santa Clara. I'll be giving one talk, and Jacob Beningo and I will do a "fireside chat" about where this industry has gone over the last 30 years (or more). |

||||

| Freebies and Discounts | ||||

Uwe Schächterle won the Liteplacer pick and place machine in last month's giveaway. This month's giveaway is a slightly-used (I tested it for a review) 300 MHz 4-channel (including 16 digital channels) SDS 2304X scope from Siglent that retails for about $2500.

Enter via this link. |

||||

| The SquarerUpper | ||||

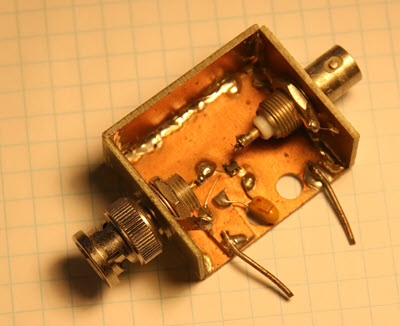

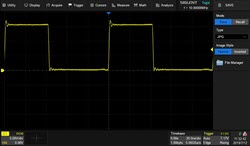

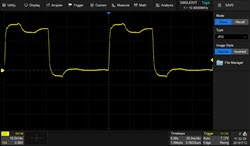

In Muse 377 I referred to my SquarerUpper tool, which improves the rise time of pulse/arbitrary-waveform generators. Many readers asked about it.

Some oscilloscopes today include waveform generators, a nice feature. But rise times are slow. Most I've measured are in the 15 to 20 ns range, which is akin to what we expected from 74-series logic around 1980. Yeah, sure, I understand there's probably a DAC doing the heavy lifting and the bandwidth of those is limited, but 20 ns is pretty much the time it takes for a modern CPU to drink a beer, settle into the barcalounger, and utter that inevitable belch. My nice AWG sports an 8.4 ns rise time, still too slow for much of my work.

The SquarerUpper is just a 74AUC08 AND gate. It's mounted in a "cage" of copper-clad PCB material with BNCs for the input and output, with both high and low-frequency bypass capacitors. That's it. Two leads go to a 2.7 volt lab power supply.

SquarerUpper. The 74AUC08 gate is the tiny black thing in the center. The high-frequency bypass cap is hidden under the input BNC (on the left). The two leads at the bottom are for the power supply.

I generally use coax to connect the output of this to the device under test; if that's a scope, set its input impedance to 50 ohms to match the cable. Otherwise the reflections will distort the signal.

On the left, the scope's input is 50 Ω; on the right it's 1 MΩ. |
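To put a rough number on why the termination matters, here is a minimal sketch using the standard voltage reflection coefficient, gamma = (Z_load - Z0) / (Z_load + Z0). It assumes an ideal 50 ohm cable and treats the scope input as a pure resistance, ignoring its input capacitance; the function name is mine, for illustration only.

```python
def reflection_coefficient(z_load_ohms: float, z0_ohms: float = 50.0) -> float:
    """Fraction of the incident voltage reflected at the end of a line.

    z0_ohms is the cable's characteristic impedance (50 ohms assumed here);
    z_load_ohms is the purely resistive load terminating it.
    """
    return (z_load_ohms - z0_ohms) / (z_load_ohms + z0_ohms)


if __name__ == "__main__":
    # The two scope input settings compared in the text.
    for label, z_load in (("50 ohm input", 50.0), ("1 Mohm input", 1.0e6)):
        gamma = reflection_coefficient(z_load)
        print(f"{label}: gamma = {gamma:.4f}")
```

With a matched 50 ohm input nothing is reflected. With a 1 MΩ input nearly the entire edge bounces back down the cable, which is consistent with the distortion described above.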

||||

| On Testing | ||||

How much testing do we need? If completely undisciplined software engineering techniques were used to design commercial aircraft avionics (e.g., giving a million monkeys a text editor), then you'd hope there would be an army of testers for each developer.

Or consider security. Test is probably the worst way to ensure a system is bullet-proof. Important? Sure. But no system will be secure unless that attribute is designed in.

The quality revolution taught us that you cannot test quality into a product. In fact, tests usually exercise only half the code! (With test coverage that number can be greatly improved.)

Capers Jones and Olivier Bonsignour, in The Economics of Software Quality (2012), show that many kinds of testing are needed for reliable products. On large projects up to 16 different forms of testing are sometimes used.

Jones and Bonsignour provide some useful data (Jones probably has more empirical data about software engineering than anyone). It is all given in function points, as Jones especially is known for espousing them over lines of code (LOC). But function points are a metric few practitioners use or understand. We do know that in C, one function point represents very roughly 120 LOC, so despite the imprecision of that metric, I've translated their function point results to LOC.

They have found that, on average, companies create 55 test cases per function point. That is, companies typically create almost one test per two lines of C. The average test case takes 35 minutes to write and 15 to run. Another 84 minutes are consumed fixing bugs and re-running the tests. Most tests won't find problems; that 84 minutes is the average including those tests that run successfully.

The authors emphasize that the data has a high standard deviation so we should be cautious in doing much math, but a little is instructive. One test for every two lines of code consumes 35 + 15 + 84 = 134 minutes, or 67 minutes per line. Let's call it an hour's work per line of code. That's a hard-to-believe number but, according to the authors, represents companies doing extensive, multi-layered testing.

Most data shows the average developer writes 200 to 300 LOC of production code per month. No one believes this as we're all superprogrammers. But you may be surprised! I see a ton of people working on very complex products that no one completely understands, and they may squeak out just a little code each month. Or, they're consumed with bug fixes, which effectively generate no new code at all. Others crank out massive amounts of code in a short time but then all but stop, spending months in maintenance, support, requirements analysis for new products, design, or any of a number of non-coding activities.

One hour of test per LOC means two testers (160 hours/month each) are needed for the developer creating about 300 LOC/month.

One test per two LOC is another number that seems unlikely, but a little math shows it isn't an outrageous figure. One version of the Linux kernel I've analyzed averages 17.6 statements per function, with an average cyclomatic complexity of 4.7. Since complexity is the minimum number of tests needed, at least one test is needed per four lines of code. Maybe a lot more; complexity doesn't give an upper bound. So one per two lines of code could be right, and is probably not off by very much.

Jones' and Bonsignour's data is skewed towards large companies on large projects. Smaller efforts may see different results. They do note that judicious use of static analysis and code inspections greatly changes the results, since these two techniques, used together, and used effectively, can eliminate 97% of all defects pre-test. But they admit that few of their clients exercise much discipline with the methods. If 97% of the defects were simply not there, that 84 minutes of rework drops to about 2.5 minutes and well over half the testing effort goes away.

(Here's another way to play with the numbers. The average embedded project removes 95% of all defects before shipping. Using static analysis and inspections effectively means one could completely skip testing and still have higher quality than the average organization! I don't advocate this, of course, since we should aspire to extremely high quality levels. But it does make you think. And, though the authors say that static analysis and inspections can eliminate 97% of defects, that's a higher number than I have seen.)

Test is no panacea. But it's a critical part of generating good code. It's best to view quality as a series of filters: each activity removes some percentage of the defects. Inspections, compiler warnings, static analysis, lint, test and all of the other steps we use are bug filters.

Jones' and Bonsignour's results are fascinating, but like so much empirical software data one has to be wary of assigning too much weight to any single result. It's best to think of it like an impressionistic painting that gives a suggestion of the underlying reality, rather than as hard science. Still, their metrics give us some data to work from, and data is sorely needed in this industry. |

||||

| Inspections or Static Analysis? | ||||

Dan Swiger writes:

I have a couple of reactions:

First, let's define static analyzers, of which Coverity is one. Lots of people mix these up with Lint and the like. Lint does extensive syntax checking; static analyzers do not. They look for problems that can arise at runtime, like the ones noted by Dan.

Code inspections, done correctly, have two goals. The first is to find design issues: does this code do what we want it to? The second is to, well, essentially scare the developer into working hard to avoid errors in the first place.

The quality movement that revolutionized manufacturing taught us that retrofitting fixes into a product is a lousy idea. Much better is to consistently build high-quality stuff from the git-go. That saves money and delights the customer. Analogously, in the software world it has been shown that a focus on fixing bugs will not give you quality code. Quality needs to be designed in from the outset.

Static analyzers excel at finding potential runtime errors. They're an excellent addition to the quiver of quality tools. But they won't find design errors, like "will this code do what we want it to do?" Or, "what happens if this exceptional condition occurs?"

"Scare the developer into working hard to avoid errors in the first place" means that any normal person will try harder to create high-quality work products if he knows he'll be accountable to his peers. More time spent in implementation, carefully checking for problems, means better code, fewer bugs, less debugging, and ultimately faster delivery.

Isn't that what we want? |

||||

| A Feature I'd Like in a Scope | ||||

Modern oscilloscopes are remarkable instruments, simply packed with features we couldn't have imagined in the pre-digital days. Today some are more like PCs with high-speed data acquisition front-ends.

I was running an experiment recently, measuring the rotation speed of a device over time. The rotating element had a tab that broke the beam of an optical switch; a scope monitored that signal and displayed its period. From that it was easy to compute RPM. I was using Siglent's SDS5034X, which can plot histograms and trend lines of signals. That turns the scope into a data recorder, which is a very cool feature:

This shows the scope graphing a varying-frequency oscillator.

But then it occurred to me that this scope, and many other digital versions, can compute functions like integrals and square roots of signals. Wouldn't it be nice if one could have the instrument compute a custom function? In the case I mentioned, it would be nice to display RPM, not period. If one could enter a polynomial all sorts of custom calculations would be possible.

No matter how many features we're given, there's always one more! I'm reminded of someone who asked Rockefeller how much money was enough. His answer: "Just a little more!"

Very late update: Steve Barfield of Siglent tells me they will be adding this feature to some of their scopes, probably in September. |
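Until a scope does this natively, the conversion is easy to run on exported period readings. This is a minimal sketch, assuming one pulse per revolution (the single tab described above) and periods in seconds. The function names and the sample values are made up for illustration and are not part of any Siglent interface.

```python
from typing import Sequence


def rpm_from_period(period_s: float, pulses_per_rev: int = 1) -> float:
    """Convert the period between optical-switch pulses to RPM."""
    if period_s <= 0 or pulses_per_rev <= 0:
        raise ValueError("period and pulses_per_rev must be positive")
    return 60.0 / (period_s * pulses_per_rev)


def apply_polynomial(coeffs: Sequence[float], x: float) -> float:
    """Evaluate a user-entered polynomial with Horner's method.

    coeffs are ordered from the highest power down to the constant term,
    so [2, 0, 1] means 2*x**2 + 1.
    """
    result = 0.0
    for c in coeffs:
        result = result * x + c
    return result


if __name__ == "__main__":
    # Hypothetical period readings, in seconds, as a trend plot might log them.
    for period in (0.040, 0.020, 0.010):
        print(f"period {period * 1000:.0f} ms -> {rpm_from_period(period):.0f} RPM")
```

Note that RPM is a reciprocal of the period, not a polynomial in it, so a truly general custom-function feature would need division as well as polynomial terms.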

||||

| Jobs! | ||||

Let me know if you’re hiring embedded engineers. No recruiters please, and I reserve the right to edit ads to fit the format and intent of this newsletter. Please keep it to 100 words. There is no charge for a job ad. |

||||

| Joke For The Week | ||||

Note: These jokes are archived here.

This is from Bruce Schneier's July 15 CRYPTO-GRAM. I presume it's true and not a joke, except, well, you have to be kidding me:

If you need to reset the software in your GE smart light bulb -- firmware version 2.8 or later -- just follow these easy instructions: Start with your bulb off for at least 5 seconds.

The bulb will flash on and off 3 times if it has been successfully reset. |

||||

| About The Embedded Muse | ||||

The Embedded Muse is Jack Ganssle's newsletter. Send complaints, comments, and contributions to me at jack@ganssle.com. The Embedded Muse is supported by The Ganssle Group, whose mission is to help embedded folks get better products to market faster. |