|

||||

You may redistribute this newsletter for non-commercial purposes. For commercial use contact jack@ganssle.com. To subscribe or unsubscribe go here or drop Jack an email. |

||||

| Contents | ||||

| Editor's Notes | ||||

|

Engineering: what a remarkable word! Engineering is about building a better world. In my lifetime we've gone from rotary dial phones where calls were expensive to international calls at the drop of a dime. From hulking mainframes accessible by very few, to multi-GHz processors in every pocket. From being Earth-bound to probes rocketing past Pluto. From Pluto being a planet, to a diminished planetoid! But engineering changes at an accelerating pace. The boss wants more bug-free code, today. Tomorrow he'll want even more in the same time-frame. Are you on top of the best ways to deliver it? Every time I do a public version of my Better Firmware Faster class some percentage of registered attendees cancels at the last minute, citing a project in peril that needs their attention now. I'm reminded of this cartoon:

It is important to fight today's engineering battle. But the war will be lost if you don't find better ways to win. This is what my one-day Better Firmware Faster seminar is all about: giving your team the tools they need to operate at a measurably world-class level, producing code with far fewer bugs in less time. It's fast-paced, fun, and uniquely covers the issues faced by embedded developers. Public Seminars: I'll be presenting a public version of my Better Firmware Faster seminar outside of Boston on October 22, and Seattle October 29. There's more info here. Or email me personally. On-site Seminars: Have a dozen or more engineers? Bring this seminar to your facility. More info here. Latest blog: What I learned about consulting. |

||||

| Quotes and Thoughts | ||||

"I like to think of system engineering as being fundamentally concerned with minimizing, in a complex artifact, unintended interactions between elements desired to be separate." - Michael Griffin |

||||

| Tools and Tips | ||||

|

Please submit clever ideas or thoughts about tools, techniques and resources you love or hate. Here are the tool reviews submitted in the past. Heath Raftery responded to some comments in the last Muse about variables vs. #defines:

Dave Hansen added:

A lot of readers responded to the article by Bob Snyder about frustration with a device going into some crazy mode, and the difficulty of returning to a known state. Some suggested that instead of returning to the factory-fresh configuration, it might be better to return to that which you, perhaps laboriously, had set up for your needs. Others pointed out that many oscilloscopes have a "get out of jail free" button which looks at the signals and picks modes it thinks make sense. But far more common were stories of dealing with elderly relatives. Well, in the USA the government claims 60 is "elderly," so really we're talking seniors in their 80s and 90s. My folks are both over 90 and require a fair amount of tech support. Once, when my dad's computer lost Internet access, yet again, I asked if he had changed any cables. He said "no," but my mom, standing behind him, vigorously nodded "yes." |

||||

| Freebies and Discounts | ||||

David Cuddihy won the power supply in last month's giveaway. This month we're giving away an Owon oscilloscope. I hope to review it in the next issue of the Muse.

The contest closes at the end of October, 2018. Enter via this link. |

||||

| Fixed-Point Math | ||||

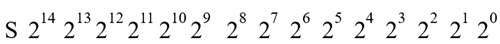

In firmware we have two fundamental kinds of math: integer and floating point. Integer math is very fast, but it can't express fractional numbers, nor can it handle those with large dynamic ranges. On a 32-bit machine an integer can't hold a number larger than a few billion.

Floating point offers a huge dynamic range. Single-precision (32-bit) IEEE-754 numbers can be as large as about 3.4 x 10^38. And, of course, fractions are no problem. One downside is that handling floats takes quite a bit of computation, which can be costly in terms of memory usage and speed. Happily, more MCUs today, especially in the Cortex-M world, come with floating-point hardware.

But there is an alternative. Fixed-point math offers fractions, greater dynamic range than integers, and much faster operations than software-implemented floating point. Downsides: neither the range nor the precision is generally as good as with floats. Fixed point is particularly common in the DSP world, where speed is the overriding concern. Today, many DSP applications are migrating to MCUs, as the latter sometimes offer DSP-like instructions and clock rates have climbed to where an MCU can give some DSPs a run for their money.

A 16-bit signed integer looks like this:

Do you see the binary point? It's there, but it is all the way to the right where it can't be seen or used. In fixed point we shift the binary point to the left. For instance, here's an unsigned fixed-point representation of a number where the binary point is between the 2^4 and 2^3 bit positions:

(Does this look arbitrary? It is! The epistemology of numbers is all about what choice we make to represent them. IEEE-754, too, is about as arbitrary as it gets, but forms a useful way of expressing numbers.)

What does this mean? Let's suppose the number stored is 10110100001000 in binary. Printed in decimal as an integer that's 11528. But in fixed point, with the binary point as shown, it's 720.5. Since the binary point is at the 2^4 position, 11528 x 2^-4 = 720.5.

In fixed point, you can place the binary point anywhere you'd like. The tradeoff is how large a number you want to express (move the binary point to the right and you can express larger numbers) vs. how much of the fractional part you want (move it to the left for a more precise fraction).

The magic of fixed point is that the math is pretty painless and fast. If two numbers have the binary point in the same position, addition and subtraction are just integer addition and subtraction. Multiplication and division are similar, with the addition of some shifting and range checking. Plenty of canned code exists so I won't dig into that here, but here are some references:
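To make the arithmetic above concrete, here is a minimal C sketch. It is not taken from any of the canned libraries. The names `ufix16_t`, `FRAC_BITS`, `ufix_to_double`, `ufix_add` and `ufix_mul` are hypothetical, and it assumes an unsigned 16-bit value with four fractional bits, matching the 720.5 example. Range checking is done with `assert` for brevity; production code would more likely saturate or report an error.

```c
#include <assert.h>
#include <stdint.h>

/* Assumption: 4 fractional bits, as in the 11528 -> 720.5 example. */
#define FRAC_BITS 4u

typedef uint16_t ufix16_t;   /* hypothetical name for the raw fixed-point value */

/* Interpret a raw value: divide by 2^FRAC_BITS (for display or testing only). */
static double ufix_to_double(ufix16_t raw)
{
    return (double)raw / (double)(1u << FRAC_BITS);
}

/* Same binary point in both operands, so this is plain integer addition. */
static ufix16_t ufix_add(ufix16_t a, ufix16_t b)
{
    uint32_t sum = (uint32_t)a + (uint32_t)b;
    assert(sum <= UINT16_MAX);          /* range check */
    return (ufix16_t)sum;
}

/* The raw product carries 2*FRAC_BITS fractional bits; shift right by
   FRAC_BITS to put the binary point back where it belongs. */
static ufix16_t ufix_mul(ufix16_t a, ufix16_t b)
{
    uint32_t product = (uint32_t)a * (uint32_t)b;
    product >>= FRAC_BITS;
    assert(product <= UINT16_MAX);      /* range check */
    return (ufix16_t)product;
}
```

With these definitions, `ufix_to_double(11528)` gives 720.5, and multiplying 1.5 (raw 24) by 2.5 (raw 40) yields raw 60, which is 3.75.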

You'll often see "Q" notation used with fixed-point numbers. This indicates the number of fractional bits. For instance:
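As one common case (an assumption here, since the split is a free choice), "Q15" describes a signed 16-bit value with 15 fractional bits, covering -1.0 to just under +1.0. The C sketch below shows conversion and multiplication in that format. The type and function names are hypothetical; treat it as an illustration rather than any particular library's API.

```c
#include <stdint.h>

typedef int16_t q15_t;   /* hypothetical name: 1 sign bit, 15 fractional bits */

/* Convert a real number to Q15, rounding to nearest and saturating at the
   ends of the representable range. */
static q15_t q15_from_double(double x)
{
    double scaled = x * 32768.0;                 /* 2^15 */
    if (scaled >= 32767.0)  return INT16_MAX;
    if (scaled <= -32768.0) return INT16_MIN;
    return (q15_t)(scaled >= 0.0 ? scaled + 0.5 : scaled - 0.5);
}

/* Convert Q15 back to a real number (for display or testing only). */
static double q15_to_double(q15_t q)
{
    return (double)q / 32768.0;
}

/* Q15 x Q15 gives a Q30 product in 32 bits; dividing by 2^15 returns to Q15.
   Division truncates toward zero. -1.0 x -1.0 would overflow, so saturate. */
static q15_t q15_mul(q15_t a, q15_t b)
{
    int32_t product = ((int32_t)a * (int32_t)b) / 32768;
    if (product > INT16_MAX) return INT16_MAX;
    return (q15_t)product;
}
```

Here 0.5 is stored as 16384, and `q15_mul(16384, 16384)` returns 8192, which is 0.25.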

GCC, via an extension, does support fixed-point math. Here's a practical guide to using it: So, here are a couple of important points:

|

||||

| The Cost of Tools | ||||

"Why are you pounding that nail with a brick?" "Hammers cost too much." Silly, right? What about this: "We're looking for a cheap (IDE/debugger/static analyzer/etc.); can you help?" Equally silly, in my opinion, yet I get many such requests. Why is the only adjective describing tools "cheap?" What about "reliable?" Or "proven"? I can think of a hundred critical technical qualifiers, yet all pale, it seems, compared to "cheap."

The embedded tools business is tiny. Most tool vendors are tiny. Few report sales. IAR, a major IDE vendor, does - they had $44m in net sales last year. A few are bigger but I imagine one could count the number of $100m vendors on one hand.

When I was in the in-circuit emulator business, we found that bosses objected to spending thousands on debugging tools. "Just don't make any mistakes!" they'd rage when confronted with an $8k purchase request. "Think of the money we'll save!"

But the numbers are stark: C developers average a 5 to 10% error rate, after the compiler finds syntax errors. Even a smallish project can reasonably expect to deal with gobs of bugs. A tool that saves even a few minutes on each pays for itself many times over. Debugging typically consumes about half the schedule. A tool that shaves a mere 10% from that is priceless. Yet vendors of static analyzers and other tools that automatically find bugs have a hard time getting management to sign checks.

The hardware world is different. Check out this week's cool product (later in this issue). I hardly ever hear: "We need a really cheap 2 GHz scope. Any ideas?" Yet those tools, too, are primarily used to find an engineer's design errors. But you can slap a property tag on a scope; it's harder to do that with a software tool.

We are in a golden age of tools. Software products have incredible amounts of functionality. Scopes, logic analyzers and the like can be had for very reasonable prices. But too many teams are stuck with substandard equipment that saps productivity. |

||||

| Hardware/Firmware Development in the Olden Days | ||||

Steve Evanczuk has a nice article about how firmware engineering has changed over the last 20 years, which complements Max Maxfield's piece about hardware changes over those two decades. My 2018 salary survey revealed that the world-wide average age of embedded developers is 42 years. So, the two decades in those articles span the entire career of the median-aged engineer. A friend and I have been reflecting about this industry recently. As certified old farts we both got started with embedded systems nearly at the dawn of the industry. Neither of us ever used the first commercial microprocessor, the 4004, but we came into engineering with its successor, the 8008. But there was an embedded industry, of a sort, prior to the microprocessor. It wasn't unusual for companies to "embed" a minicomputer into a high-priced product or a research lab, though the word "embedded" wouldn't be used till the 80s. Minis were comparatively cheap in an era of $10m mainframes, and engineers found that adding a computer offered a lot of benefits. Like many companies, we used Data General Nova minis in several products prior to microprocessors. At about $25k (today's dollars) without memory they were a lot cheaper than DEC's wonderful PDP-11. Neotec, the company where Scott and I worked, bought a lot of them. The following ridiculous picture shows us at work with one such instrument containing an embedded Nova. I think this was taken by one of our girlfriends when they both came by at 3:00 one morning with a surprise dinner:

(We're both a lot grayer now!) The device is a spectrophotometer that Neotec made. Only a portion is visible; it was a sizable unit. The Nova is the black box with lights in the upper right; to the left are a pair of tape drives. The white panel contains controls and electronics. The yellow display is a Tektronix 4010 graphics display that was ungodly expensive and pitifully slow. But any sort of graphics was amazing at that time. Development on the Nova meant using either paper tape or the mag tapes shown. Everything was in assembly language and Fortran. To compile or assemble a program those tapes ran forwards and backwards, forward and backwards, it seemed hundreds of times. I wondered why abrasion from the iron on the tape didn't wear out the heads. Much later we got a disk drive, 5 MB on a 14" removable platter (Scott tells me it weighed 150 pounds but my memory is hazy). 5 MB seemed an unlimited ocean of storage. But even after that point, Data General continued distributing tools and such on paper tape in plastic trays.

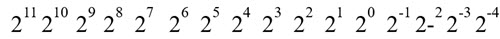

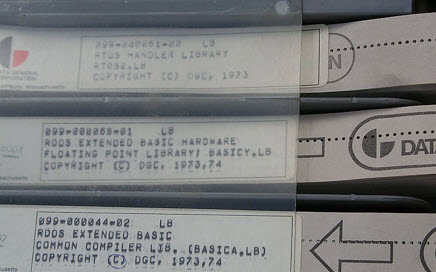

A tape distribution from Data General

When the 8008, the first commercial 8-bit microprocessor, came out, Neotec hired a consultant to write code for another instrument. That was in PL/M, a derivative of PL/1. The consultant created his code on paper tape using an ASR-33 TTY.

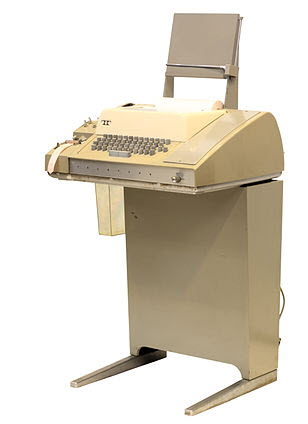

An ASR-33 TTY

For those not familiar with these noisy beasts, while they did speak ASCII there was no lower case. The paper tape reader could manage a blistering 10 characters per second. Once the consultant manually punched his program onto paper tape he connected the TTY over a 110 baud modem to a time-shared mainframe and compiled the code. The result was a binary tape he could load into the micro's memory.

On another front, the company had been building mostly-analog grain analyzers that used an in-house-developed spectrophotometer to sample 800 wavelengths of near-IR light reflected from the sample to compute the grain's protein and oil levels. With the advent of the 8008 it was soon clear that a new version controlled by a micro was needed.

Steve's and Max's articles do a nice job talking about development 20 years ago. Four-plus decades ago things were quite different. I'll describe the process we used, which mirrored what many in industry were doing. The development system was an Intel Intellec 8, which was priced at about $15k in today's dollars.

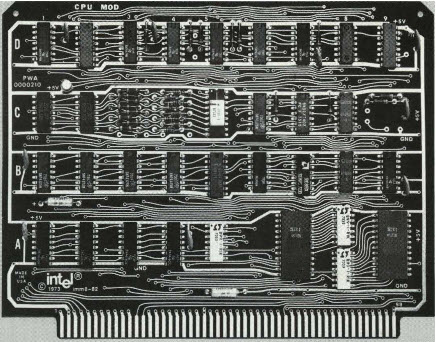

An Intellec 8

Why was there a key on the thing? I guess so no one could turn it off during a days-long build. The machine had a motherboard with 16 100-pin sockets for 8" x 6.28" boards. The CPU board looked like this:

CPU board

Note that it was only a two-layer board. That was pretty much universal in those days, and it wasn't unusual to find some wires added where the PCB designers couldn't get a track in place. Also note the lack of power and ground planes. With a 12.5 us cycle time speeds were not a problem. The 8008 is the 18-pin shiny chip near the center.

(All PCBs were laid out by hand on sheets of mylar, usually at twice actual size. We bought patterns of pads for ICs that were glued to the mylar. Then the engineer would lay out tracks using black tape. It was quite a puzzle: with the very first track one put down one had to think ahead about where the others would go, so the board was routable. It could take weeks to lay out a complex board. We'd take the finished sheet to a local shop with a room-sized camera to get a 1:1 version on film, which went to the PCB vendor.)

There were no JTAG debuggers or even in-circuit emulators back then. Our instrument had a motherboard exactly like the Intellec's. We made two boards, one for the Intellec and one for the instrument, that just brought the busses to a ribbon cable. That cable connected the two motherboards. We'd remove the target CPU and memory boards and let the Intellec view the instrument as a bunch of peripherals on its bus.

Booting the Intellec was irksome. Using the switches on the front panel one would enter each instruction for an elementary loader in binary. Then you'd start the loader which would read a smarter loader from the TTY's paper tape. Later we got a ROM loader in the machine which hugely simplified the process. You'd key in a JMP instruction to ROM via the switches. Nearly half a century later I still remember that JMP instruction, having done this so many times: 01000100 00000000 00000001.

The 8008 needed three separate voltages: +5, -9, and +12, plus a two-phase clock input. It had a 7-word-deep stack built into the silicon (i.e., there was no accessible stack pointer).
Issue more than 7 calls or pushes without corresponding returns and pops, and the code crashed. Finding code that pushed just once too many times was a nightmare, as there was nothing like a trace capability. Interrupts, which were central to our application, only made the problem worse. Development was tedious, though we reveled in it at the time. After getting the loader running you'd read the editor in over the 10 CPS paper tape reader. That was fast, taking maybe half an hour. Type in your code (and there was no control-S to save anything) and then punch a tape of the source. One time the editor hiccuped and punched the entire source "file" backwards, starting with the last character and ending with the first. I had to retype the whole thing. Just like now, we used separate modules, so you'd repeat this effort for each, getting one tape per module. Then, load the assembler, another half hour or so. And this is where things got dicey: you'd load a source tape. Three times; it was, after all, a three-pass assembler. Often the TTY would misread a tape, maybe just one of the punched holes, and the assembler would give up, printing "phase error." That meant starting all over. When luck prevailed, the tool would punch an object tape. After assembling each module, time to load the linker. Feed the object tapes in - twice, as I recall - and if it was a sunny day and you had said your prayers that morning the linker would, at long last, punch a binary. That 8008 instrument's executable was just 4 KB. It took three days, starting with good source tapes, to assemble and link them. Sometimes we had to patch the paper tape by hand, filling in or adding holes as needed. Part of the program was a 32-bit floating-point library that did the four basic functions. Written by one Cal Ohne (Scott recalls the name as Cal Ohm), it amazingly fit into 1 KB. I was never able to learn anything about Cal (this was long before Google), but always marveled at this routine. 
All our code's calculations had to be in floating point, so his package was greatly appreciated. (In those days Intel had a software-sharing service. Engineers would submit code and Intel disseminated it to customers via snail mail. Cal's code was in that library.)

Oh, and that 4 KB program? Once we got the system working it was burned into EPROMs. Sixteen of them. For the only EPROM that existed then, the 1702A, could store a whopping 256 bytes. My memory is a bit shaky on this, but when Intel was the sole source for the 1702A I believe they cost around $100 each, which is roughly $600 today. Times sixteen chips for 4 KB of storage. And we used nearly every last byte. (We had to build our own EPROM programmer as Intel's didn't work reliably; after months of back-and-forth with them they adopted our programming algorithm.)

EPROMs were erased under an intense UV lamp. That was my first run-in with OSHA (the US's federal safety agency). The light leaked out and was visible to us. Turns out, UV coagulates the proteins in the eye.

Now we had a binary tape. A "monitor" program was, as I recall, in ROM and had a command for loading that tape. Then what? Why, you'd start the program. Which would always crash or otherwise fail, as "software engineering" hadn't come to the nascent and inchoate embedded industry, and "hope and hack" were the skills of the day.

The monitor did have some rudimentary debugging capabilities: it could display and change memory and/or the registers in hex and set up to two breakpoints. Other commands would load and punch tape and other housekeeping chores, but debugging capabilities were minimal. We'd find a problem and insert a binary patch. When there wasn't room to patch right on top of the offending code we'd stuff in a JMP to some unused memory, fill that with the right binary, and JMP back. It was critical to carefully mark up the listings with the patches so a later re-edit could correct the sources.
These patches were done by entering instructions in hex (via the monitor over the TTY) or binary (via the front-panel switches). Intel provided nice pocket reference cards containing all of the hex codes for the instructions, but after a while we knew pretty much all by heart.

Due to the three-day rebuild time we fixed the source tapes around once a month. Every night we'd punch the current binary image from RAM and reload that in the morning. And we'd punch more binaries throughout the day as crashes sometimes obliterated RAM.

Later, we added built-in calibration code that used an iterative multiple linear regression. If after 20 minutes the regression wouldn't converge the code stopped and displayed "HELP" on the instrument's 7-segment LEDs. We sold a lot of those products, but they became obsolete. Some years had gone by when a white-faced technician came into my office. One had failed in the field and he was fixing it. He told me "I don't know what I did, but the device is blinking HELP HELP at me!" That diagnostic had been long-forgotten.

As Steve and Max point out in their articles, this field is ever moving on, and in 1975 we built an entirely new version with an 8080 CPU. This part was introduced at $360 each ($1700 today). That was almost half a buck (today's dollars) per transistor in the thing. Today you can buy a 32-bit MCU with gobs of memory and I/O for the price of one of those transistors.

For development systems we bought two MITS Altair machines. The Altair revolutionized computing as it was offered, without memory, at $400 in kit form, not much more than the price of the CPU chip. Thousands were sold, and a couple of teenagers made a few bucks when they created a BASIC for it, launching Microsoft. But those Altairs were awful machines. Admittedly, we did get some of the early units and no doubt quality improved later, but with two we could barely keep one running.
The DRAM cards (all RAM in this machine was DRAM) were poorly designed and unreliable. In fact, DRAM poisoned itself! After months of work, the memory vendor discovered that the chip packaging materials emitted alpha particles that flipped stored bits.

For a time, we used the same sort of development strategy as for the 8008: we coupled the instrument's bus to the Altair's. Upgrading to a high-speed paper tape reader (though still hobbled by the TTY's slow punch) helped. But the Altairs' poor reliability hampered our efforts.

At this time Intel came out with their MDS-800 "blue box." Neotec was cash-strapped but somehow my boss acquiesced to my incessant pleadings and we bought one, for $20k, or about $100k today. The pressure at that company was inhuman, and he responded poorly, stamping around the building and yelling a lot... and then, one day after a mysterious week away, he mellowed to the point of near somnambulance. We later found his shrink had medicated him which greatly improved our lives. I think it was during one of these periods I got the go-ahead to order the thing, which says something about the value of drugs.

I can't find a copyright-free image of an MDS-800 that matches my memory, but it was big. Really big. And it had twin 8" floppy disk drives. Each disk could hold around 80 KB but compared to paper tape this was a gigantic leap ahead in storage size and speed. Assembling code meant listening to the heads saw back and forth over the disks for some minutes, reminding us of our earlier experiences with the Nova tape drives.

The MDS came with a new OS called, of all things in these troubled times, ISIS. We did get the optional in-circuit emulator (ICE). Now development meant removing the 8080 from the target and plugging the MDS/ICE connector into the microprocessor's socket. No, there was no trace or any of the features we take for granted today, but the MDS's capabilities greatly enhanced our hardware and firmware engineering.
Ironically, in the mid-80s I started an ICE company. And even more ironically, we started in this field in the pre-ICE days... and ICEs are long obsolete today. Well, this has gone on long enough, and I'm only up to the middle 70s. I hope the glimpse back gives you a sense of how far we've come over the course of a single career. Where will we be in another nearly half century? I can't imagine. Oh, and those Nova minis? We kept embedding them into products until the late 70s. It took a number of years until microprocessors could match their performance. The idea that micros abruptly replaced minis just isn't so. In fact, Scott and I, at this point working for ourselves, embedded a PDP-11 into a product in the early 80s, though it was coupled to a number of 8-bit Z80s. (Thanks to Scott Rosenthal for his help with my creaky memory. I'm sure there's an alpha emitter nearby!) |

||||

| This Week's Cool Product | ||||

I hope to wake up Christmas morning and find this under the tree:

This is Keysight's new UXR1104A Infiniium UXR-Series Oscilloscope. The most capable model has a 110 GHz bandwidth and samples at up to 256 GSa/s - on each of four channels! It comes with up to 2 GSa/channel of memory... which means that will fill in 10 ms. Fastest sweep rate: 1 ps/div. The rated typical jitter is better than 20 fs. Think about this: electrons in a wire move about 0.1 mm in 1 ps. At 42 kg you'll need help moving it around, but better be sure Brinks has some armed guards there. Keysight tells me pricing starts at $170k. According to this teardown video (which is a bit breathless) the top-end model is merely $1.3m. That video is quite interesting if a bit overlong (51 minutes). But it gives you the sense of the incredible amount of engineering that went into this product. Very cool, and, Santa, I've been a pretty good boy this year! Note: This section is about something I personally find cool, interesting or important and want to pass along to readers. It is not influenced by vendors. |

||||

| Jobs! | ||||

Let me know if you’re hiring embedded engineers. No recruiters please, and I reserve the right to edit ads to fit the format and intent of this newsletter.

Please keep it to 100 words. There is no charge for a job ad. |

||||

| Joke For The Week | ||||

Note: These jokes are archived here. How to program in C:

|

||||

| Advertise With Us | ||||

Advertise in The Embedded Muse! Over 28,000 embedded developers get this twice-monthly publication. |

||||

| About The Embedded Muse | ||||

The Embedded Muse is Jack Ganssle's newsletter. Send complaints, comments, and contributions to me at jack@ganssle.com. The Embedded Muse is supported by The Ganssle Group, whose mission is to help embedded folks get better products to market faster. |