
You may redistribute this newsletter for noncommercial purposes. For commercial use contact jack@ganssle.com. To subscribe or unsubscribe go to https://www.ganssle.com/tem-subunsub.html or drop Jack an email.


Editor's Notes

Court testimony about the recent Toyota ruling makes for interesting - and depressing - reading. A lot of code was involved and some of it was safety critical. Yet it seems the firmware was poorly engineered. No doubt the typical mad rush to get to market meant shortcuts that, at the time, were probably seen to save money. A billion dollars later (with many cases still pending) that judgment looks more foolish than sage.

After over 40 years in this field I've learned that "shortcuts make for long delays" (an aphorism attributed to J.R.R. Tolkien). The data is stark: doing software right means fewer bugs and earlier deliveries. Adopt best practices and your code will be better and cheaper. This is the entire thesis of the quality movement, which revolutionized manufacturing but has somehow largely missed software engineering. Studies have even shown that safety-critical code need be no more expensive than the usual stuff if the right processes are followed.

This is what my one-day Better Firmware Faster seminar is all about: giving your team the tools they need to operate at a measurably world-class level, producing code with far fewer bugs in less time. It's fast-paced, fun, and uniquely covers the issues faced by embedded developers. Information here shows how your team can benefit by having this seminar presented at your facility.

Quotes and Thoughts

"Passwords - Use them like a toothbrush. Change them often and don't share them with friends." - Clifford Stoll

Tools and Tips

Please submit clever ideas or thoughts about tools, techniques and resources you love or hate. Here are the tool reviews submitted in the past. Ray Keefe also likes the Rigol DS1102E oscilloscope:

Fabien Le Mentec has a nice piece about using the Beaglebone PRU:

Portuguese speakers may find this site, out of Brazil, interesting. Sistemas Embarcados means "embedded systems," which is what the site is all about.

Spectrum Analyzers

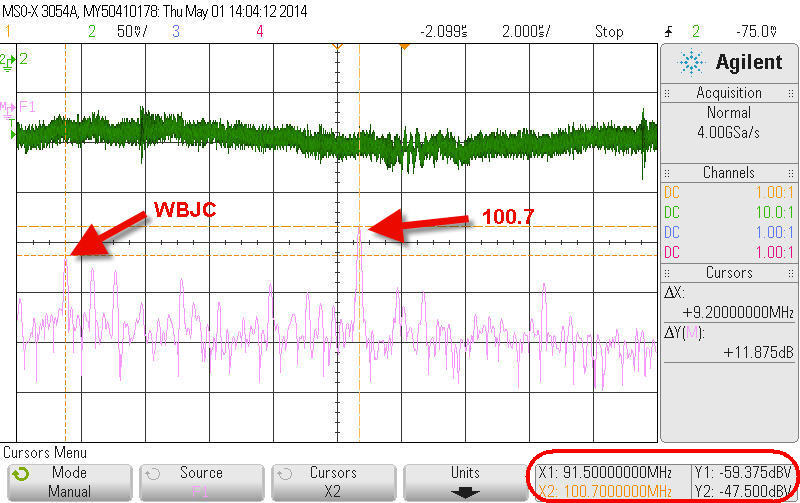

In the last Muse I provided a link to a (recommended) Agilent application note about spectrum analyzers, and noted that you'd need some background in radio theory to understand it. Many people had questions about that, and some asked for an explanation. First, when describing the picture I ran of a scope's FFT I mislabeled the signal levels. It's WBJC at -59 dBV, and the station at 100.7 MHz is stronger at -47 dBV:

The purpose of a spectrum analyzer (SA) is to show the amplitude of a signal in the frequency domain. The upper trace in the picture is that of the signal from an antenna in the time domain - that is, time is the horizontal axis. That's what oscilloscopes do: they show amplitude in volts vs time. The bottom trace is the same signal in the frequency domain: the horizontal axis is frequency. It shows the commercial FM band, from 90 to 110 MHz. The two labeled peaks are FM radio stations.

I used a scope doing a fast Fourier transform (FFT) to get the lower trace; SAs get the same sort of data in a completely different way.

SAs are expensive; why not just use a scope and FFT? The instruments have very different specifications. For instance, a decent spectrum analyzer will have a displayed average noise level (DANL) many orders of magnitude lower than any scope can achieve. This means the device can measure extremely weak signals. Where it's tough to see a 1 mV signal on a scope, a decent SA will give meaningful results on sub-microvolt signals, though SAs normally use dBm rather than volts.

To understand how an SA achieves its magic one must understand how superhet radios work. Don't worry - even for non-hardware people, this should be pretty easy to understand! (The following is simplified, but there are many excellent works about radio theory.)

Edwin Armstrong was one of the most prolific and interesting early electronic engineers, and in 1918 he invented the superheterodyne receiver, also called "superhet" for short. To this day this is the most common type of receiver. It gets around the limitations of the crystal set and those of other 1918-era radios.

Block diagram of a superhet radio, from Wikipedia.

The superhet has an amplifier that boosts the antenna's signal. Not a lot; at this point the signal is a jumble of noise from all sorts of transmitters. But then the magic of heterodyning takes place. A mixer takes both the RF signal and a sine wave from a local oscillator and "mixes" the two. The result is both the sum and the difference of the two inputs.

Suppose you'd like to listen to a station broadcasting on 100 MHz. If the local oscillator is producing an 80 MHz sine wave, then the mixer would output peaks at 180 MHz and 20 MHz (plus other signals we're not interested in). That is, your station, inside the radio, is now at those two frequencies.

A filter selects one of those images. Since they are so far apart, it's relatively easy to separate them. Generally the radio uses the mixer's lower frequency; the one passing through the filter is called the intermediate frequency (IF).

Most radios today repeat the process, mixing the first IF to a lower second IF, and sometimes even more stages are used. Each stage allows sharper filtering, and thus better station selectivity. And, since the bandwidth of the signal being passed through the filters is so narrow, enormous amounts of gain are possible, increasing the radio's sensitivity. A demodulator (AKA detector) extracts audio, which is amplified and fed to speakers.

One way to implement a radio is to use filters at fixed frequencies. To tune to a different station you change the local oscillator's frequency. This approach can greatly simplify the electronics since the filters don't need to be tunable.

A spectrum analyzer is, to a first approximation, just a superhet radio with a local oscillator that sweeps from a low to a high frequency. Generally it does this very quickly. As the oscillator sweeps, the display shows amplitude at each frequency. Consider the vast difference from an oscilloscope!
The scope's front end is basically just an amplifier feeding an A/D converter. It's sucking in everything it can with no selectivity. Internally a scope looks pretty much like any bit of electronics. Take apart a spectrum analyzer and it looks like plumbers gone wild, with waveguides and heavily-shielded boards.

Today, SAs are fabulously complex and often combine heterodyning and FFTs. The front end, though, remains radio-like with a swept local oscillator, though that may be divided into bands.

I mentioned that SAs generally display amplitude in dBm instead of volts. A dB is ten times the log of a power ratio:
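Written out as an equation, that definition would be the following, where p2 is the reference power and p1 is the power being compared to it (the name p1 is an assumption made here for illustration; the text names only p2):

```latex
\mathrm{dB} = 10 \log_{10}\!\left(\frac{p_1}{p_2}\right)
```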

A 3 dB drop means the signal's power is half of what it was. Actually, a drop by half is 3.01 dB down, since 10 log(0.5) is -3.01, but everyone rounds it off to 3. Bandwidth, like the bandwidth of a scope, is often specified at the 3 dB point, which is really important to understand. A 100 MHz scope may, for a 100 MHz signal, show half of the power, or about 70% of the voltage, that's really being probed.

When using dBs with voltages the formula is 20, not 10, times the log of the ratio. That's because power is V²/R, and the log of V² is 2 log(V).

dBs are unitless, but sometimes we want units. dBm is dB referenced to 1 mW; p2 in the equation is 0.001 Watt. 1 milliwatt is 0 dBm; 1000 mW (1 W) is 30 dBm, and one million Watts is 90 dBm. With SAs we're often working with very low-level signals so they have negative levels. -30 dBm is one uW.

dBs and dBms may seem a bit hard to grok at first, but with a bit of practice become a natural way to think about amplitudes. It's a lot easier to write "-30 dBm" than count the zeroes in 0.000001 Watt.

So there you have it - almost 100 years ago Armstrong revolutionized radios with the superheterodyne receiver, which is also the basis of the spectrum analyzer. Software defined radios aim to connect the antenna directly to the A/D, perhaps with a bit of amplification first, but at this point that process can't reach the performance of the century-old superhet.
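For readers who'd rather check the arithmetic than take it on faith, here is a minimal Python sketch of the two calculations in this section: the dB and dBm conversions, and the sum and difference frequencies that come out of a mixer. The function names, the 500 MHz sample rate and the 500-sample record length are all assumptions chosen for illustration. The mixer is modeled as an ideal multiplier, which is a simplification of real hardware.

```python
import cmath
import math


def db_from_power_ratio(p1, p2):
    """dB for a power ratio: ten times the log of p1/p2."""
    return 10.0 * math.log10(p1 / p2)


def db_from_voltage_ratio(v1, v2):
    """dB for a voltage ratio (same resistance): twenty times the log of v1/v2."""
    return 20.0 * math.log10(v1 / v2)


def watts_to_dbm(watts):
    """dBm is dB referenced to 1 mW (0.001 W)."""
    return db_from_power_ratio(watts, 0.001)


def dbm_to_watts(dbm):
    """Inverse of watts_to_dbm."""
    return 0.001 * 10.0 ** (dbm / 10.0)


def mixer_peaks(rf_mhz, lo_mhz, sample_rate_mhz=500, n=500):
    """Multiply two sine waves (an idealized mixer) and return the two
    strongest frequencies, in MHz, found in the product.

    The sample rate and record length are illustrative assumptions; both
    output frequencies must lie below half the sample rate.
    """
    mixed = [
        math.sin(2 * math.pi * rf_mhz * i / sample_rate_mhz)
        * math.sin(2 * math.pi * lo_mhz * i / sample_rate_mhz)
        for i in range(n)
    ]
    bin_mhz = sample_rate_mhz / n
    magnitudes = []
    for k in range(1, n // 2):
        # Plain discrete Fourier transform of one frequency bin.
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                  for i, x in enumerate(mixed))
        magnitudes.append((abs(acc), k * bin_mhz))
    strongest = sorted(magnitudes, reverse=True)[:2]
    return sorted(freq for _, freq in strongest)


if __name__ == "__main__":
    print("mixer outputs (MHz):", mixer_peaks(100, 80))
    for watts in (0.001, 1.0, 1e6, 1e-6):
        print(f"{watts:g} W is {watts_to_dbm(watts):.1f} dBm")
    print(f"half power is {db_from_power_ratio(0.5, 1.0):.2f} dB")
    print(f"-3 dB as a voltage ratio is {10 ** (-3 / 20):.2f}")
```

With a 100 MHz station and an 80 MHz local oscillator, the two strongest components of the product land at 20 and 180 MHz, matching the example above. The last line shows why a scope at its 3 dB bandwidth displays roughly 70% of the true voltage rather than half of it.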

Reflections on a Career

Harley Burton, a long-time Muse reader, retired recently. He sent the story of his career, which I found illuminating:

So I asked Harley what advice he'd give to a young engineer:

I think another bit of advice lies in his stories of disruptions and layoffs: save money. With money in the bank one has options.

Rate-Monotonic Scheduling

Normal preemptive multitasking is not deterministic. At any point a perfect storm of interrupts and task demands can cause a system to miss a deadline. In a hard-real-time system, this may cause catastrophic failure.

One well-known solution is rate-monotonic scheduling (RMS, also known as rate-monotonic analysis). With RMS one gives the highest priority to the tasks that run most often. Given the execution time and frequency of each task, with a bit of simple math it's possible to figure out whether RMS scheduling will be deterministic. That is, one can prove that the system will always meet deadlines. Phil Koopman's May 12 blog posting is a must-read about RMS.

Though RMS is often pushed as "the" solution to determinism woes, I am somewhat troubled by it. It fails without quantitative knowledge of both the worst-case execution time (WCET) and period for each task. Period is problematic when tasks are initiated by external events (which is an argument for a design where that does not happen). WCET can only be determined ex post facto - after the code is written. One does a careful design and a tedious implementation, and only then, late in the game, does one know if the system will be reliable.

WCET is especially tough because it may vary hugely depending on inputs. Floating point execution times can be all over the map depending on the arguments. How can one be sure that the tests elicited the worst of the worst case timings?

Then there are the inevitable changes. Marketing asks for some feature change. To ensure that the slightly-modified system is still schedulable, one has to re-measure all of the WCETs. Not impossible, of course, but a huge time sink that will, in the shipping frenzy, be neglected more often than not.

Phil agreed that these measurements are required, and stressed how important this is for safety-critical systems. It's just the cost of doing business in that space.
True, but where does that leave non-safety-critical devices, which make up the bulk of the market? Phil is a big proponent of adding run-time code that monitors system performance. Perhaps an API could be defined where each task checks in on entry and exit; the OS or other component then monitors timing. That argues for even more slack in the CPU's loading. And I'd want the ability to get a report about observed timings, so one could see how close the system is to failing.

What's your take? How many of you are using RMS, and if not, what do you do?
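To make two of these ideas concrete, here is a rough Python sketch. It is an illustration under stated assumptions, not anyone's production design. The first part is one common form of the "simple math": the classic Liu and Layland utilization bound, which the discussion above doesn't name, so treat that choice as an assumption. It applies to independent periodic tasks whose deadlines equal their periods, and it is sufficient but not necessary: a task set that exceeds the bound may still be schedulable, but this test can't prove it. The second part is a hypothetical check-in/check-out monitor of the sort suggested above; `TimingMonitor` and its methods are invented names, not an existing RTOS API.

```python
import time


def rms_utilization_bound(n):
    """Liu and Layland bound for n periodic tasks: n * (2**(1/n) - 1)."""
    return n * (2.0 ** (1.0 / n) - 1.0)


def rms_check(tasks):
    """tasks is a list of (wcet, period) pairs in the same time unit.

    Returns (utilization, bound, guaranteed). guaranteed is True when total
    CPU utilization is at or below the bound; False means "not proven by
    this test", not "will miss deadlines".
    """
    utilization = sum(wcet / period for wcet, period in tasks)
    bound = rms_utilization_bound(len(tasks))
    return utilization, bound, utilization <= bound


def rms_priorities(tasks):
    """Task indices ordered from highest priority (shortest period) down."""
    return sorted(range(len(tasks)), key=lambda i: tasks[i][1])


class TimingMonitor:
    """Hypothetical run-time monitor: tasks check in on entry and out on exit."""

    def __init__(self, clock=time.perf_counter):
        self._clock = clock      # injectable so a hardware timer could be used
        self._started = {}       # task name -> time of the pending check-in
        self._worst = {}         # task name -> longest observed run time

    def check_in(self, name):
        self._started[name] = self._clock()

    def check_out(self, name):
        elapsed = self._clock() - self._started.pop(name)
        self._worst[name] = max(elapsed, self._worst.get(name, 0.0))

    def report(self, budgets):
        """budgets maps task name to the WCET assumed in the analysis.

        Returns {name: (worst observed time, remaining margin)}.
        """
        return {name: (worst, budgets[name] - worst)
                for name, worst in self._worst.items()}


if __name__ == "__main__":
    tasks = [(1, 4), (2, 8), (3, 20)]   # made-up (WCET, period) values in ms
    utilization, bound, guaranteed = rms_check(tasks)
    print(f"utilization {utilization:.2f}, bound {bound:.3f}, guaranteed {guaranteed}")
    print("priority order:", rms_priorities(tasks))
```

For three tasks the bound is about 0.78, and it falls toward ln 2 (about 0.69) as the task count grows, which is one reason slack in CPU loading matters. Note that the monitor only reports the worst time it happened to observe; it can't show that the true worst case ever occurred.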

Jobs!

Let me know if you’re hiring embedded engineers. No recruiters please, and I reserve the right to edit ads to fit the format and intents of this newsletter. Please keep it to 100 words.

Joke For The Week

Note: These jokes are archived at www.ganssle.com/jokes.htm. Steve Leibson sent a link to a programmer's t-shirt that I sure want: http://dashburst.com/humor/programmer-definition/

Advertise With Us

Advertise in The Embedded Muse! Over 23,000 embedded developers get this twice-monthly publication.

About The Embedded Muse

The Embedded Muse is Jack Ganssle's newsletter. Send complaints, comments, and contributions to me at jack@ganssle.com. The Embedded Muse is supported by The Ganssle Group, whose mission is to help embedded folks get better products to market faster.