Automatically Debugging Firmware

By Jack Ganssle

Major rewrite: May, 2014

Initial release: February, 2007

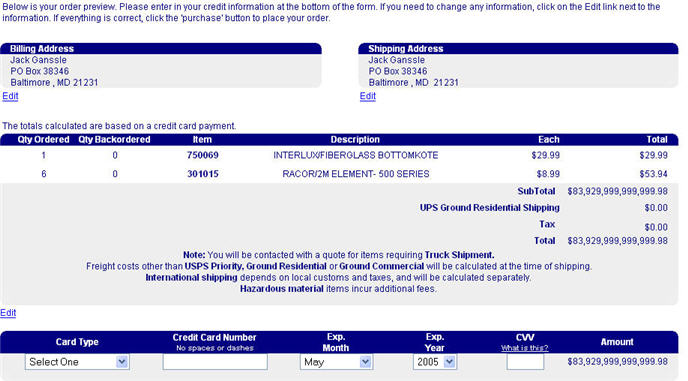

It's hard to remember life before the web. Standing in lines to buy stuff and mail-ordering from out-of-date print catalogs were frustrations everyone accepted. But today many of those problems are long gone, replaced by sophisticated software that accepts orders on-line. For instance, the figure below shows the result of an order I tried to place with Defender in 2006. $29.99 plus $53.94 equals almost $84 billion?

I was tempted to complete the transaction to see my credit card burst into flames.

How did we manage in the pre-computer age?

At least they didn't charge tax, though it would be nearly enough to pay off the national debt.

Many decades ago, when I was a student in a college Fortran class, the instructors admonished us to check our results. Test the goesintas and goesoutas in every subroutine. Clearly, Defender's clever programmers never got this lesson. And in most of the embedded code I read today, few of us get it either. We assume the results will be perfect.

But they're often not. Ariane 5's maiden launch in 1996 failed spectacularly, at the cost of half a billion dollars, due to a minor software error: an overflow when converting a 64-bit float to a 16-bit integer. The code inexplicably did not do any sort of range checking on a few critical variables.
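A range check of that kind need not be elaborate. The following is a minimal sketch in C, not a reconstruction of the Ariane code (which was written in Ada); the function name `double_to_int16_checked` is hypothetical, and the policy of rejecting the value rather than saturating it is an assumption.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical helper: convert a double to int16_t only if it fits.
   Returns false, leaving *out untouched, when the value is out of range
   or is NaN (every comparison against NaN is false). */
bool double_to_int16_checked(double value, int16_t *out)
{
    if (!(value >= (double)INT16_MIN && value <= (double)INT16_MAX)) {
        return false;
    }
    *out = (int16_t)value;   /* in range, so this truncates toward zero */
    return true;
}
```

The caller decides what a rejected conversion means for the system; the point is only that the overflow cannot pass unnoticed.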


Every function and object is saddled with baggage, assumptions we make about arguments and the state of the system. Those assumptions range from the ontological nature of computers (the power is on) to legal variable ranges to subtle dependency issues (e.g., interrupts off). Violate one of these assumptions and Bad Things will happen.

Why should anything be right? Isn't it possible that through some subtle error propagated through layers of inscrutable computation a negative number gets passed to the square root approximation? If one reuses a function written long ago by some dearly departed developer it's all-too-easy to mistakenly issue the call with an incorrect argument, or to make some equally erroneous assumption about the range of values that can be returned.

Consider:

float solve_pos(float a, float b, float c){

float result;

result=(-b + sqrt(b*b - 4*a*c))/(2*a);

return result;

}

...which computes the positive root of a quadratic equation. I compiled it and a driver under Visual Studio, with the results shown below.

The code runs happily even as it computes an impossible result, as is C's wont. The division by zero that results when "a" is zero creates neither an exception nor a signal to the user that something is seriously, maybe even dangerously, amiss. What's worse is that this bogus result will almost certainly be untested and passed up the call chain, where it's used by other calculations. Eventually, some code will use this to figure how many gallons of morphine to push into the patient.

Bertrand Meyer thinks languages should include explicit constructs that make it easy to validate the hidden and obvious assumptions that form a background fabric to our software. His must-read Object-Oriented Software Construction (at 1200 pages a massive tome which is sometimes pedantic but is brilliantly written) describes Eiffel, which supports Design By Contract (DBC, and a trademark of Eiffel Software). This work is the best extant book about DBC.

In important human affairs, from marriage to divorce to business relationships, we use contracts to clearly specify each party's obligations. The response "I do" to "Do you promise to love and to hold . . ." is a contract, an agreement between the parties about mutual expectations. Break a provision of that or any other contract and lawyers will take action to mediate the dispute . . . or to at least enrich themselves. Meyer believes software components need the same sort of formal and binding specification of relationships.

DBC asks us to explicitly specify these contractual relationships. The compiler will generate additional code to check them at runtime. That immediately rules the technique out for small, resource-constrained systems. But today, since a lot of embedded systems run with quite a bit of processor and memory headroom, it's not unreasonable to pay a small penalty to get significant benefits. If one is squeezed for resources, DBC is one of the last things to remove. What's the point of getting the wrong answer quickly?

And those benefits are substantial:

- The code is more reliable since arguments and assumptions are clearly specified in writing.

- They're checked every time the function is called so errors can't creep in.

- It's easier to reuse code since the contracts clearly specify behaviors; one doesn't have to reverse engineer the code.

- The contracts are a form of documentation that always stays in-sync with the code itself. Any sort of documentation drift between the contracts and the code will immediately cause an error.

- It's easier to write tests since the contracts specify boundary conditions.

- Function designs are clearer, since the contracts state, very clearly, the obligations of the relationship between caller and callee.

- Debugging is easier since any contractual violation immediately tosses an exception, rather than propagating an error through many nesting levels.

- Maintenance is easier since the developers, who may not be intimately familiar with all of the routines, get a clear view of the limitations of the code.

- Peace of mind. If anyone misuses a routine, they'll know about it immediately.

Software engineering is topsy-turvy. In all other engineering disciplines it's easy to add something to increase operating margins. Beef up a strut to take unanticipated loads or add a weak link to control and safely manage failure. Use a larger resistor to handle more watts or a fuse to keep a surge from destroying the system. But in software a single bit error can cause the system to completely fail. DBC is like adding fuses to the code. The system is going to crash; it's better to do so early and seed debugging breadcrumbs than fail in a manner that no one can subsequently understand.

Assertions as Contracts

Alas, there are no contracts in C. In a previous version of this paper I suggested using a free preprocessor to implement them. Fact is, no one does. How can we get the benefits of contracts using standard C?

Use the assert() macro. It's part of standard C and is supported by every compiler, yet it is rarely used in embedded systems. I searched 15 million lines of firmware from various clients and found exactly zero instances of assert() in the code.

assert() does nothing if its argument is TRUE. If FALSE, assert() printfs its filename and line number, which is generally pretty useless as so many embedded systems can't do a printf. The code, supplied with the compiler, generally looks like this:

#define assert(e) ((void) ((e) ? 0 : __assert (#e, __FILE__, __LINE__)))

#define __assert(e, file, line) ((void)printf ("%s:%u: failed assertion `%s'\n", file, line, e), abort())

It's trivial to modify this to do something appropriate for your application. That could be as simple as storing breadcrumbs (the source of the assertion) in a register and entering an infinite loop; make a practice of setting a breakpoint on that loop. If memory is flush you can log errors or take other action.
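As a rough illustration of that modification, here is a minimal sketch of a project-specific assertion that stores a breadcrumb and then parks the CPU. Every name in it (`FW_ASSERT`, `fw_assert_failed`, `g_assert_crumb`, `g_assert_policy` and the two policy functions) is hypothetical, and a plain global stands in for the register or reset-surviving RAM a real target might use.

```c
#include <stdint.h>

/* Breadcrumb left behind by a failed assertion. A plain global is an
   assumption made to keep this sketch portable. */
struct assert_crumb {
    const char *file;    /* source file of the failing assertion */
    uint32_t    line;    /* line number of the failing assertion */
    uint32_t    count;   /* how many assertions have failed so far */
};
volatile struct assert_crumb g_assert_crumb;

/* Policy 1: spin forever. Set a debugger breakpoint on this loop. */
void assert_policy_halt(void)
{
    for (;;) {
    }
}

/* Policy 2: keep running after the breadcrumb is stored, for systems
   with enough memory to log errors and carry on. */
void assert_policy_log_only(void)
{
}

/* Hypothetical hook selecting what happens after the breadcrumb is stored. */
void (*g_assert_policy)(void) = assert_policy_halt;

void fw_assert_failed(const char *file, uint32_t line)
{
    g_assert_crumb.file = file;
    g_assert_crumb.line = line;
    g_assert_crumb.count++;
    g_assert_policy();
}

/* Does nothing when e is true; otherwise records where it failed. */
#define FW_ASSERT(e) \
    ((e) ? (void)0 : fw_assert_failed(__FILE__, (uint32_t)__LINE__))
```

Routing the failure through a function pointer is one design choice among several; it lets the same macro halt on the target and merely record failures in a host-side test build.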

Let's take a quick look at the benefits of the liberal use of assertions.

Studies show that the more assertions are used, the lower the number of shipped bugs:

Other research (L. Briand, Y. Labiche, and H. Sun, "Investigating the Use of Analysis Contracts to Support Fault Isolation in Object Oriented Code", Proceedings of International Symposium on Software Testing and Analysis, pp. 70-80, 2002) shows that not only is the code better, it's cheaper. The following graph shows the cost to find and fix a bug; green bugs were found by asserts, blue by testing:

Faster and cheaper. And yet, asserts are rare in firmware. That's puzzling and disturbing.

Where should we use assertions? First, they are not for detecting erroneous inputs. For instance, you wouldn't use one to flag a typo from a keyboard. Use asserts to detect real bugs. What we want to do is stop the program, now, as soon as the error occurs and before an incorrect result is propagated up the call chain. Fail fast.

Check things that seem obvious. Though any idiot knows that you can't pull from an empty queue, that idiot may be you months down the road when, in a panic to ship, that last minute change indeed does assume a "get()" is always OK.
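The queue case might look like the following minimal sketch. The `struct queue` layout, the capacity, and the function names are all hypothetical; the point is only where the asserts sit.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define QUEUE_SIZE 8u   /* hypothetical capacity */

struct queue {
    uint8_t  data[QUEUE_SIZE];
    unsigned head;    /* index of the next byte to get */
    unsigned tail;    /* index of the next free slot */
    unsigned count;   /* number of bytes currently stored */
};

void queue_put(struct queue *q, uint8_t byte)
{
    assert(q != NULL);
    assert(q->count < QUEUE_SIZE);   /* caller must never overfill */
    q->data[q->tail] = byte;
    q->tail = (q->tail + 1u) % QUEUE_SIZE;
    q->count++;
}

uint8_t queue_get(struct queue *q)
{
    assert(q != NULL);
    assert(q->count > 0u);           /* "obvious": never pull from an empty queue */
    uint8_t byte = q->data[q->head];
    q->head = (q->head + 1u) % QUEUE_SIZE;
    q->count--;
    return byte;
}
```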

If you don't know what a function should do in an abnormal situation, explicitly exclude that condition using an assert.

Assert obvious assumptions about the hardware as well, since months or years later a change in the code or an unexpected hardware modification may create error conditions. If the scale's hardware simply can't weigh anything over 100 grams, include an assert to ensure that assumption never changes.

If a switch statement's default clause can never be taken, that's a great place for an assert().
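The scale limit and the unreachable default clause can be sketched together. The weight bands, the postage figures, and all the names below are invented for illustration.

```c
#include <assert.h>
#include <stdint.h>

#define SCALE_MAX_GRAMS 100u   /* hypothetical hardware limit */

enum weight_band { BAND_LIGHT, BAND_MEDIUM, BAND_HEAVY };

enum weight_band classify_weight(uint32_t grams)
{
    assert(grams <= SCALE_MAX_GRAMS);   /* the hardware cannot report more */
    if (grams < 10u) {
        return BAND_LIGHT;
    }
    if (grams < 50u) {
        return BAND_MEDIUM;
    }
    return BAND_HEAVY;
}

uint32_t postage_cents(enum weight_band band)
{
    switch (band) {
    case BAND_LIGHT:  return 50u;
    case BAND_MEDIUM: return 120u;
    case BAND_HEAVY:  return 300u;
    default:
        assert(0);    /* no other band exists; getting here is a bug */
        return 0u;
    }
}
```

If someone later adds a fourth band and forgets to update `postage_cents()`, the default clause fires the first time the new value shows up.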

Looking back at the quadratic equation solver, there are at least three ways it can fail. Here it is, properly instrumented:

#include <assert.h>

#include <math.h>

/* Find the positive solution of a quadratic */

float solve_pos(float a, float b, float c){

float result;

assert (a != 0);

assert ((b*b - 4*a*c) >= 0);

result = (-b + sqrt(b*b - 4*a*c))/(2*a);

assert (isfinite(result));

return result;

}

The triptych of asserts traps the three ways that this, essentially one line of code, can fail. Isn't that astonishing? A single line possibly teeming with mistakes. Yet the code is correct. Problems stem from using it incorrectly, the very issue assert prevents. So rather than "failure" think of the assertions as provisions that define and enforce the relationship between the function and the caller. In this case, the caller may not supply zero for a, to avoid a divide by zero. If (b*b-4*a*c) is negative sqrt() will fail. And a very small a could overflow the capacity of a floating point number.

Computing (b*b-4*a*c) twice looks computationally expensive. It may not be. I compiled this with GCC and found it computed once, and the result was pushed on the stack for use later. Even on a little ARM Cortex-M CPU that assertion will cost a microsecond or so.
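One way to make the contractual reading of assertions explicit is to give preconditions and postconditions their own names. This is a minimal sketch, assuming nothing beyond standard `assert()`; the `REQUIRE` and `ENSURE` macros and the `percent_of` function are hypothetical and not part of any standard header.

```c
#include <assert.h>
#include <stdint.h>

#define REQUIRE(cond) assert(cond)   /* precondition: the caller's obligation */
#define ENSURE(cond)  assert(cond)   /* postcondition: the function's promise */

/* Returns part as a whole-number percentage of whole, rounded down. */
uint32_t percent_of(uint32_t part, uint32_t whole)
{
    REQUIRE(whole != 0u);       /* no divide by zero */
    REQUIRE(part <= whole);     /* a part cannot exceed the whole */

    /* 64-bit intermediate so part * 100 cannot overflow */
    uint32_t result = (uint32_t)(((uint64_t)part * 100u) / whole);

    ENSURE(result <= 100u);
    return result;
}
```

The two macros behave identically; the different names only document which side of the relationship broke the contract when one fires.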

Side Effects

There is one huge problem with the assert() macro. It can cause side effects. Since it essentially goes away when the debug switch is turned off, if its argument uses the assignment operator, does a function call, or takes some other action that causes a change in the program's behavior, then the program will run differently depending on the definition of NDEBUG. A better solution is to define your own assert:

#define assert_e(a) ((void)(a), assert(a))

Couple the above definition with the use of PC-Lint (you do use Lint, don't you? It's an essential part of any development environment) to flag an assertion that uses assignments or function calls.

Using Gimpel's Lint (www.gimpel.com) add this command to the code:

//lint -emacro(730,assert_e)

Now, Lint will be happy with an assertion like:

assert_e(n >=0);

But will give warning 666 ("expression with side effects") for assertions like:

assert_e(m=n);

or

assert_e(g(m));
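To see why side effects in an assertion matter, consider this minimal sketch. `ASSERT_DEBUG` and `ASSERT_RELEASE` are hypothetical stand-ins for how a plain `assert()` typically expands without and with NDEBUG defined; they exist only so both behaviors can sit side by side in one file.

```c
#include <stdlib.h>

/* Stand-ins for assert() in a debug build and in an NDEBUG build. */
#define ASSERT_DEBUG(e)   ((e) ? (void)0 : abort())
#define ASSERT_RELEASE(e) ((void)0)

static int samples_taken;

/* A function with a side effect: it bumps a counter on every call. */
static int take_sample(void)
{
    return ++samples_taken;
}

int run_debug_build(void)
{
    samples_taken = 0;
    ASSERT_DEBUG(take_sample() > 0);    /* take_sample() really runs */
    return samples_taken;
}

int run_release_build(void)
{
    samples_taken = 0;
    ASSERT_RELEASE(take_sample() > 0);  /* argument vanishes, no call is made */
    return samples_taken;
}
```

The same source line takes a sample in one build and not in the other, which is exactly the kind of difference that shows up only after the debug switch is turned off.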

Conclusion

Southwest's flight status page

A recent February's snowstorms generated a flurry of flight cancellations. This figure shows that, though cancelled, my flight to Chicago was still expected to arrive on time. Clearly, we programmers continue to labor under the illusion that, once a little testing is done, the code is perfect.

eXtreme Programming, Test-Driven Development, and other agile methods stress the importance of writing test code early. Using lots of assertions complements that idea, adding built-in tests to ensure we don't violate design assumptions.

I started this report mentioning Eiffel, a language that includes a complete DBC mechanism. Yet Eiffel has only a microscopic presence in the embedded space. SPARK, a subset of Ada, is an intriguing alternative that includes pre-compile-time contract checking. That's not a misprint; it has an extremely expressive contract language that transcends the contracts in Eiffel. SPARK's analysis tools examine the code before compilation, flagging any state the code can enter which will break a contract. That's a fundamentally different approach to the run-time checking of contracts, and to debugging in general. If you carefully define the contracts and fix any flaws detected by the tools, the SPARK tools can guarantee your program free from large classes of common defects, including all undefined and implementation-dependent behaviors, data-flow errors and so-called "run-time errors" like buffer overflow, arithmetic overflow and division by zero.

Finally, Dan Saks wrote a very interesting article about building compile-time assertions - again, constructs that catch some classes of errors while you compile, without the use of external tools. The approach is not nearly as comprehensive as DBC, but it is a fascinating way to use the preprocessor to stomp out bugs early.
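One widely used form of the idea, shown here as a minimal sketch and not necessarily the exact macro from that article, declares an array type whose size is negative when the condition is false, which the compiler must reject. The macro name, the `packet_header` struct, and the size requirement are hypothetical; C11 compilers also offer the built-in `_Static_assert` for the same purpose.

```c
#include <stdint.h>

/* Compiles to nothing when cond is true; when cond is false the array
   size is -1 and compilation fails at this line. */
#define COMPILE_TIME_ASSERT(cond, name) typedef char name[(cond) ? 1 : -1]

/* Hypothetical wire-format header that must stay exactly 4 bytes. */
struct packet_header {
    uint8_t  type;
    uint8_t  flags;
    uint16_t length;
};

COMPILE_TIME_ASSERT(sizeof(struct packet_header) == 4, packet_header_must_be_4_bytes);
COMPILE_TIME_ASSERT(sizeof(uint32_t) == 4, uint32_must_be_4_bytes);

unsigned packet_header_size(void)
{
    return (unsigned)sizeof(struct packet_header);
}
```

Because the check costs nothing at run time, it suits assumptions about type sizes and structure layout that can be settled before the code ever runs.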