The complete index to reviews of embedded books is here.

Agile!, Bertrand Meyer

Bertrand Meyer is one of the most literate authors in computer science. His latest work, Agile!, is an example. It's a 170 page summary and critique of the leading agile methods. The introduction gives his theme: "The first presentations of structured programming, object technology, and design patterns... were enthusiastically promoting new ideas, but did not ignore the rules of rational discourse. With agile methods you are asked to kneel down and start praying." The book burns with contempt for the eXtreme attitudes of many of agile's leading proponents. He asks us to spurn the "proof by anecdote" so many agile books use as a substitute for even a degraded form of the scientific method.

This is a delightful, fun, witty and sometimes snarky book. The cover's image is a metaphor for the word "agile": a graceful ballet dancer leaping through the air. He contrasts that with the agile manifesto poster: "The sight of a half-dozen middle-aged, jeans-clad, potbellied gentlemen turning their generous behinds toward us..."!

He quotes Kent Beck's statement "Software development is full of the waste of overproduction, [such as] requirements documents that rapidly go obsolete." Meyer could have, and should have, made a similar statement about software going obsolete, and that both software and requirements suffer from entropy, so require a constant infusion of maintenance effort. But he writes "The resulting charge against requirements, however, is largely hitting at a strawman. No serious software engineering text advocates freezing requirements at the beginning. The requirements document is just one of the artifacts of software development, along with code modules and regression tests (for many agilists, the only artifacts worthy of consideration) but also documentation, architecture descriptions, development plans, test plans, and schedules. In other words, requirements are software. Like other components of the software, requirements should be treated as an asset; and like all of them, they can change".

He describes the top seven rhetorical traps used by many agile proponents. One is unverified claims. But then he falls into his own trap by saying "refactored junk is still junk."

The book's subtitle is "The good, the hype, and the ugly," and he uses this to parse many agile beliefs. Meyer goes further and adds the "new," with plenty of paragraphs describing why many of these beliefs are actually very old aspects of software engineering. I don't see why that matters. If agile authors coopt old ideas they are merely standing on the shoulders of giants, which is how progress is always made (note that last clause is an unverified claim!).

The book is not a smackdown of agile methods. It's a pretty carefully reasoned critique of the individual and collective practices. He does an excellent job highlighting those of the practices he feels have advanced the state of the art of software engineering, while in a generally fair way showing that some of the other ideas are examples of the emperor having no clothes. For instance, he heaps praise on daily stand up meetings (which Meyer admits are not always practical, say in a distributed team), Scrum's insistence on closing the window on changes during a sprint, one month sprints, and measuring a project's velocity. (I, too, like the agile way of measuring progress but hate the word "velocity" in this context. Words have meaning. Velocity is a vector of speed and direction, and in agile "velocity" is used, incorrectly, to mean speed).

One chapter is a summary of each of the most common agile methods, but the space devoted to each is so minimal that those not familiar with each approach will learn little.

Agile! concludes with a chapter listing practices that are "bad and ugly," like the deprecation of up-front tasks (e.g., careful requirements gathering), "the hyped," like pair programming ("hyped beyond reason"), "the good," and "the brilliant." Examples of the latter include short iterations, continuous integration and the focus on test.

The book is sure to infuriate some. Too many people treat agile methods as religion, and any debate is treated as heresy. Many approaches to software engineering have been tried over the last 60 years and many discarded. Most, though, contributed some enduring ideas that have advanced our body of knowledge. I suspect that a decade or two hence the best parts of agile will be subsumed into how we develop code, with new as-yet-unimagined concepts grafted on.

I agree with most of what Meyer writes. Many of the practices are brilliant. Some are daft, at least in the context of writing embedded firmware. In other domains like web design perhaps XP is the Only Approach To Use. Unless TDD is the One True Answer. Or Scrum. Or FDD. Or Crystal...

Agile Estimating and Planning, Mike Cohn

In the technical arena I greatly enjoyed 'Agile Estimating and Planning,' by Mike Cohn. Mike knows how to write in an engaging manner on subjects that are inherently rather dry.

This book is of value for anyone building software, whether working in an agile environment or a more traditional plan-driven shop. Possibly the main agile thrust of the book is Mike's insistence on re-estimating frequently, whenever there's new information. But wise managers should stay on top of schedules which always evolve, no matter what approach is used to build the system. Re-estimation might not be common, but bad estimates are.

He does use a lot of agile terminology like 'story points,' and he makes good arguments for decomposing a system into features or components of features. If your outfit is more plan-driven everything he says still applies.

Though Mike never uses the word 'Delphi' his approach is awfully close to the method the Rand Corporation invented, and that has been very successful on software projects (for more, see http://www.processimpact.com/articles/delphi.html).

A key concept is that of 'velocity,' or keeping track of the rate of software development. That gets fed back into the evolving schedule to converge on a realistic date relatively early. Of course, velocity fails when the team accepts scope changes without doing a re-estimate. Agile developers use short iterations and frequent deliveries to elicit these changes early; many projects just can't do that and so should employ a change control procedure that tells the stakeholders the impact of a revised requirement. There's nothing on change control in the book, which is probably appropriate but a shame.
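The velocity feedback loop can be sketched in a few lines of C. This is only an illustration, not Cohn's method verbatim: the function names, the plain averaging of past iterations, and the sample numbers in the note below are all assumptions made for the sketch.

```c
#include <stddef.h>

/* Mean story points completed per iteration (hypothetical helper).
 * Returns 0.0 when there is no history yet. */
double average_velocity(const int *points_done, size_t n_iterations)
{
    if (n_iterations == 0) {
        return 0.0;
    }
    long total = 0;
    for (size_t i = 0; i < n_iterations; i++) {
        total += points_done[i];
    }
    return (double)total / (double)n_iterations;
}

/* Iterations still needed, rounded up; -1 if velocity is unknown or zero. */
int iterations_remaining(int points_left, double velocity)
{
    if (velocity <= 0.0) {
        return -1;
    }
    int whole = (int)(points_left / velocity);
    /* Round up when a partial iteration of work is left over. */
    if ((double)whole * velocity < (double)points_left) {
        whole++;
    }
    return whole;
}
```

With a made-up history of 18, 22 and 20 points, the average velocity is 20, so 130 remaining points forecast 7 more iterations. If scope grows and nobody re-runs the calculation, the forecast silently goes stale, which is the failure mode noted above.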

The book has no real-world data. There are plenty of wise words telling the reader how to make the right measurements in his project, but sample velocity and other sorts of metrics from real projects would be helpful to establish a range of benchmarks.

The chapter on making decisions based on financial impact to the company assumes one can quantitatively predict inherently hard-to-determine factors, like 'add feature X and we'll get 50 new customers.'

Mike recommends adding a buffer, and tries to distinguish this from schedule padding. Yet the boss may find it hard to make the distinction and will likely remove it. Buffers/padding/2x factors are all wonderful but are a poor substitute for a proper estimate.

Like many technical books this paints a picture that's incomplete, but it contains so much wisdom, and is such an easy read, that I recommend it highly. You may or may not be able to apply the techniques - but you'll come away thinking deeply about better ways to create and manage a realistic schedule.

The Art of Readable Code, Dustin Boswell and Trevor Foucher

In Muse 272 Paul Bennett mentioned the book The Art of Readable Code by Dustin Boswell and Trevor Foucher. I ordered a copy and found the book very readable. Fifteen short chapters fill this slender 180 page volume, which is divided into four parts:

* Surface level improvements

* Simplifying loops and logic

* Reorganizing your code

* Selected topics

Important points are broken out as "Key Ideas," and while some are obvious or trite, many are pithy. The very first is code should be easy to understand, which pretty much sums up the book's entire philosophy. I do wish there had been an appendix listing all of the key ideas.

Plenty of examples are given, in a variety of languages including C, C++, Python, Java and HTTP. Most of the ideas do apply to C.

The authors' treatment of names is spot on, and I'll bet many developers have never thought about some of the ideas. For instance, does CART_TOO_BIG_LIMIT = 10 mean the cart can have 10 items or 9? The name is ambiguous, and they suggest using max and min for limiting values.
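A short C sketch of that naming advice follows. The identifiers (MAX_ITEMS_IN_CART, cart_can_accept) are chosen here for illustration and are not copied from the book's listing; the point is only that a "max" prefix signals an inclusive limit.

```c
#include <stdbool.h>

/* Ambiguous: is a cart holding exactly 10 items "too big"? */
/* #define CART_TOO_BIG_LIMIT 10 */

/* Clearer: "max" signals an inclusive upper bound, so 10 items are allowed. */
#define MAX_ITEMS_IN_CART 10

/* True if one more item may be added to a cart that holds item_count items. */
bool cart_can_accept(int item_count)
{
    return item_count >= 0 && item_count < MAX_ITEMS_IN_CART;
}
```

A cart with 9 items can take one more and a cart with 10 cannot, and the name alone tells the reader which way the boundary falls.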

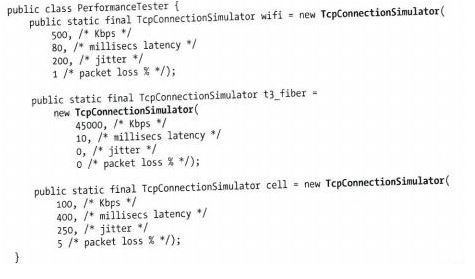

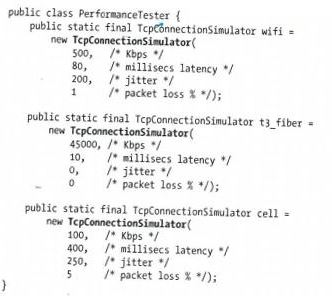

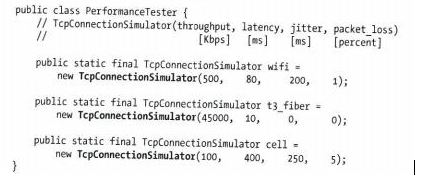

They refer to the overall look of a function as its "silhouette." They take this code:

. . . and improve the silhouette as follows:

. . . then take it a bit further:

The last one sure looks nicer than the first.

Their central Key Idea about comments is "The purpose of commenting is to help the reader know as much as the writer did." I'd add "at the time he wrote the code" as memories are feeble.

Interestingly, they do advocate using multiple returns and gotos where they might clean up what would otherwise be convoluted code. MISRA C advises against these constructs, rather than prohibiting them. However, some safety-critical standards require that functions have a single point of return.

A very brief bit of prose and an example touch on using De Morgan's laws to simplify logical structures. In school EEs De Morganize Boolean expressions ad nauseam; I wonder if the CS folks learn these useful techniques? If you're not familiar with De Morgan, this is a good site.

The example given is:

    if (!(file_exists && !is_protected)) do something;

which can be De Morganized to the easier to understand:

    if (!file_exists || is_protected) do something;

Chapter 12, "Turning Thoughts Into Code" recommends writing out in English exactly what a function is supposed to do before coding it. The idea of understanding a function's behavior before typing in C is very old, though in some corners today is getting deprecated. Unfortunately.
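Going back to the De Morgan example above: with only two Boolean inputs, the equivalence of the two forms can be checked by walking the whole truth table. The sketch below does that in C; the function names are made up for the illustration.

```c
#include <stdbool.h>

/* The original form from the example. */
bool original_form(bool file_exists, bool is_protected)
{
    return !(file_exists && !is_protected);
}

/* The De Morganized form. */
bool simplified_form(bool file_exists, bool is_protected)
{
    return !file_exists || is_protected;
}

/* Walk the whole truth table; true if the two forms always agree. */
bool forms_are_equivalent(void)
{
    for (int f = 0; f <= 1; f++) {
        for (int p = 0; p <= 1; p++) {
            if (original_form(f != 0, p != 0) != simplified_form(f != 0, p != 0)) {
                return false;
            }
        }
    }
    return true;
}
```

An exhaustive check like this is cheap insurance whenever a condition is rewritten by hand, since a dropped negation is easy to miss by eye.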

Many amusing cartoons, some pertinent, are scattered throughout.

The price of $29 from Amazon is too high for this very-quickly read book. But it is a gem and offers a lot of very solid advice. I recommend it.

Balancing Agility and Discipline: A Guide for the Perplexed, by Barry Boehm and Richard Turner

While at the Agile 2007 conference in Washington DC a few months ago I picked up a copy of Balancing Agility and Discipline, by Barry Boehm and Richard Turner. It's enticingly subtitled "A Guide for the Perplexed."

The title itself is a bit perplexing as most experienced agilists endorse practicing the agile methods with discipline, and will rail against those who plaster an 'agile' label over a shop that is really using glorified hacking.

The book starts with an overview of the software engineering landscape and delves lightly into the two, often at-odds, camps of agile 'versus' plan-driven approaches. They do a good and balanced job of dispelling myths surrounding both, myths that inevitably arise when agendas displace careful reasoning. From there it looks at typical days in the lives of practitioners of the two approaches, and then examines a couple of case studies. All were large projects, and all found that some combination of agility and conventional approaches was needed.

And perhaps that's the principal message of the book: as projects grow, successful teams draw on the best practices of both plan-driven and agile approaches.

The real meat is in chapter 5 in which they describe a risk-analysis approach to help developers and managers find a reasonable, balanced approach based on five parameters about a project and the team. Those are: the quality of the developers, the size of the team, the rate at which requirements change, the impact of a failure, and the nature of the team's culture.

The main text ends after just 160 pages, but another 70 of appendices will be of interest to some. One is a very superficial introduction to a lot of agile methods. Another describes the capability maturity model. If you're not familiar with these methods read these chapters before tackling the book as the authors assume some familiarity with both approaches. It doesn't hurt to have a sense of the team software process as well.

$40 is a lot to pay for the couple of hours one will spend reading this book, especially when much of it is a rehash of information most of us already know. I'd sure like to see their risk analysis approach boiled down to a long article. But the book is interesting and illuminating. The authors, having no ax to grind, dispassionately examine the benefits of both software development approaches.

Bebop to the Boolean Boogie, Clive Maxfield

Bebop is the MTV version of an embedded how-to book. It's fun. It's a fast read. You'll find neither calculus nor much about basic electronics. Its focus is entirely on logic design, and it is designed as a primer for those without much grounding in this area.

Hardware designers who have been at this for a couple of years probably have the material down pat. It seems, though, that the embedded world is evolving into two camps - digital design and firmware - with less and less communication between the two. Increasing specialization means there are fewer people who can deal with system-wide problems. If you are an embedded software guru who just doesn't understand the electronics part of the profession, then get this book and spend a few delightful hours getting a good grounding in digital design basics. Then watch the startled looks as your water cooler discussions include comments about state machine design.

Bebop covers all the basic bases, from the history of number systems (much more interesting than the tiresome number system discussions found in all elementary texts), to basic logic design, PALs, and even PCB issues. I often run into engineers who have no idea how chips are made - the book gives a great, wonderfully readable, overview of the process.

Its discussion of memories is fast-paced and worthwhile. A chapter about DRAM RAS/CAS operation would be a nice addition, as would something about Flash, but there's a limit to what you can pack into 450 pages.

I found the chapter about Linear Feedback Shift Registers the most interesting. This subject never goes away. It pops up constantly on the embedded systems Internet listservers (comp.realtime and comp.arch.embedded), often under the guise of CRCs. These pages are worthwhile even for experienced digital engineers.
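For readers who have not met one, a linear feedback shift register is only a few lines of C. The sketch below is not taken from the book: it assumes a 16-bit Fibonacci-style register using a widely cited maximal-length tap set, and the function names are hypothetical.

```c
#include <stdint.h>

/* One step of a 16-bit Fibonacci LFSR with taps at bits 16, 14, 13 and 11
 * (polynomial x^16 + x^14 + x^13 + x^11 + 1). State should be nonzero,
 * because an all-zero register stays at zero forever. */
uint16_t lfsr16_step(uint16_t state)
{
    uint16_t bit = ((state >> 0) ^ (state >> 2) ^ (state >> 3) ^ (state >> 5)) & 1u;
    return (uint16_t)((state >> 1) | (bit << 15));
}

/* Count steps until the register returns to its starting state. */
uint32_t lfsr16_period(uint16_t seed)
{
    uint16_t state = seed;
    uint32_t steps = 0;
    do {
        state = lfsr16_step(state);
        steps++;
    } while (state != seed);
    return steps;
}
```

Starting from any nonzero seed, this tap set visits all 65535 nonzero states before repeating, which is why such registers turn up in pseudo-random generators. CRC calculations rest on the same shift-and-XOR idea.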

And yes, the seafood gumbo recipe in Appendix H is worth the price of the book alone.

Better Embedded System Software, Phil Koopman

Phil Koopman has a new book out as well, titled Better Embedded System Software. I don't know Phil personally but we have been corresponding for years. His work includes a lot of research into dependable embedded systems, and I recommend his papers on the subject at http://www.ece.cmu.edu/~koopman/.

Better Embedded System Software is a broad work covering firmware development from soup to nuts. Some might view it as an anti-agile tome as Phil goes on at length about paperwork and other associated work products that are often scorned by some in the agile community. Yet he's right. In the decades I've worked with developers I've seen that one of the biggest causes of failed projects is a lack of planning, a dearth of forethought, and the complete abandonment of documentation. Is this anti-agile? I don't think so; in my opinion there's a balance that needs to be struck between a total focus on the code and one that is more plan-driven. And that balance will vary somewhat depending on the nature of the product.

Better Embedded System Software includes five chapters about requirements, a subject that is universally-neglected in most firmware books (including mine). Yet if you don't know what you're trying to build - in some detail - you can't build it.

The chapters on engineering a real-time system are worth the price of admission. One succinctly discusses a number of different scheduling approaches, and unusually for an embedded book, shows how to do the math to understand schedulability issues. And everyone knows it's costly to build systems with few spare resources... but Phil gives a number of graphs that put numbers to the anecdotes. His thoughts on globals, mutexes and data concurrency are essential reading for anyone who has not (yet) run into the nearly-impossible-to-find challenges that result from poor use of shared resources.
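As a taste of what schedulability math looks like, here is a sketch of the classic Liu and Layland utilization test for rate-monotonic scheduling. It is not lifted from Koopman's book; the struct and function names are invented, and it assumes independent periodic tasks whose deadlines equal their periods.

```c
#include <stdbool.h>
#include <stddef.h>

/* Task described by worst-case execution time and period, same time units. */
struct task {
    double wcet;
    double period;
};

/* Total CPU utilization: sum of wcet/period. */
double total_utilization(const struct task *tasks, size_t n)
{
    double u = 0.0;
    for (size_t i = 0; i < n; i++) {
        u += tasks[i].wcet / tasks[i].period;
    }
    return u;
}

/* Liu and Layland sufficient test: U <= n * (2^(1/n) - 1).
 * Rearranged as (1 + U/n)^n <= 2 so no math library call is needed. */
bool passes_rm_utilization_bound(const struct task *tasks, size_t n)
{
    if (n == 0) {
        return true;
    }
    double base = 1.0 + total_utilization(tasks, n) / (double)n;
    double power = 1.0;
    for (size_t i = 0; i < n; i++) {
        power *= base;
    }
    return power <= 2.0;
}
```

The test is sufficient but not necessary: a task set that passes is schedulable under rate-monotonic priorities, while one that fails may still be schedulable and needs a more exact analysis.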

The book delves into the important and oft-neglected math behind reliability, MTBF and redundancy. He doesn't mention Nancy Leveson's work on redundancy. She found that having multiple systems built by independent teams may not offer much of an advantage, since so many problems stem from errors in requirements.
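The textbook redundancy calculation is short enough to sketch. The function below is an illustration with a made-up name, not code from the book, and it rests on the assumption that the redundant units fail independently. That assumption is exactly what the finding about shared requirements errors calls into question.

```c
/* Reliability of n redundant units in parallel, each with reliability r
 * (probability of surviving the mission), assuming independent failures.
 * The system fails only if every unit fails. With n == 0 the result is 0. */
double parallel_reliability(double r, unsigned n)
{
    double all_fail = 1.0;
    for (unsigned i = 0; i < n; i++) {
        all_fail *= (1.0 - r);
    }
    return 1.0 - all_fail;
}
```

Two units that are each 90% reliable give 99% on paper. If both were built from the same flawed requirement, the real figure can be much closer to the 90% of a single unit.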

The chapters are short, pithy, and full of specific recommendations, the highlights of which are displayed as take-aways in boxes that jump from the page. Alas, it's not a pretty book; the publisher should have enshrined such great content in a more attractive volume.

I advise reading James Grenning's book in concert with Phil's. The two advocate somewhat different, though not incompatible, approaches to development. Which is right? Both and neither. Each team has their own needs. Wise engineers will be familiar with many approaches, and will pick the best practices for their needs from the firehose of ideas.

Building Parallel, Embedded, and Real-Time Applications with Ada, John McCormick, Frank Singhoff and Jerome Hugues

"Building Parallel, Embedded, and Real-Time Applications with Ada," by John McCormick, Frank Singhoff and Jerome Hugues is a new book on Ada that's specifically meant for firmware developers.

This book does teach the Ada language, to a degree. But Ada newbies will find some of it baffling. The prose will clearly explain a code snippet but still leave the uninitiated puzzled. For instance, when this line is first used:

    with Ada.Text_IO; use Ada.Text_IO;

... the first portion is well-explained, but one is left wondering about the meaning of the apparent duplication of Ada.Text_IO.

The authors recommend using one of a number of other books for an introduction to the language, and they give a number of specific suggestions. Also suggested is the Ada Reference Manual (ARM), which is truly complete. And enormous (947 pages). And not organized for the novice. I find the ARM more accessible than the C standard, but it's not a fun beach read. Actually, it's not much fun at all.

But chapter 2 of "Building Parallel, Embedded, and Real-Time Applications with Ada" does contain a good high-level introduction to the language, and the section on types is something every non-Ada programmer should read. Many of us grew up on assembly language and C, both of which have weak-to-nonexistent typing. If you've vaguely heard about Ada's strong types you probably don't really understand just how compelling this feature is. One example is fixed-point, a notion that's commonly used in DSP applications. On most processors fixed point's big advantage is that it's much faster than floating point. But in Ada fixed point has been greatly extended. Want to do financial math? Floats are out due to rounding problems. Use Ada's fixed point and just specify increments of 0.01 (i.e., one cent) between legal numeric representations. Ada will ensure numbers never wind up as a fraction of a cent.
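C has nothing like Ada's fixed-point types, so the nearest equivalent is a hand-rolled convention: hold money as a whole number of cents. The sketch below shows that idea. The type and function names are hypothetical, and unlike Ada the compiler will not stop anyone from mixing a cents_t with an ordinary integer.

```c
#include <stdint.h>

/* Money held as a whole number of cents, i.e. a fixed step of 0.01. */
typedef int64_t cents_t;

/* Build an amount from dollars and cents (non-negative inputs assumed). */
cents_t money_make(int64_t dollars, int64_t cents)
{
    return dollars * 100 + cents;
}

/* Price of `quantity` items; stays exact because everything is an integer. */
cents_t money_times(cents_t unit_price, int64_t quantity)
{
    return unit_price * quantity;
}

/* Split an amount back into dollars and cents for display. */
void money_split(cents_t amount, int64_t *dollars, int64_t *cents)
{
    *dollars = amount / 100;
    *cents = amount % 100;
}
```

Three items at 19.99 come to exactly 5997 cents, with no fraction of a cent anywhere. The difference from Ada is that here the discipline lives in the programmer's head rather than in the type system.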

About a quarter of the way into the book the subject matter moves from Ada in general to using Ada in embedded, real-time systems, which seems to get little coverage elsewhere even though the language is probably used more in the embedded world than anywhere else. Strong typing can make handling bits and memory mapped I/O a hassle, but the book addresses this concern and the solutions ("solutions" is perhaps the wrong word as the language has resources to deal with these low-level issues) are frankly beautiful.

I've always liked David Simon's "An Embedded Software Primer," for its great coverage of real-time issues. "Building Parallel, Embedded, and Real-Time Applications with Ada" is better at the same topics, though is a more demanding read. The two chapters on communications and synchronization are brilliant.

Chapter 6 covers distributed systems, one of Ada's strengths. The couple of other Ada books I have don't mention distributed systems at all, yet computing has been taken over by networks of computers and many-core processors. For instance, Ada provides pragmas and other resources to control activities distributed among various processors either synchronously or asynchronously. The book does a good job of showing how CORBA can address some of the ambiguity and unspecified mechanisms in Ada's Distributed Systems Annex (an extension to the Ada standard). This is not simple stuff; the book is excellent at getting the ideas across but expect to be challenged while digging through the example code.

Chapter 7 is "Real-time systems and scheduling concepts." It's a must-read for anyone building real-time systems in any language. The authors cover rate-monotonic and earliest-deadline-first scheduling better than any other resource I've read. They show how one can use a little math to figure worst case response time and other factors. Ada is not mentioned. But later chapters show how to use Ada with multitasking. Also somewhat unusual in Ada tomes, the book does cover the Ravenscar profile. Ravenscar disables some Ada features to reduce some of the overhead, and to make static analysis of a program's real-time behavior possible.
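The worst-case response time calculation mentioned above is usually done with a small fixed-point iteration. The sketch below is a generic version in C, not the book's presentation; the names are invented, and it assumes fixed-priority preemptive scheduling, positive integer times, deadlines equal to periods, and no blocking or jitter.

```c
#include <stddef.h>

/* Periodic task; the array passed in must be sorted highest priority first. */
struct rt_task {
    unsigned wcet;    /* worst-case execution time, assumed > 0 */
    unsigned period;  /* period, also used as the deadline, assumed > 0 */
};

/* Worst-case response time of task `i`, found by iterating
 * R = C_i + sum(ceil(R / T_j) * C_j) over all higher-priority tasks j
 * until R stops changing. Returns 0 if the deadline is exceeded. */
unsigned response_time(const struct rt_task *tasks, size_t i)
{
    unsigned r = tasks[i].wcet;
    for (;;) {
        unsigned next = tasks[i].wcet;
        for (size_t j = 0; j < i; j++) {
            /* Number of times task j can be released within r. */
            unsigned releases = (r + tasks[j].period - 1) / tasks[j].period;
            next += releases * tasks[j].wcet;
        }
        if (next > tasks[i].period) {
            return 0;   /* misses its deadline */
        }
        if (next == r) {
            return r;   /* converged */
        }
        r = next;
    }
}
```

For three tasks with (execution time, period) of (1, 4), (2, 6) and (3, 12), the lowest-priority task converges to a response time of 10, inside its period of 12. That set has a utilization of about 83%, so it shows the exact analysis accepting a task set that a simple utilization bound would leave undecided.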

As most people know, Ada has its own multitasking model. But some of this is specified by the standard as optional, and so the book addresses using POSIX as an alternative.

The book is very well-written, though sometimes a bit academic. The use of language does tend towards precision at the occasional cost of ease-of-reading. Every chapter ends with a bulleted summary, which is excellent, and a set of exercises. I don't find that the latter adds anything as no solutions are given. In a classroom environment they would make sense.

A lot of us get set in our ways. Even if you never plan to use Ada it makes sense to stretch the neurons and explore other languages, other approaches to solving problems. Heck, a friend in Brazil gave me a book about the Lua language which was quite interesting, though I've never written a single line of Lua and probably never will. "Building Parallel, Embedded, and Real-Time Applications with Ada" is one of those volumes that makes you think, especially about the hard problems (like real-time, multitasking and multicore) facing the firmware world today. I recommend it.

Have you explored alternatives to C/C++? What do you think of them?

CMMI Survival Guide, Suzanne Garcia and Richard Turner

Then there's the 'CMMI Survival Guide,' by Suzanne Garcia and Richard Turner. It's not a step-by-step guide to the Capability Maturity Model Integration at all. In fact, there's virtually no description of the CMMI, and the authors assume the reader has pretty deep knowledge of the subject, which is described in detail in a 700 page doc (!) from the Software Engineering Institute. The book falters as a result; CMMI-novices wishing to 'survive' making a transition to the CMMI will struggle to understand the authors' advice.

The authors provide no compelling reasons to embrace the CMMI. Though heaps of praise for the process are given there are no actual or even anecdotal measures of how CMMI can help. You have to be pre-proselytized, so if you're not already a CMMI convert you'll quickly lose interest. For the book is not particularly engaging, and suffers from recursive book syndrome: you have to read the whole thing to get a sense of any part of it, a flaw the authors admit early on.

Each section starts with quotes that purport to be related to process improvement. Interestingly, these quotes are quite similar to the ones in Mike Cohn's Agile Estimating book.

The book isn't worth reading unless you're going to start the CMMI. And even then read it only after you get some CMMI training, because the lingo will confuse novices. It has very good references to useful resources, and it gives a moderately useful, though tough to understand, roadmap to CMMI success.

This is not really like a self-help book; you'll need a therapist (a process improvement consultant) as well.

If I were starting an outfit down the CMMI road I'd buy this book, and use it a little. Otherwise, give it a pass.

Computer Approximations, John Hart

Lest we forget, computers generally have no intrinsic knowledge of tangents and cosines. Either a runtime routine in a compiler's library implements an approximation routine or a co-processor (possibly buried inside the CPU's core) runs a hardware version of an approximation.

Many embedded applications make massive use of trig and other complex functions to massage raw data sampled by the system. Yet a number of these systems are bound by memory or processor constraints to the point where the programmer must write his own low level trig functions. Sometimes the overhead of C's floating point library is just more than a small system can stand.

Don't believe me? In the last two weeks three friends called looking for trig approximation algorithms. OK - one writes embedded compilers, and needed to tune his runtime package. The others were all squeezing complex computations into the tight confines of 8 bit microcontrollers.

The bible of applied math is The CRC Standard Mathematical Tables (CRC Press, West Palm Beach, FL). Whenever I can't remember a basic identity drilled unsuccessfully into my unwilling head I'll pull this volume out. Its section on series expansions, for instance, gives formulas we can use for computing any trig function. For example, sin(x) = x - (x**3)/3! + (x**5)/5! - (x**7)/7! + ...

A little thought shows this series may be less useful than we'd hope. Limited computer resources mean that at some point we'll have to stop summing terms. Stop too soon and get poor accuracy. Go on too long and get too much accuracy at the expense of computer time.
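The accuracy-versus-time trade is easy to see by coding the series with an adjustable number of terms. This is a plain truncated Taylor sum written for illustration, not one of the tuned approximations discussed later; the function name and interface are assumptions.

```c
/* sin(x) from the first n_terms terms of x - x^3/3! + x^5/5! - ...
 * Each term is built from the previous one to avoid computing factorials. */
double taylor_sin(double x, int n_terms)
{
    double term = x;   /* first term of the series */
    double sum = 0.0;
    for (int k = 0; k < n_terms; k++) {
        sum += term;
        /* Next term: multiply by -x^2 / ((2k+2)(2k+3)). */
        term *= -(x * x) / (double)((2 * k + 2) * (2 * k + 3));
    }
    return sum;
}
```

At x = 0.5 radians, two terms are off by roughly 3 parts in 10,000, while four terms (through x**7) are good to better than one part in 10**8. The error grows quickly as |x| increases, so a practical routine first reduces the argument to a small range.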

The art of computer approximations is establishing a balance between accuracy and computational burden. Nearly all commonly used approximations do use polynomials. The trick is figuring out the best mix of coefficients and terms. Though the math behind this is rather interesting, as practicing engineers who have to deliver a product yesterday most of us are more interested in canned solutions.

If you find yourself in a similar situation, run to the nearest Computer Literacy bookstore (or contact them on the Web at www.clbooks.com) and buy Computer Approximations by John F. Hart (1968, John Wiley & Sons, NY NY). To my knowledge there's no more complete book on the subject.

Though the book contains a detailed analysis of the art of approximating, the meat is in its Tables of Coefficients. There you'll find 150 pages of polynomial coefficients for square and cube roots, exponentials, trig and inverse trig, and logs. The coolest part of this is the large number of solutions for each function: do you want a slow accurate answer or a fast fuzzy one? Hart gives you your choice.

It's not the easiest book to use. Mr. Hart assumes we're all mathematical whizzes, making some of it heavy going. Do skim over the math section, and figure out Hart's basic approach to implementing an approximation. His notation is quite baffling: until I figured out that (+3) +.19400 means multiply .194 times 10**3 none of my code worked.
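To make the notation concrete, here is a tiny helper that turns a table entry of that form into an ordinary number. It is based only on the reading of the notation given above, with a hypothetical function name, so treat it as a sketch rather than a statement of how every table in the book is laid out.

```c
/* Convert a coefficient written as "(exp) mantissa" into a double:
 * value = mantissa * 10^exp. The exponent may be negative. */
double hart_coefficient(int exp10, double mantissa)
{
    double value = mantissa;
    for (int i = 0; i < exp10; i++) {
        value *= 10.0;   /* positive exponent: scale up */
    }
    for (int i = 0; i > exp10; i--) {
        value /= 10.0;   /* negative exponent: scale down */
    }
    return value;
}
```

So hart_coefficient(3, 0.19400) yields 194 to within floating-point rounding. Doing the conversion once, when the coefficient table is typed in, keeps it out of the run-time path.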

Unhappily this book is out of print. Sometimes you can find a used copy around; check Amazon (try this link) and other vendors. Also check out my free app note here.

Debugging, David Agans

This review was published in May 2011

Most book reviewers only tackle new releases. Not me; one of these days I want to review De Architectura by Marcus Vitruvius Pollio. Published around 25 BC it's possibly the oldest book about engineering. My Latin is pretty rusty but thankfully it's available in English from Project Gutenberg.

Debugging, by David Agans, is a bit more recent, having been published in 2002. In it, he extols his nine rules of troubleshooting anything, though the focus is really on hardware and software. The rules are:

- Understand the System

- Make it fail

- Quit thinking and look

- Divide and conquer

- Change one thing at a time

- Keep an audit trail

- Check the plug

- Get a fresh view

- If you didn't fix it, it ain't fixed

Dave devotes a chapter to each rule, adding a couple of bonus sections at the end, including a very helpful "View from the help desk." Diagnosing problems over the phone is especially challenging.

The book is well-written, folksy, and a very quick read. It's packed - packed - with war stories, most of which do a great job of illustrating a point.

Mostly I found myself nodding in agreement with his thoughts. However, he advocates reading the manual/databooks from cover to cover when looking for problems. That's great advice, but given today's 500 page datasheets and the deadline's screaming demands it's impractical in many cases. Geez, the OMAP datasheets are around 5000 pages each.

He also believes in "knowing your tools." Great advice. As an ex-tool vendor I was always frustrated by so many customers who mastered 5% of the product's capabilities, when other features would be so helpful. Alas, that problem probably ties into the 500 page datasheet/screaming deadline challenge. But so many tools offer so much capability that it makes sense to learn more than the minimum needed to set a breakpoint or to scope a signal.

He asks: "Did you fix it or did you get lucky?" And then says: "It never goes away by itself." Absolutely. A problem that mysteriously disappears inevitably mysteriously reappears, usually at the worst possible time.

Though old the book remains very topical, with just a tiny bit of dated material (like advice to be wary of in-circuit emulators, which are far less common now than of yore).

Reading this brought back many memories. His aphorisms, like "use a rifle, not a shotgun" were driven into us young engineers long ago and are still valid. Another oldie that's a favorite: "The shortest pencil is longer than the longest memory" (i.e., take notes). So anyone with experience will say "sure, I know all this stuff - why read the book?" The answer is that we may know it all, but it's too-often disorganized knowledge not used with discipline. Just as a tent revival meeting doesn't teach anyone anything they don't know, the book takes the known and organizes that knowledge, driving the message in deeply.

For newbies the book is even more important. Every new grad should read this.

It's interesting and fun. Recommended.

The Definitive Guide to the ARM Cortex-M3, Joseph Yiu

The kind folks at Newnes sent me a copy of Joseph Yiu's 2007 book "The Definitive Guide to the ARM Cortex-M3," which is, as the title suggests, all about ARM's newish embedded processor.

The Cortex-M3 is an important departure for ARM. It's tightly focused on embedded applications, particularly for microcontrollers. As such there's no cache (though licensees, the vendors who translate ARM IP into actual microcontrollers, can add it). Cache, while a nice speed enhancer, always brings significant determinism problems to real-time systems. The Cortex-M3 does not support the ARM instruction set, using Thumb-2 instructions to enhance code density. This architecture also includes a very sophisticated interrupt controller well-tailored to the needs of deeply-embedded real-time systems.

Given the importance of the Cortex-M3 this book fills an essential void. Sure, there's more detail available in data from ARM, but those data sheets are so huge it's hard to get a general sense of the nature of the processor.

Alas, the book has numerous typos and grammatical mistakes. Possibly the most egregious is in a sidebar which attempts to explain the difference between "bit-banding" and "bit-banging," where the author mixes up the two terms and defines bit-banding as the software control of a hardware pin to simulate a UART. But in general the errors are more annoying than important.
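For readers who haven't met the term: bit-banding gives each bit in a small region of memory its own word-sized alias address, so a single store can set or clear one bit. Here's a minimal sketch of the address arithmetic for the SRAM region, assuming the standard Cortex-M3 base addresses (0x20000000 for the bit-band region, 0x22000000 for its alias). The helper name is mine, not the book's, and the code only computes an address, so it runs on any host.

```c
#include <stdint.h>

/* Base addresses of the Cortex-M3 SRAM bit-band region and its alias region. */
#define SRAM_BB_BASE    0x20000000u
#define SRAM_ALIAS_BASE 0x22000000u

/* Hypothetical helper: returns the alias word address that maps to
   one bit (0-7) of the byte at byte_addr in the SRAM bit-band region. */
static uint32_t bitband_alias(uint32_t byte_addr, uint32_t bit)
{
    uint32_t byte_offset = byte_addr - SRAM_BB_BASE;

    /* Each byte spans 32 alias bytes (8 bits x 4 bytes per alias word). */
    return SRAM_ALIAS_BASE + (byte_offset * 32u) + (bit * 4u);
}
```

On real hardware, writing 1 or 0 to the word at the returned address sets or clears just that bit. Bit-banging, by contrast, is the software wiggling of a pin to fake a peripheral.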

I found the stream of "this might be" references to features a bit frustrating, but in fact the author is correct in his hesitancy. For ARM sells IP, not hardware, and it's up to the licensees to decide which features go into a particular device. And there's little on I/O for the same reason.

Unlike ARM's datasheets, the book includes very useful chapters on porting code from the ARM7 and setting up a development environment using Keil and GNU toolchains. For many developers the latter alone will make the book worthwhile.

An appendix lists the instruction set. Some instructions have very concise and detailed descriptions. Others, unfortunately, don't.

If you're not planning to use the Cortex-M3, but want to get insight into what will be an important arena in the embedded world, this book is the best resource I know. If you are using that architecture, it's sort of a "Cortex-Lite" but very useful introduction that you'll have to augment with the datasheets.

Digital Apollo, David Mindell

My dad was surprised to find that it was illegal to shovel Jones Beach sand into the back of his station wagon. Was he going to jail?

It was 1958. Two successful and surprising Sputnik launches had energized the political class and spawned engineering efforts to match or beat the Soviets' success. All the US had managed to loft was Explorer 1, which, at 31 pounds, was a mere 3% of the mass of Sputnik 2. But even in those very early days of spaceflight, when getting anything into even a near-Earth orbit seemed impossibly difficult, work was being done on a manned lunar landing. At Grumman on Long Island my dad was doing pre-proposal landing studies. They thought sand could simulate the lunar surface. The cop somehow didn't believe his wild story about traveling to the moon, but simply issued a warning and moved on, no doubt shaking his head in disbelief.

The word "lunatic" comes to mind.

But just over a decade later two astronauts managed to land and return safely. In another couple of years the country was bored by the concept; I remember watching a later mission blasting off in a quarter of the TV screen while a football game filled the rest.

It took just a decade to go from no space capability, through early manned launches, to a successful moon landing. Half a century later, we haven't been able to repeat that.

How did Kennedy's mandate succeed, especially given the primitive technology of the era? How did armies of mostly young engineers invent the spacecraft, the web of ground support infrastructure, and the designs of the missions themselves in such a short time?

The rich history of space exploration has been well-told in many books. My favorites include Carrying the Fire: An Astronaut's Journeys, by Michael Collins, the lonely pilot who stayed in orbit during Apollo 11's descent. Of all of the astronaut autobiographies this is the most literate and finely crafted.

Then there's Apollo 13 by Jeffrey Kluger and James Lovell. Lovell, of course, was the commander of that ill-fated mission. Though sometimes seen as a disaster, 13 strikes me as a MacGyver-esque tale of success. After a big explosion 200,000 miles from Earth, in the depths of space, their consumables running out, the crew and engineers on the ground cobbled together fixes that brought the mission home. That the fragile craft could sustain such major damage is a testimony to everyone involved in the program.

Tom Wolfe's The Right Stuff is the story of the astronauts themselves. Though innumerable books laud their bravery Wolfe captures the existential nature of the test pilot catapulted into a situation where mistakes just aren't an option. Like the others I've mentioned, it's highly readable and engrossing.

But these books, like most of the others on the market, are largely about the pilots who rode into space. They're shy on technical details. Just how did we navigate to the moon, anyway? Whizzing along at 2000 miles/hour, the moon moves about a quarter pi radians around the Earth in the three days of flight. How did managers grow the program from a dream to hundreds of thousands of workers and $25 billion in so few years?

Enter Apollo: The Race to the Moon by Charles Murray and Catherine Bly Cox. This page-turner (at least for geeks) juxtaposes a picture of a Mercury capsule being haphazardly trucked to the pad, swaddled in mattresses, with the monstrous crawler-transporter ponderously moving a Saturn V. But sadly, the book is out of print and used editions on Amazon start at a whopping $74.

One might think that after 40 years this subject would be exhausted, but Bill Schweber of Planet Analog recently alerted me about a new book called Digital Apollo by David Mindell. It falls into the ranks of the best Apollo books for geeks.

It's not unusual for a space book to reach back to the story of the X-15 or even Chuck Yeager's X-1 record-breaking flight, but in Digital Apollo Mindell starts with the story of the Wright Brothers. Yes, they are generally credited with inventing the airplane, but he focuses only on their experiments with wing warping and other forms of control. For the Wrights' pioneering work succeeded largely because they invented ways to steer a flying machine in three axes. And control, particularly as manifested in the Apollo Guidance Computer, is one of the two overarching themes of the book.

Digital Apollo starts with an initially somewhat boring investigation into test pilot psychology. Those flyers formed the cadre from which most of the astronauts were selected. Why should a modern reader care about some jet jockeys' inflated self-esteem? Mindell skillfully weaves that discussion into the nature of aircraft stability, instrumentation, and then into his second major theme: Who should control the vehicle? The pilot, or a computer?

He avoids the obvious question of why, if the automata are so good, we bother to include a person in the spaceship. That essentially political and philosophical debate belongs elsewhere. But it certainly goes to the nature of being human and what exploration means in the virtual age. Mindell does end with a captivating description of the 2004 meeting of the Explorers Club where famous folk like Aldrin, Piccard (not the one of Star Trek fame) and Hillary (not the one in the news) listened to Pathfinder's Stephen Squyres give a talk about exploring Mars while comfortably seated in an air-conditioned lab.

At the very first meeting of the Society of Experimental Test Pilots in 1957 some attendees expressed fear that they would be automated out of a job. Throughout the book it becomes clear that much of the engineering of the various spacecraft was dominated by the tension between traditional rocketeers, who felt everything should be automated, and the pilots. Some Mercury astronauts wanted to fly the rocket right off the pad.

If control is just keeping a needle centered on a gauge why stick a heavy human in the control loop? But those with the Right Stuff want to be in control, to take heroic action, and those desires impacted the space program in the 60s and still do today.

The USSR believed in automation and their authoritarian culture brooked no interference from mere cosmonauts. Gagarin, the first man in space, was blocked from his spacecraft's manual controls by a combination lock whose code was in a sealed envelope!

Despite Mercury being the smallest and most primitive of the Apollo triptych, pilots of those first six missions had the least control of all. All important operations were automatically sequenced. System failures forced Scott Carpenter to assume manual control. He saved the mission, but various delays pushed Aurora 7 some 250 miles off course. Some saw this as a success for those advocating the need for the pilot in the loop; others, loudly, blasted him for poor performance. The astronaut office rebelled at Mercury's spam-in-a-can design. On Gemini each mission's dynamic duo still had only systems management responsibility on launch, but were an integral part of the control loop in orbit. Armstrong and Scott saved their Gemini by reading a magnetic tape with reentry procedures into the computer.

The hero had been redefined as a boot loader.

About a hundred fascinating pages into the book Mindell begins to look at Apollo, and specifically the Apollo Guidance Computer (AGC), which was, other than software, virtually the same as the LM Guidance Computer. Recognizing the difficulty of controlling a spacecraft from the Earth to Lunar orbit, and thence down to the surface, NASA let the very first Apollo contract not to the spacecraft vendor, but to MIT's Instrumentation Lab for the AGC.

Oddly, everyone forgot about the code. The statement of work for the AGC said this about software: "The onboard guidance computer must be programmed to control the other guidance subsystems and to implement the various guidance schemes." That's it. Lousy requirements are not a new problem.

Another phenomenon that's not new: once management realized that some sort of software was needed, they constructed a procedure called a GSOP to specify each particular mission's needs. But in practice GSOPs were constructed from the code after it was written, rather than before.

If Doxygen had been around they could have saved a lot of time.

Flash memory hadn't been invented. Nor had EPROM, EEPROM or any of the other parts we use to store code. For reasons not described in the book (primarily size) traditional core memory wasn't an option, so the AGC stored its programs in core rope. Programs were "manufactured" -- the team had to submit completed tapes three months before launch so women could weave the programs into the core ropes that were then potted. Once built it was extremely difficult to change even a single bit.

The code was written in an interpreted language called BASIC, though it bore no similarity to the BASIC produced by Dartmouth. Listings of the AGC code show that even in the 60s software engineers used random names for labels, like KOOLADE and SMOOCH, the latter suggesting perhaps too many hours at the office.

There was never enough memory (32K of program space). Then, like now, developers spent an inordinate amount of time optimizing and rewriting code to fit into the limited space. Consider that a tiny 32K program controlled the attitude indicator, all thrusting and guidance, a user interface, and much more.

And what was the user interface? Parts of the UI included windows (not on a desktop, but those glass things) that were etched with navigational info. The computer prompted the astronaut with angle data; he looked out through the markings and designated new targeting parameters to the computer. Did the computer have a UI... or did the pilot? Who was driving what? The pilot was just another component in the system.

Mindell paints the moon flights as a background patina to the guidance theme. An entire chapter is devoted to Apollo 11, but other than describing how the astronauts used the stars to align the inertial reference platform the author ignores the flight to and from the moon. The chapter covers the landing sequence in detail -- some might say excruciating detail but I was riveted. Most remember the 1201 and 1202 alarms that caused great concern. It turns out that Aldrin wanted to leave the rendezvous radar (which was only needed to seek out the command module) turned on in case an emergency abort was needed. Program management signed off on this change, but no one told the software engineers. Data from the radar demanded computer attention that was in short supply, so it flashed the 1201 and 1202 codes indicating "I'm getting overwhelmed!" The AGC rejected low priority tasks but ran the landing activities correctly. That's pretty slick software engineering.
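To make the idea of shedding low-priority work concrete, here's a toy sketch of a scheduler that runs the most important tasks first and drops whatever no longer fits in the time available. This is emphatically not the AGC's actual executive, which the book doesn't list; the task record, the cost units and the function name are all my own assumptions for illustration.

```c
#include <stddef.h>
#include <stdbool.h>

/* Hypothetical task record. Tasks are assumed to be stored
   most important first. */
typedef struct {
    int  priority;  /* lower value = more important (informational) */
    int  cost;      /* time units the task needs this cycle */
    bool ran;       /* set by run_cycle() */
} task_t;

/* Walks the tasks in priority order, running each one that still fits
   in the remaining budget and shedding the rest. Returns the number of
   tasks shed; a nonzero result is the "I'm getting overwhelmed" alarm. */
static int run_cycle(task_t *tasks, size_t n, int budget)
{
    int shed = 0;

    for (size_t i = 0; i < n; i++) {
        if (tasks[i].cost <= budget) {
            budget -= tasks[i].cost;
            tasks[i].ran = true;
        } else {
            tasks[i].ran = false;
            shed++;
        }
    }
    return shed;
}
```

With a budget of 100 units and tasks costing 50, 30 and 40, the first two run and the third is shed: the critical work completes and the overload is reported rather than hidden.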

A single chapter covers the descent and landing phases of the next five missions (Apollo 13 is left out as it never entered lunar orbit). Many are familiar with the abort switch failure, later traced to a blob of solder, that could have ended 14's mission. That's described brilliantly, but the software patch is glossed over in a short barrage of words that left me feeling the author really didn't understand the fix.

This book is short on deep technical details about the AGC. For more on that see Journey to the Moon: The History of the Apollo Guidance Computer by Eldon C. Hall. But it provides a fascinating look at the human side of engineering, and how pilot demands (think "customer demands"), both reasonable and not, affect how we build systems. Those inputs, Mindell thinks, largely shaped the design of the Space Shuttle. He quotes astronaut Walter Cunningham about splashing down in the sea in Apollo: "[the crew were recovered] by helicopter like a bag of cats saved from a watery grave." And Cunningham's take on Shuttle: "[it] makes a smooth landing at the destination airport and the flight crew steps down from the spacecraft in front of a waiting throng in a dignified and properly heroic manner."

All in all a great read recommended to any techie fan of the space program.

The Digital I/O Handbook, Jon Titus and Tom O'Hanlan

The Digital I/O Handbook, by Jon Titus and Tom O'Hanlan, (ISBN: 09759994-0-0) is a 75 page introduction to using digital inputs and outputs with microprocessors. The book starts with a quick introduction to logic, which emphasizes the electrical, rather than the Boolean, nature of real devices. A chapter on outputs is equally practical. The authors talk about using buffer chips and transistor circuits to drive relays (solid state and otherwise) and optoisolators. The chapter on inputs talks about real-world problems like bounce and circuit isolation. You'll learn how to compute the values of pull-ups, LED resistors, and the like. The final chapter on interfacing to sensors walks the reader through using thermal switches, Hall-effect sensors, encoders and more.
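As a taste of the kind of calculation involved, here's a small sketch of the usual LED series resistor formula, R = (Vsupply - Vforward) / Iled. The function name and the example numbers are mine, not taken from the book.

```c
/* Series resistor, in ohms, for an LED: R = (Vsupply - Vforward) / Iled.
   Voltages are in millivolts and current in milliamps, so the quotient
   is in ohms. Returns 0 if the inputs make no sense. */
static unsigned led_resistor_ohms(unsigned supply_mv,
                                  unsigned forward_mv,
                                  unsigned led_ma)
{
    if (led_ma == 0 || forward_mv >= supply_mv) {
        return 0;
    }
    return (supply_mv - forward_mv) / led_ma;
}
```

For a 5 V supply, an LED that drops 2 V, and a 10 mA target current, this gives 300 ohms; in practice you'd pick the next standard value up.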

What I like most about the book is its mix of hardware and software. Most pages have a bit of code plus a schematic. All code snippets are in C. This is a great introduction to the tough subject of tying a computer to the real world. It's the sort of quick-start of real value to people with no experience in the field.

The Elements of Style, William Strunk and E. B. White

Software has two missions: to DO something, and to COMMUNICATE the programmer's intent to future maintainers, or to people wishing to reuse portions of the code. In my opinion, any bit of code that doesn't do BOTH of these things really well is totally unacceptable.

But the code itself, the C, C++ or whatever, is not particularly easy to read. Well written comments are the basic structure of any program. The comments describe the intent, the complexities, the tricks and the issues. Code just obfuscates these. Great comments are a basic ingredient of great code.

That means the comments have to be well written, in English (at least for those of us working in English-speaking countries), using the noun-verb form. Each sentence starts with an upper-case letter; the rest of the sentence uses appropriate cases. Comments that don't conform to the basic rules of grammar are flawed.

Donald Knuth talked about this at length. He felt that the literate programmer:

Is concerned with the excellence of style

Can be regarded as an essayist

With thesaurus in hand, chooses variable names carefully

Strives for a comprehensible program

Introduces elements in a way that's best for human understanding, not computer compilation.

And so, I've long believed the best programming book ever is 'The Elements of Style,' by William Strunk and E. B. White (Allyn & Bacon; ISBN: 020530902X). In a mere 105 pages the authors tell us how to write well. Just five bucks from Amazon.com. Or, you can get it for free at http://www.bartleby.com/141/index.html.

Unfortunately, most developers are notoriously bad at writing prose. We NEED the help that this book contains.

One rule: 'Use active voice'. Doesn't that sound better than 'the use of the active voice is required'? It's also shorter and thus easier to write! Another: 'Omit needless words,' a great improvement over 'writers will examine each sentence and identify, characterize, and excise words in excess of those essential to conveying the author's intent.'
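Here's how those two rules might look when applied to a comment. The routine is a made-up example of my own; only the contrast between the two comments matters.

```c
#include <stdint.h>

/* Wordy and passive:
 *   "The checksum of the buffer is caused to be computed by this
 *    function, and it is returned to the caller upon completion."
 *
 * Active, with the needless words omitted, as used below.
 */

/* Returns the 8-bit additive checksum of buf[0..len-1]. */
static uint8_t checksum8(const uint8_t *buf, unsigned len)
{
    uint8_t sum = 0;

    for (unsigned i = 0; i < len; i++) {
        sum = (uint8_t)(sum + buf[i]);  /* Wraps modulo 256 by design. */
    }
    return sum;
}
```

The short version says what the function returns and over what range, and nothing more.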

An appendix lists words that are often misused. English is quite a quirky language; it's easy to make really stupid mistakes. One example: mixing up the verb "effect", the noun "effect" and "affect".

The book makes an interesting comment about signing letters: 'Thanking you in advance.' This sounds as if the writer meant, "It will not be worth my while to write to you again." Instead write, "Thanking you," and if the favor which you have requested is granted, write a letter of acknowledgment. I like that!

My biggest pet peeve about poor writing is mixing up 'your' and 'you're.' I've seen billboards with these words confused - talk about advertising your ignorance!

The book gives 18 simple rules, far fewer than the nuns attempted to beat into my brain so long ago. Follow them and your comments, and thus the code, will improve.

Embedded Ethernet and Internet Complete, Jan Axelson

Jan Axelson's latest book extends her coverage of communications protocols from RS-232 to the PC's parallel port to USB to (in this volume) Ethernet and the Internet. Like all of her works, it's clear and complete.

Ms. Axelson starts with a brief overview of the basics of networking, including datagrams, protocols and the required hardware. She goes on to describe how routing is accomplished using both UDP and TCP/IP. Technical readers will already be familiar with much of this information, but it's presented in a very readable fashion that's easy to understand.

Much of the book covers serving up Web pages and working with dynamic data on the web. Sure, there's been plenty of coverage in hundreds of books of these subjects, but she presents everything in the context of embedded systems, especially systems with limited resources. And that's where the real strength of this book lies.

Like her previous work, this is a practical, how to use networking in an embedded system book. Two primary hardware platforms are covered: the Dallas TINI and Rabbit Semiconductor's RCM3200. I've used the Rabbit parts a lot, and find them powerful and fun to work with.

If you're already a networking whiz this book probably won't add much to your knowledge. But for those anxious to learn how to add Ethernet and/or Internet connectivity to any embedded system, especially small ones, the practical examples and clear writing will get you going quickly.

Embedded Ethernet and Internet Complete by Jan Axelson, ISBN# 1-931448-00-0.

Embedded Linux Primer - A Practical Real World Approach, Christopher Hallinan

The numbers are impressive: 45% of developers working on 32 bit processors report using Linux in an embedded app. And Wind River recently bought FSMLabs' IP for the embedded space, giving WRI a 'hard' real-time Linux distribution to complement VxWorks and their soft real-time Linux. I put 'hard' in quotes as the various Linux vendors continue to slug out the notion of real-time in the Linux environment. See "'Hard' real-time Linux deal under scrutiny" in the Feb 26, 2007 issue of EE Times for more on the imbroglio.

Now there's a book about putting the OS into firmware. 'Embedded Linux Primer, A Practical Real-World Approach' by Christopher Hallinan is one of those few books whose contents exactly match the title. It makes no attempt to introduce Linux to newbies, and is entirely focused on getting Linux running on an embedded system.

The chapter on building the kernel is interesting by itself as it gives some insight into how Linux is structured. Out of general curiosity I would have liked more information, from a higher-level perspective, but this how-to tome instead presents the details needed to do the work.

A long section on processors was not particularly useful. A lot of CPUs will run the OS, and the author doesn't point out particular advantages or downsides to any of the choices presented.

Bootloaders, including details of the ever-more-popular GRUB, get good coverage, as does Linux's initialization sequence, so one can understand what's going on as it spits out those 100+ lines of sometimes cryptic startup dialog.

The chapter on writing device drivers is short and could have been fleshed out in more detail, but does work through a useful example.

Most of the chapter on tools is too abbreviated to be of serious use to serious users of gdb and the like. But the chapter on debugging the kernel is worth the price of the book. Better, there's a section about what to do if your gleaming new OS doesn't boot.

Real-time issues are a real concern to a lot of firmware folks, and the author does a great job explaining both the problems and the solutions, including some profiling data to get a bit of tantalizing insight into what sort of latencies are possible. Unfortunately, the author doesn't list the platform, so one is left wondering if a 78 usec worst-case latency applies to a 3 GHz Pentium or a 100 MHz ARM.
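Why does the platform matter so much? A quick sketch of the arithmetic, converting a latency into CPU clock cycles. The function name is mine, and this counts raw clock cycles only; it says nothing about how many instructions either processor actually retires in that time.

```c
#include <stdint.h>

/* Clock cycles that elapse during a latency given in microseconds.
   A clock in MHz is cycles per microsecond, so the product is a
   plain cycle count. */
static uint32_t latency_cycles(uint32_t latency_us, uint32_t clock_mhz)
{
    return latency_us * clock_mhz;
}
```

At 3 GHz, 78 usec is 234,000 cycles; at 100 MHz it's 7,800. Those are very different stories about the quality of the kernel's real-time behavior.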

This book is not a beach read. It's complex stuff. You'll have a hard time finding many pages that aren't crammed with directory listings, code, make file extracts, and the arcane argot of those delving deep into Linux. But the author writes well and informatively. If you're planning to stuff Linux into your embedded system, get the book.

Embedded Systems Building Blocks, Jean LaBrosse

Jean LaBrosse is back with an update of his 'Embedded Systems Building Blocks' (R&D Books, ISBN 0-87930-604-1). I've always been a fan of his articles and books, and think this latest is a valuable addition to any embedded library.

The book is a collection of drivers for some of the more common embedded problems. It starts with a 40 page introduction to managing real time problems, largely in the context of using an RTOS. This section alone is worthwhile for anyone trying to learn about using an RTOS, though I'd also recommend getting his 'uC/OS-II, The Real Time Kernel'.

Included code (also on the companion CD-ROM) covers the following:

Keyboard handler, for keypads arranged in matrices that are software scanned.

Seven segment LED driver, for multiplexed arrays of LEDs

A complete LCD driver package for units based on Hitachi's HD44780 chip

A time of day clock package, which manages time in a year:month:day and hours:minutes:seconds format. It's Y2K compliant, to boot!

If your real time code works with multiple delay and timeout issues, his timer manager is a useful chunk of code. It manages up to 250 software timers; all are driven off a single timer interrupt source. His example shows this running from a 1/10 second interrupt rate; I'd be interested to see if it can be scaled to higher rates on reasonably small CPUs.

Discrete I/O drivers for inputs and outputs, with edge detection code. I like the way he abstracts the hardware to 'logical channels', which makes it so much easier to change things, and to create software stubs for testing before hardware is available.

Fixed point math - one of the best discussions I've seen on this subject, which is critical to many smaller embedded apps. Fixed point is a sort of poor man's floating point: much smaller code that runs very fast, but you sacrifice resolution and range.

ADC and DAC drivers, with a good discussion of managing these beasts in engineering units rather than un-scaled bits.
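To show how the fixed point and engineering-units ideas from the list above fit together, here's a minimal sketch that converts raw ADC counts to millivolts using a Q16.16 scale factor and integer math only. It is not code from the book; the type and function names, the 3300 mV reference and the 10-bit converter are all assumptions chosen for illustration.

```c
#include <stdint.h>

/* Q16.16 fixed point: 16 integer bits, 16 fraction bits. */
typedef int32_t q16_t;

/* Assumed hardware: 3300 mV full scale over 1024 counts (10-bit ADC).
   3300 / 1024 = 3.22265625 mV per count, which is 211200 in Q16.16. */
#define MV_PER_COUNT_Q16 ((q16_t)((3300LL << 16) / 1024))

/* Converts a raw ADC reading to whole millivolts. The 64-bit
   intermediate keeps the product from overflowing; the shift
   discards the 16 fraction bits (truncating, not rounding). */
static int32_t adc_to_millivolts(uint16_t counts, q16_t mv_per_count)
{
    return (int32_t)(((int64_t)counts * mv_per_count) >> 16);
}
```

A mid-scale reading of 512 counts comes out as 1650 mV. The rest of the application can then work in millivolts and never needs to know the converter's width.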

An appendix includes Jean's programming conventions, a firmware standard. I passionately feel that firmware standards are the starting point of writing decent code. An alternative standard is available at www.ganssle.com/misc/fsm.doc.

Much of the code is targeted at applications using the uC/OS RTOS. It's rather easy to port the code to any RTOS, and in many cases even to use it with no RTOS (though you'll have to delete some of the OS function calls).
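As an illustration of how little such a building block needs when there's no RTOS, here's a bare-bones sketch of several software timers driven from one periodic tick, the same general idea as the timer manager described earlier. This is my own simplification, not Jean's code; the names and the tiny table size are assumptions.

```c
#include <stdint.h>
#include <stdbool.h>

#define NUM_TIMERS 4u   /* kept small for illustration */

/* Remaining ticks for each timer; 0 means idle or expired. */
static volatile uint16_t timer_ticks[NUM_TIMERS];

/* Loads a timer with a delay measured in ticks. */
static void timer_start(unsigned id, uint16_t ticks)
{
    if (id < NUM_TIMERS) {
        timer_ticks[id] = ticks;
    }
}

/* Call this from the single periodic timer interrupt. */
static void timer_tick_isr(void)
{
    for (unsigned i = 0; i < NUM_TIMERS; i++) {
        if (timer_ticks[i] > 0) {
            timer_ticks[i]--;
        }
    }
}

/* True once the timer has counted down to zero. */
static bool timer_expired(unsigned id)
{
    return id < NUM_TIMERS && timer_ticks[id] == 0;
}
```

On a CPU that can't read or write a 16-bit value atomically, the non-interrupt code would need to disable interrupts briefly around its accesses to the table.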

When I read the first version of his book my gut reaction was 'well, I could write this stuff easily myself.' That's true; most of this code is not terribly complex. But why bother? Why re-invent the wheel? The best developers find ways to buy, recycle, and borrow code, rather than write every last routine.

On to more book reviews.